How to improve at SQL as a data engineer

- 1. Introduction

- 2. SQL skills

- 3. Practice

- 4. Conclusion

- 5. Further reading

- 6. References

1. Introduction

SQL is the bread and butter of data engineering. Mastering SQL and understanding what can be done with it can make you a better data engineer. If you feel

Unsure about how to improve at SQL beyond the basics

That online tutorials don’t go deep enough

That you are missing some key SQL skills, but can’t quite put your finger on it

Then this post is for you. In this post, we will go over the concepts that can help you improve your SQL. Understanding, applying, and practicing these concepts can help you level up your SQL skills.

Pre-requisite: What are facts and dimensions? , sql tutorial

If you are interested in learning to write efficient SQL for data processing, checkout my e-book: Efficient Data Processing in SQL

Note checkout this repo that goes over the advanced SQL querying in detail.

2. SQL skills

As a data engineer, your SQL skills are crucial for data modeling and/or analytics tasks. We will concentrate solely on data warehouses for this post.

2.1. Data modeling

As a data engineer, you will be asked to make data available for querying. This will involve ingesting the raw data, cleaning it, modeling it, and making it available for your end-users.

2.1.1. Gathering requirements

Before designing the warehouse table(s), you should always clearly define the end objectives.

Some questions you need answered/explored are

- What does this data represent and why is it needed?

- Who is the end-user of the table(s)?

- What is the business process that generates this data? How is this data generated?

- A few (>= 3) different example queries that the end-user is expected to run?

- What is the expected number of read queries per minute?

- What is an acceptable query execution time for reading from the table(s)?

- What is the expected number of daily records?

- What is the general date range (and/or other) filters for the read queries?

- What is the historical range of data that needs to be available for querying?

Answers to these questions will determine how you model and transform the data.

2.1.2. Exploration

The next step is to explore the data, check for any data issues, validate assumptions, approximate data size growth, validate business rules, check for missing/duplicate rows on joins, etc

You will need to load the raw data into your data warehouse. There are multiple ways to ingest data into a data warehouse . For exploration, dump the data into a cloud storage system and use a COPY INTO command to load raw data into your data warehouse.

Some points you need answered/explored are

Data schema checks- Are data types consistent with the columns?

- Are column names consistent?

Data quality checks- Were all the records in the raw file loaded into the raw table? Use

wc -l input_data_file.csvto count the number of lines in the input data. - Check for absence of column values such as

NULL, null, 'null', '', N/A,etc - Do any of the column values have a field delimiter within them? Most data warehouses have options to handle these, e.g. quote_character , FIELD_OPTIONALLY_ENCLOSED_BY .

- Were all the records in the raw file loaded into the raw table? Use

Validate business assumptions- If you join this data with other business-relevant tables, do you get unexpected duplicates or missing rows? If so, why?

- If you aggregate by some id and aggregate numeric columns in a fact table, are the aggregates accurate? Or does it cause doubles/undercounting? If so, how can you prevent it?

- Does the number of rows per day (and/or other business entities) show clear patterns? (including seasonality)

- Do all the tables have a unique id?

- For every business entity table (aka dimension), is there a table that records every update made to that table?

- Be aware of values with specific meaning. E.g. sometimes -9999 (or similar) can be used to denote NULL or other values.

This will be an ongoing process. Since the data generation process upstream can change, you may find additional data issues, etc.

2.1.3. Modeling

With knowledge of the requirement and data issues, you are all set to model the end-user table(s). The standard approach is to have fact and dimension table(s) . This type of data modeling has the advantage of being able to answer most queries. The downside is that this may require multiple joins, and can be a lot of work to manage.

Some points you need answered/explored are

Naming conventions: Each company has its standard naming convention. If you don’t, make sure to establish this standard. (e.g. naming standard ).Slowly changing dimensions: Most business entity tables (aka dimensions) have attributes that change over time. Consider creating an SCD2 table to capture historical changes.In-correct aggregates: Running aggregates on any numeric values of the fact table(s) should not produce duplicate/inaccurate results. This is usually a result of having the data representing different columns in one column.Pre-aggregating data: At times, the expected query pattern requires data to be rolled up to a higher granularity. In these cases, if your read time is longer than the requirement, you may want to pre-aggregate your data on a set schedule. Pre-aggregating the data will allow “read queries” to be much faster but introduces the additional overhead of creating, scheduling, and maintaining a data pipeline.Flat tables: Although the Kimball Model is very popular, it can get tedious for the end-user to query and join multiple tables. A way for the data team to provide a clean interface for the end-user is to create a wide flat table (or view). A flat table is a table with all the facts and dimensional columns. The end-user does not need to worry about joining multiple tables and can concentrate on analyzing the data.

Note: In a flat table, if some dimensional attributes change over time, then running a group-by query on those may produce inaccurate results. You can circumvent this by having 2 tables/views one with point-in-time dimensional attributes and the other with the most recent dimensional attribute.

Read the data warehouse toolkit if you have not already. This book will help you level up your modeling skills.

2.1.4. Data storage

Storing data in the right format can significantly impact your query performance. When modeling your end-user tables, make sure to consider the impact of data storage on read-type queries.

It’s crucial to understand the following concepts.

Partitioning: Partitioning /Clustering , can significantly reduce the amount of data scanned and hence reduce the cost.Storage formats: Such as Parquet , or ORC formats can significantly reduce data size and speed up transformations.Sorting: Sorting can also reduce the amount of data to be read and make transformations efficient.Cloud storage: External tables allow for data to be stored in a cloud storage system and read when necessary.

Every data warehouse has different naming/implementation/caveats concerning the above, e.g. Snowflake automatically does most of these are for you, while Redshift requires a more hands on approach .

Read your data warehouse documentation.

2.2. Data transformation

It’s time to transform the raw data into the end-user data model. Transformations can affect

- Data processing time.

- Data warehouse cost. Modern data warehouses usually charge based on the amount of data scanned .

- Data pipeline development speed and issues.

When thinking about transformations, it’s helpful to think about the following questions:

- How will the stored data be pulled into memory (of the warehouse engine) and processed?

- How can we examine the warehouse engine plans for executing our transformations?

2.2.1. Transformation types

The ultimate goal for optimizing transformations is to reduce the movement of data within your data warehouse. Data warehouses are distributed systems with the data stored as chunks across the cluster. Reducing the movement of data across the machines within the distributed system significantly speeds up the processing of data.

There are two major types of transformations as explained below.

2.2.1.1. Narrow transformations

These are transformations that do not involve the movement of data across machines within the warehouse. The transformations are applied to the rows without having to move these rows to other machines within the warehouse.

E.g. Lower(), Concat(), etc are functions that are applied directly to the data in memory

2.2.1.2. Wide transformations

These are transformations that involve the movement of data across machines within the warehouse.

E.g. When you join 2 tables, the warehouse engine will move the smaller table’s data to the same machine(s) as the larger table’s data. This is so that these 2 tables can be joined. Moving data around is a high-cost operation in a distributed system, and as such, the warehouse engine will optimize to keep the data movement to a minimum.

When self-joining, it’s beneficial to join on the partitioned column(s) as this will keep data movement within the system to a minimum.

Some common transformations to know are

- Joins , anti joins

- String, numeric, and date functions

- Group by, aggregates, order by, union, having

- CTEs

- Window functions

- Parsing JSON

- Stored procedures, sub queries and functions

Some points you need answered/explored are

- How does transformation time increase with an increase in the data size? Is it linear or worse? Hint: A cross join will not scale linearly

- Read the data warehouse documentation to know what features exist. This allows you to go back to the docs in case you need to use a feature. Most transformations can be done within your data warehouse.

- When evaluating performance be aware of cached reads on subsequent queries.

- When possible, filter the data before or during the transformation query.

- Most SQL queries are a mix of wide and narrow transformations.

2.2.2. Query planner

The query planner lets you see what steps the warehouse engine will take to run your query. You can use the EXPLAIN command to see the query plan.

Most data warehouse documentation has steps you can take to optimize your queries. E.G. Snowflake’s common query issues , Redshift’s query plan and execution

In short

- Use explain to see the query plan.

- Optimize the steps that have the highest costs. Use available warehouse documentation for optimization help.

2.2.3. Security & Permissions

After the data asset is created make sure to grant read access to the end-user. If you have PII information in your warehouse, it needs to be anonymized. Make sure to follow the legal steps when dealing with sensitive data, GDPR, HIPAA, etc.

The access model differs across warehouses. Irrespective of what access model you choose, make sure to save the queries that grant access in git. There are also terraform providers for warehouses.

2.3. Data pipeline

This involves knowing best practices when setting up a data pipeline. See this article

that goes over best practices, naming, and data quality testing in your data warehouse and this article

that goes over CI/CD permission structure.

2.4. Data analytics

Once you have the data modeled and available to your end-users, you will get questions regarding the data and how to use it. These questions will usually fall under one of the patterns below:

- How-tos

- Why is X (not) happening?

- Why is X (not) happening despite Y?

- Is the data wrong? (This will involve asking clarifying questions to the end-user)

- Why is there a change in a certain trend?

- Why does X happen for all business entities but not for this one specific entity?

Answering these types of questions will generally involve:

- Clarifying the question.

- Validating if the claim by the end-user is accurate.

- Understanding and validating the end-user query.

- Checking if any required filters were missed.

- If the question is a how-to type, you will need to write a query.

- Checking that the data pipeline that generates the data has properly run or not.

- Investigating the data to be able to answer the question. Sometimes, there may not be enough data to answer the question. This is an opportunity to set up a process to capture that data.

You will use your knowledge of data , storage , transformation and data pipeline to answer these types of questions.

Leetcode SQL problems are a good place to practice analytical querying.

3. Practice

Deliberate practice is one of the best ways to get good at SQL.

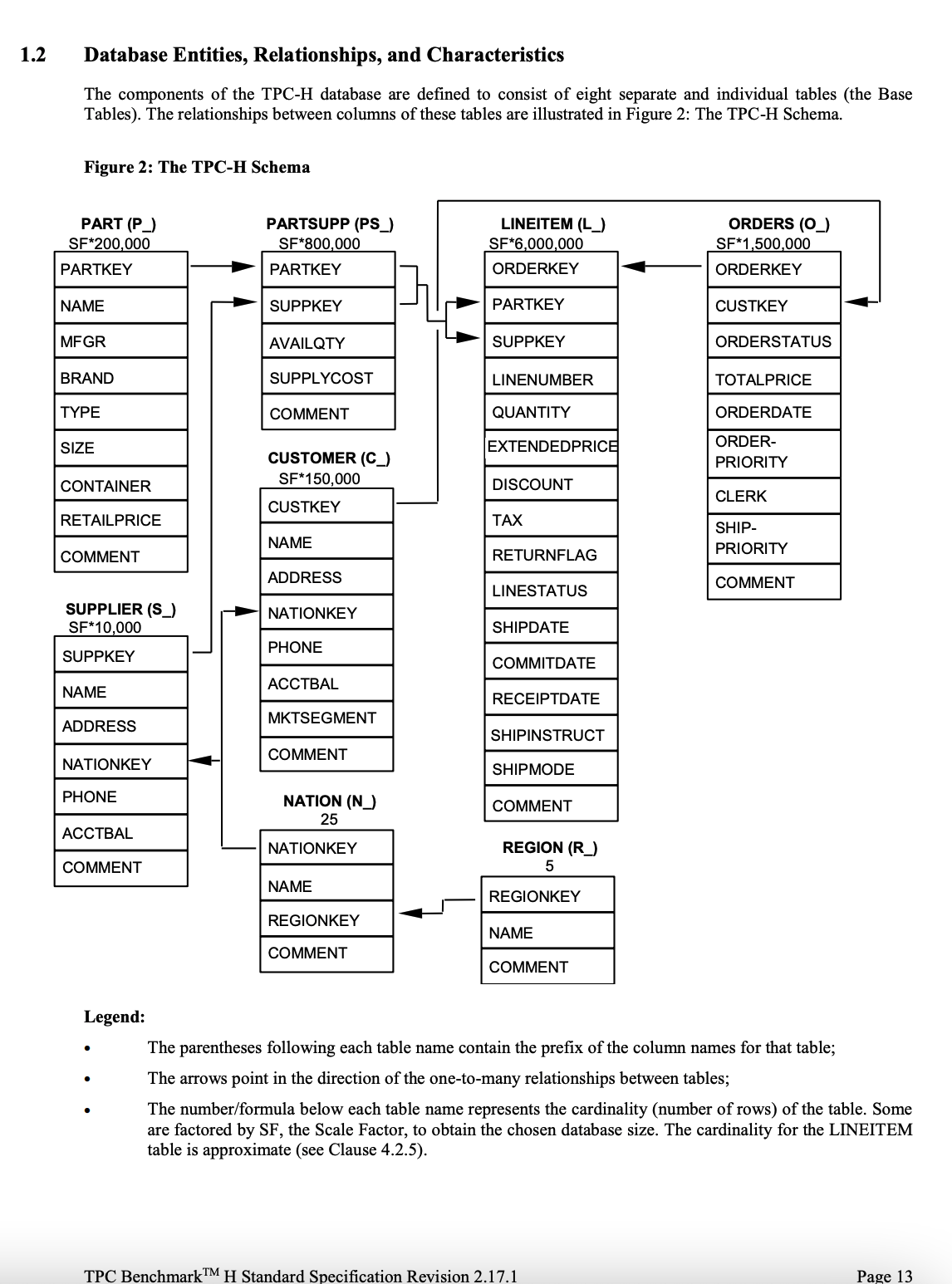

TPC-H is a popular dataset used to benchmark data warehouse performance. The data schema is shown below.

The data can be generated using the tpch-dbgen tool. You can install it and start generating data as shown below.

git clone https://github.com/electrum/tpch-dbgen.git

cd tpch-dbgen

make

./dbgen

ls -ltha | grep .tbl

/*

# 389B Oct 26 19:18 region.tbl

# 2.2K Oct 26 19:18 nation.tbl

# 113M Oct 26 19:18 partsupp.tbl

# 23M Oct 26 19:18 part.tbl

# 725M Oct 26 19:18 lineitem.tbl

# 164M Oct 26 19:18 orders.tbl

# 23M Oct 26 19:18 customer.tbl

# 1.3M Oct 26 19:18 supplier.tbl

*/

head -3 lineitem.tbl # to see the data, delimiter, etc

Note that this will generate 1 GB of data. You can generate datasets of sizes 1, 10, 100, etc. See usage docs here .

To practice your sql skills:

- Create data warehouse tables for analytical querying. With an

SLAof most reads taking less than 5 seconds. - Answer the 22 TPC-H questions and analyze their performance using the query planner. These questions can be found on page 29 through page 66 in the TPC-H standard specification .

Were you able to hit your SLA of 5 seconds for all the queries? If not, how can you? If yes, can you hit the same SLA with 10GB, 50GB, ..? Model an interesting data that you can find online.

Do the above exercise on different data warehouses to understand the similar patterns and nuanced differences.

4. Conclusion

Hope this article gives you a good idea of how you can improve your SQL skills. To recap, getting better at SQL involves the following.

Data modeling: requirement gathering, data exploration, modeling, and storage.Data transformation: Minimizing data movement within the warehouse, optimizing query plan, and managing data access.Data analytics: Understanding the data generation process, warehouse tables, business jargon, and asking clarifying questions.Deliberate practice: While we have a practice section using the TPC-H dataset, real-life data will be messy. You will have to figure out how data is generated, validate business assumptions, set up a process to capture more data, create slowly changing dimension tables, etc.

As always, if you have any questions or comments, please feel free to leave them in the comments section below.

5. Further reading

6. References

If you found this article helpful, share it with a friend or colleague using one of the socials below!

Hi there,

Great post and useful content! any further guidance and steps to build the warehouse with the data suggested for practice?

Thanks

Excellent article. Big Thanks

Thanks Joseph for another insightful article. Perhaps it would be worthwhile to also coin practicing using eg the Northwind Sample Database, AdventureWorks Sample Database, etc.

Great article. Thanks.