Data Engineering Project for Beginners - Batch edition

- 1. Introduction

- 2. Objective

- 3. Run Data Pipeline

- 4. Architecture

- 5. Code walkthrough

- 6. Design considerations

- 7. Next steps

- 8. Conclusion

- 9. Further reading

- 10. References

1. Introduction

An actual data engineering project usually involves multiple components. Setting up a data engineering project while conforming to best practices can be highly time-consuming. If you are

- A data analyst, student, scientist, or engineer looking to gain data engineering experience but cannot find a good starter project.
- Wanting to work on a data engineering project that simulates a real-life project.
- Looking for an end-to-end data engineering project.
- Looking for a good project to get data engineering experience for job interviews.

Then this tutorial is for you. In this tutorial, you will

- Set up Apache Airflow, Apache Spark, DuckDB, and Minio (OSS S3).
- Learn data pipeline best practices.
- Learn how to spot failure points in data pipelines and build systems resistant to failures.
- Learn how to design and build a data pipeline from business requirements.

If you are interested in a stream processing project, please check out Data Engineering Project for Beginners - Stream Edition.

2. Objective

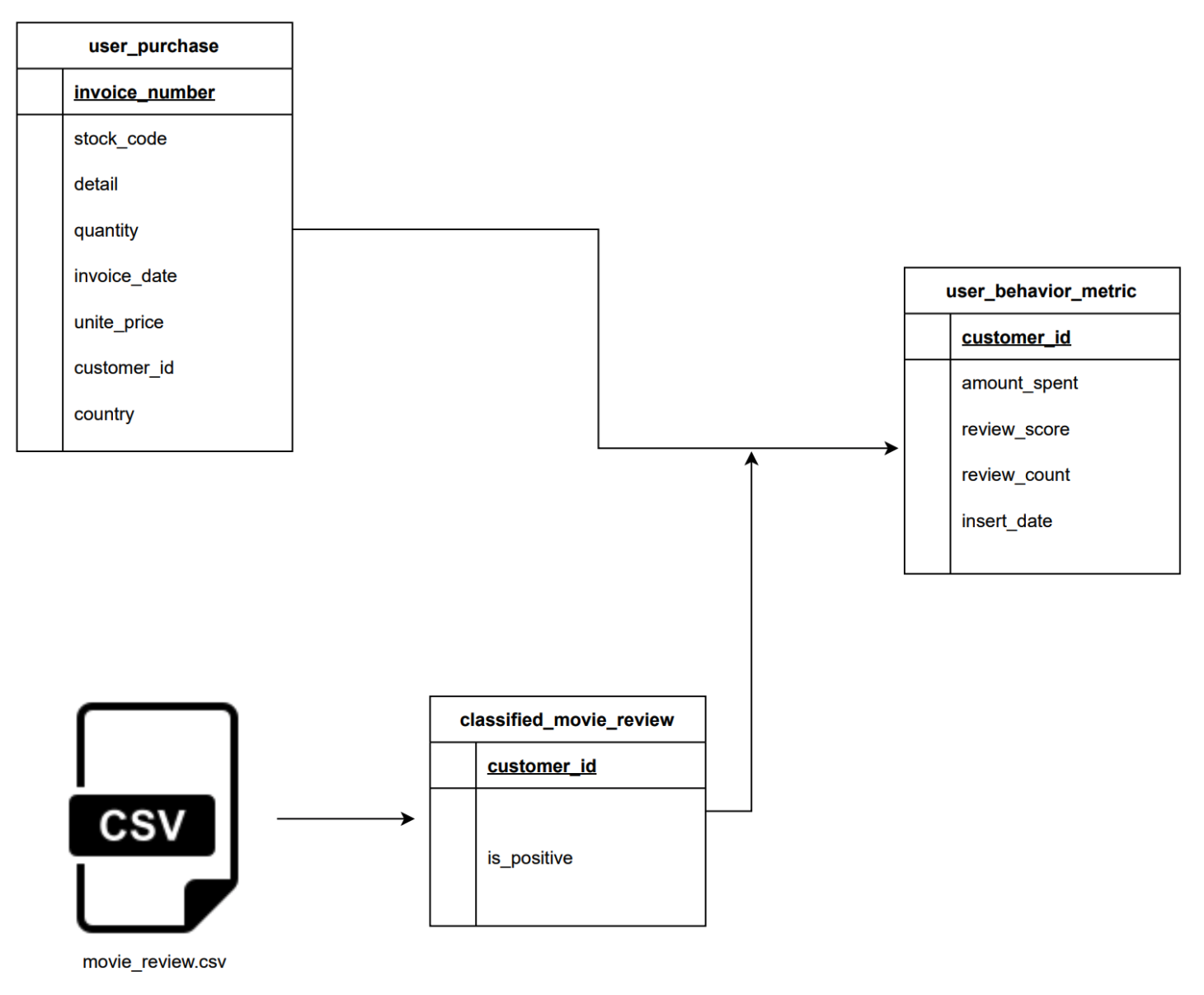

Suppose you work for a user behavior analytics company that collects user data and creates a user profile. We are tasked with building a data pipeline to populate the user_behavior_metric table. The user_behavior_metric table is an OLAP table used by analysts, dashboard software, etc. It is built from

- user_purchase: OLTP table with user purchase information.
- movie_review.csv: Data is sent every day by an external data vendor.

3. Run Data Pipeline

Code available at beginner_de_project repository.

3.1. Run on codespaces

You can run this data pipeline using GitHub codespaces. Follow the instructions below.

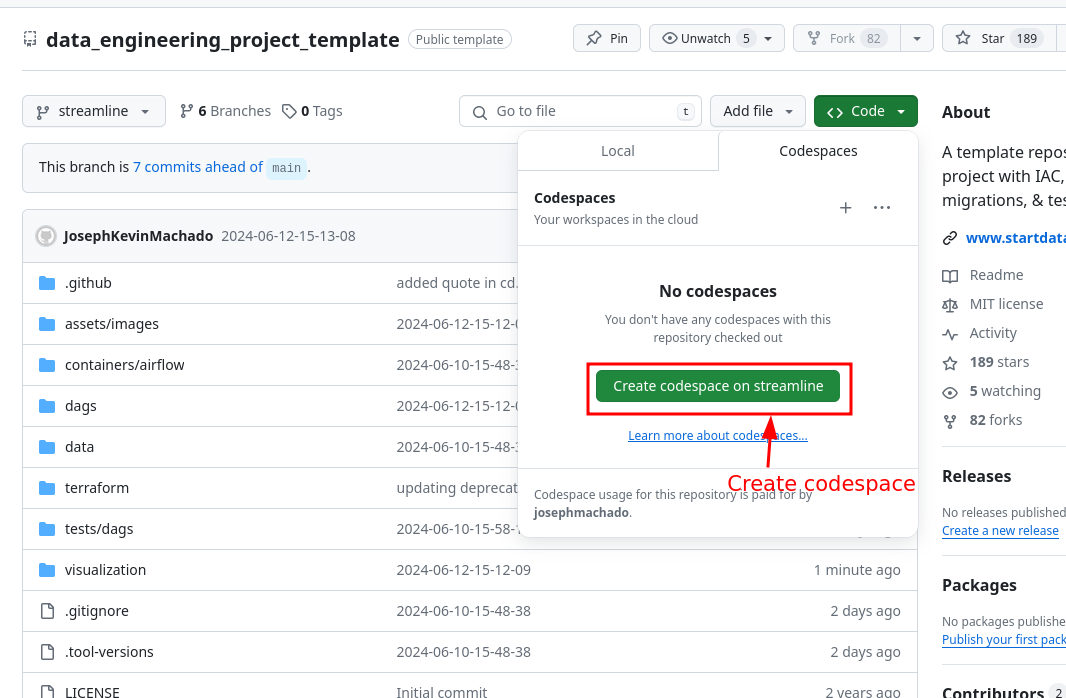

- Create codespaces by going to the beginner_de_project

repository, cloning it(or clicking the

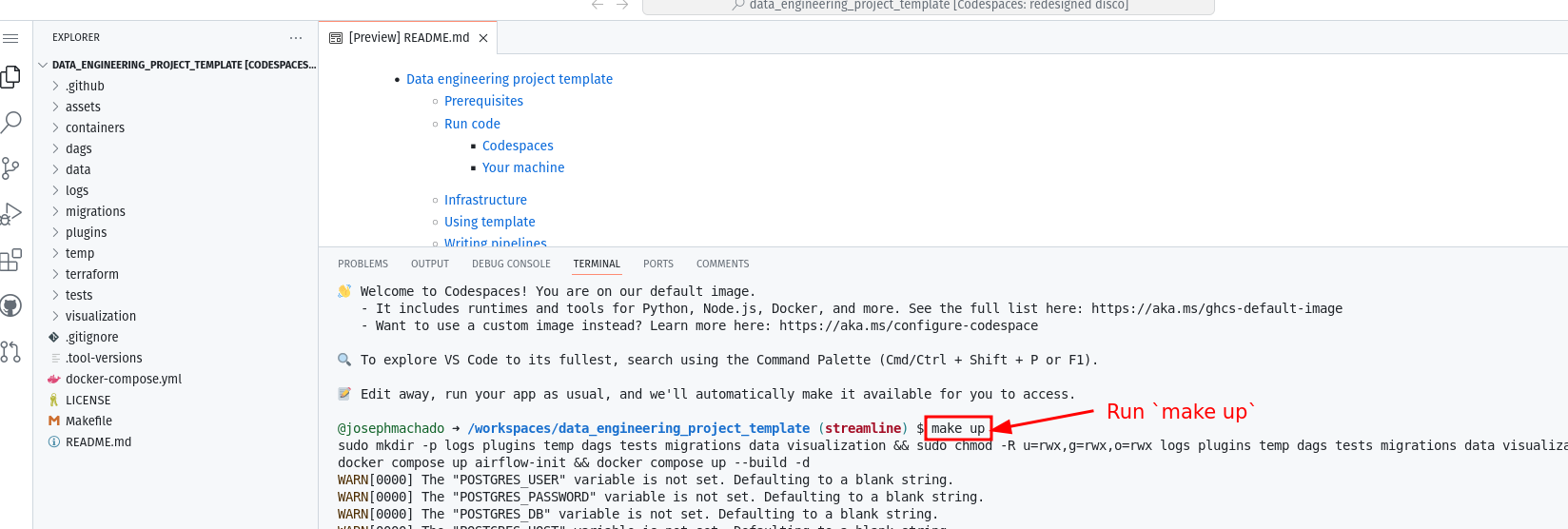

Use this templatebutton), and then clicking theCreate codespaces on mainbutton. - Wait for codespaces to start, then in the terminal type

make up. - Wait for

make upto complete, and then wait for 30s (for Airflow to start). - After 30 seconds, go to the

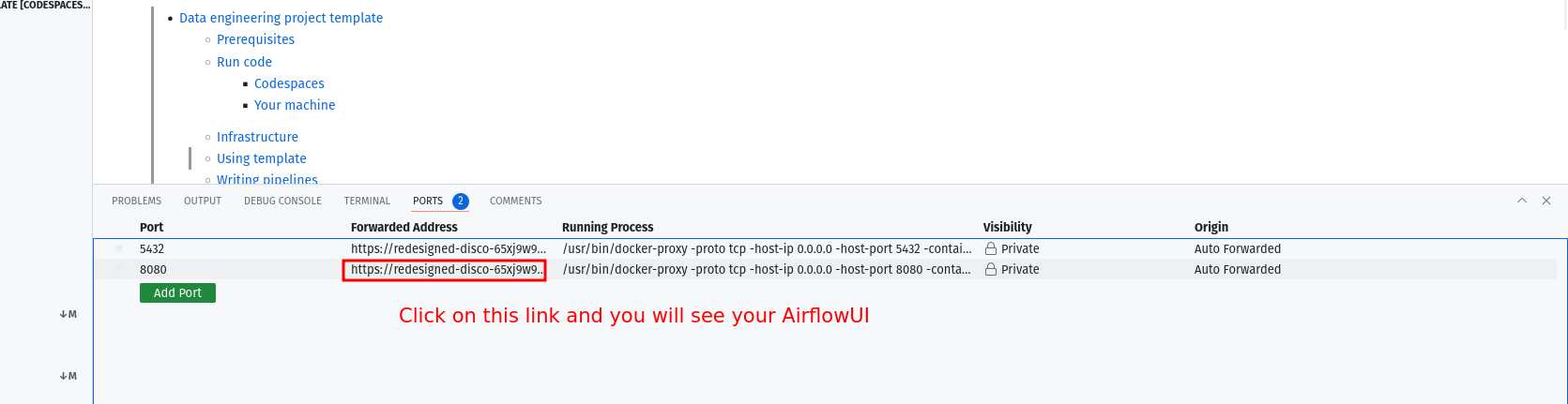

portstab and click on the link exposing port8080to access the Airflow UI (your username and password areairflow).

3.2. Run locally

To run locally, you need:

- git

- GitHub account

- Docker with at least 4GB of RAM and Docker Compose v1.27.0 or later

Clone the repo and run the following commands to start the data pipeline:

    git clone https://github.com/josephmachado/beginner_de_project.git
    cd beginner_de_project
    make up
    sleep 30 # Wait for Airflow to start
    make ci # run checks and tests

Go to http://localhost:8080 to see the Airflow UI. The username and password are both airflow.
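The fixed 30 second wait can also be expressed as a readiness poll. The sketch below is a minimal illustration, not part of the project: it assumes the Airflow webserver answers on a `/health` path (as Airflow 2.x does), and the names `airflow_is_healthy` and `wait_until_ready` are hypothetical helpers.

```python
import time
import urllib.error
import urllib.request
from typing import Callable

# Assumption: the webserver exposes /health on port 8080 (true for Airflow 2.x).
HEALTH_URL = "http://localhost:8080/health"


def airflow_is_healthy(url: str = HEALTH_URL) -> bool:
    """Return True if the health endpoint responds with HTTP 200."""
    try:
        with urllib.request.urlopen(url, timeout=5) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or an HTTP error: not ready yet.
        return False


def wait_until_ready(
    check: Callable[[], bool],
    timeout_s: float = 120.0,
    interval_s: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Call `check` every `interval_s` seconds until it passes or `timeout_s` is used up."""
    waited = 0.0
    while True:
        if check():
            return True
        if waited >= timeout_s:
            return False
        sleep(interval_s)
        waited += interval_s
```

Calling `wait_until_ready(airflow_is_healthy)` after `make up` returns as soon as the UI is reachable instead of always waiting the full 30 seconds. The `sleep` parameter is injectable only so the loop can be exercised without real delays.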

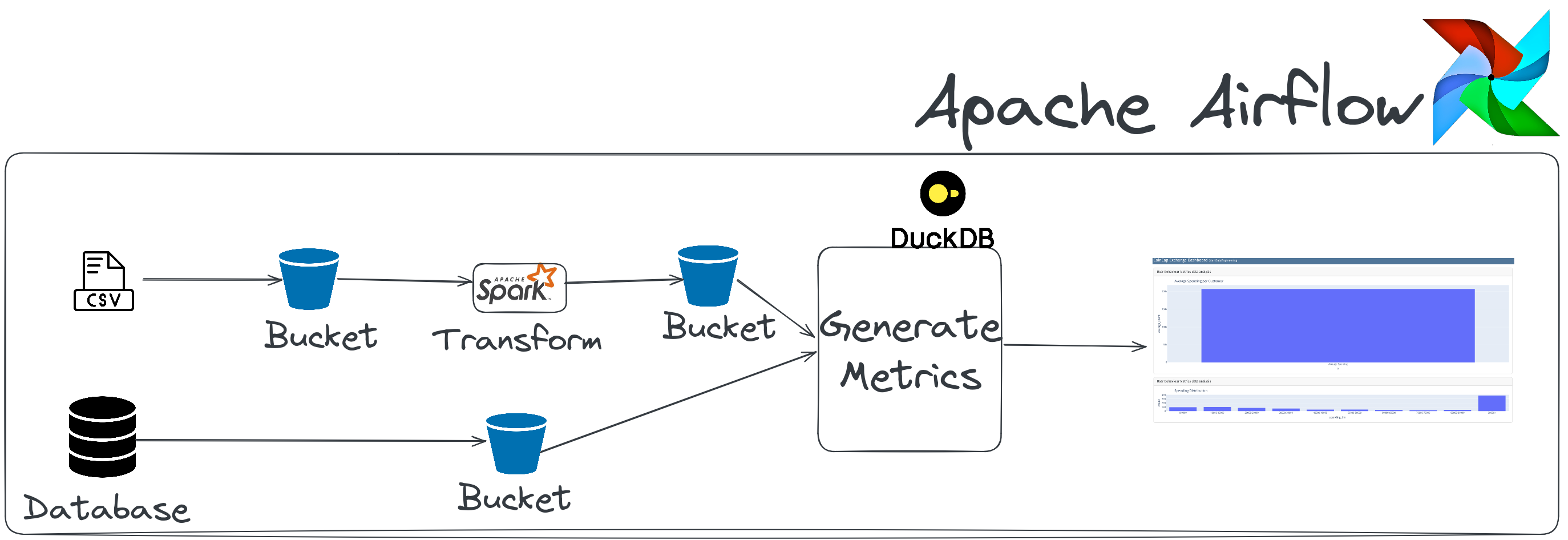

4. Architecture

This data engineering project includes the following:

1. Airflow: To schedule and orchestrate DAGs.
2. Postgres: This database stores Airflow’s details (which you can see via the Airflow UI) and has a schema to represent upstream databases.
3. DuckDB: To act as our warehouse.
4. Quarto with Plotly: To convert code in markdown format to HTML files that can be embedded in your app or served as is.
5. Apache Spark: This is used to process our data, specifically to run a classification algorithm.
6. minio: To provide an S3 compatible open source storage system.

For simplicity, services 1-5 of the above are installed and run in one container defined here.

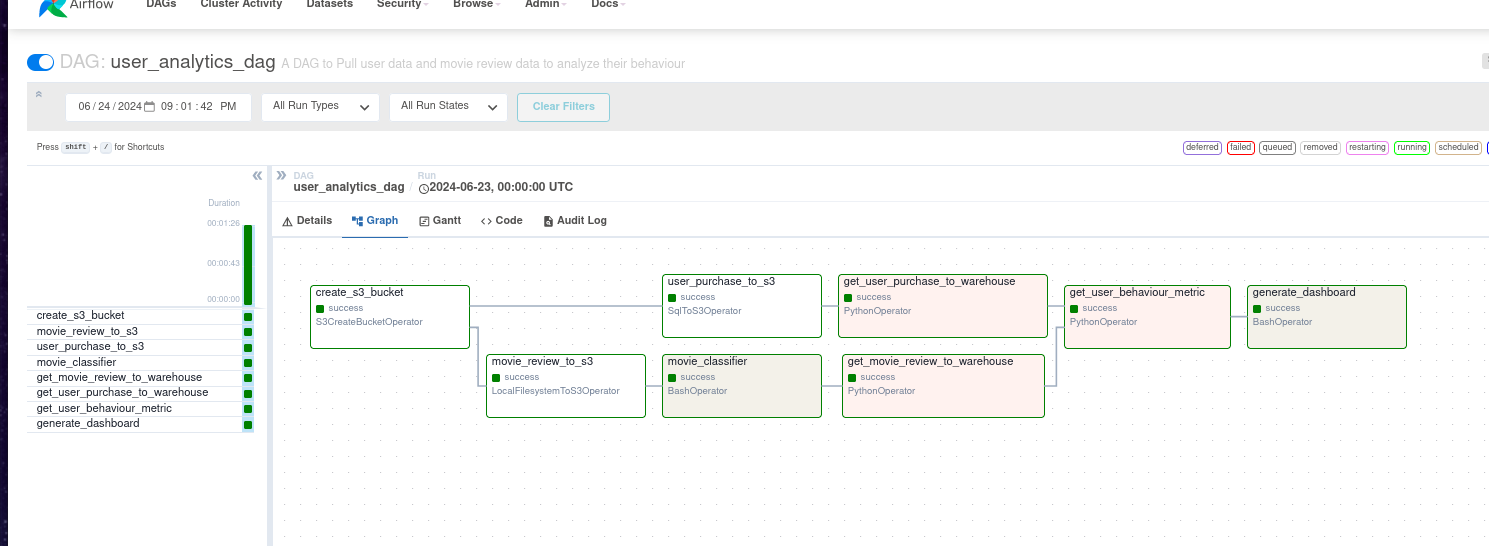

The user_analytics_dag DAG in the Airflow UI will look like the below image:

On completion, you can see the dashboard HTML rendered at dags/scripts/dashboard/dashboard.html.

Read **this post** for information on setting up CI/CD, IaC (Terraform), “make” commands, and automated testing.

5. Code walkthrough

The data for user_behavior_metric is generated from 2 primary datasets. We will examine how each is ingested, transformed, and used to get the data for the final table.

5.1 Extract user data and movie reviews

We extract data from two sources.

- User purchase information from a Postgres database. We use SqlToS3Operator for this extraction.
- Movie review data, containing information about how a user reviewed a movie, from a CSV file. We use LocalFilesystemToS3Operator for this extraction.

We use Airflow’s native operators to extract data as shown below (code link):

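    from airflow.providers.amazon.aws.transfers.local_to_s3 import LocalFilesystemToS3Operator
    from airflow.providers.amazon.aws.transfers.sql_to_s3 import SqlToS3Operator

    # user_analytics_bucket is defined elsewhere in the DAG file.

    # Upload the vendor-supplied movie review CSV from local disk to S3.
    movie_review_to_s3 = LocalFilesystemToS3Operator(
        task_id="movie_review_to_s3",
        filename="/opt/airflow/data/movie_review.csv",
        dest_key="raw/movie_review.csv",
        dest_bucket=user_analytics_bucket,
        replace=True,
    )

    # Export the user_purchase table from Postgres to S3.
    user_purchase_to_s3 = SqlToS3Operator(
        task_id="user_purchase_to_s3",
        sql_conn_id="postgres_default",
        query="select * from retail.user_purchase",
        s3_bucket=user_analytics_bucket,
        s3_key="raw/user_purchase/user_purchase.csv",
        replace=True,
    )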
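Conceptually, the SQL extraction runs a query and writes the result set out as a file. The sketch below mimics that flow with Python's built-in sqlite3 and an in-memory buffer. Both are stand-ins chosen for illustration (assumptions, not what SqlToS3Operator does internally), and the table contents and the `query_to_csv` helper are made up.

```python
import csv
import io
import sqlite3


def query_to_csv(conn: sqlite3.Connection, query: str) -> str:
    """Run `query` and return the result set as CSV text with a header row."""
    cursor = conn.execute(query)
    header = [col[0] for col in cursor.description]
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(header)
    writer.writerows(cursor.fetchall())
    return buffer.getvalue()


# Hypothetical stand-in for the upstream user_purchase table.
conn = sqlite3.connect(":memory:")
conn.execute("create table user_purchase (customer_id int, quantity int, unit_price real)")
conn.execute("insert into user_purchase values (1, 2, 5.0), (2, 1, 3.0)")

# In the pipeline, a payload like this is what lands under raw/user_purchase/.
csv_text = query_to_csv(conn, "select * from user_purchase")
```

Because the whole table is exported on every run and the destination is overwritten (`replace=True` in the operator call), re-running the extraction produces the same file rather than appending to it.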

5.2. Classify movie reviews and create user metrics

We use Spark ML to classify reviews as positive or negative, and DuckDB to generate metrics with SQL.

The code is shown below (code link):

    import duckdb
    from airflow.operators.bash import BashOperator
    from airflow.operators.python import PythonOperator

    # get_s3_folder is a helper defined elsewhere in the project.

    # Run the Spark ML job that classifies each review.
    movie_classifier = BashOperator(
        task_id="movie_classifier",
        bash_command="python /opt/airflow/dags/scripts/spark/random_text_classification.py",
    )

    # Fetch the classified reviews from the clean/movie_review folder.
    get_movie_review_to_warehouse = PythonOperator(
        task_id="get_movie_review_to_warehouse",
        python_callable=get_s3_folder,
        op_kwargs={"s3_bucket": "user-analytics", "s3_folder": "clean/movie_review"},
    )

    # Fetch the raw purchases from the raw/user_purchase folder.
    get_user_purchase_to_warehouse = PythonOperator(
        task_id="get_user_purchase_to_warehouse",
        python_callable=get_s3_folder,
        op_kwargs={"s3_bucket": "user-analytics", "s3_folder": "raw/user_purchase"},
    )

    def create_user_behaviour_metric():
        # Join purchases with classified reviews and aggregate per customer.
        q = """
        with up as (
            select
                *
            from
                '/opt/airflow/temp/s3folder/raw/user_purchase/user_purchase.csv'
        ),
        mr as (
            select
                *
            from
                '/opt/airflow/temp/s3folder/clean/movie_review/*.parquet'
        )
        select
            up.customer_id,
            sum(up.quantity * up.unit_price) as amount_spent,
            sum(
                case when mr.positive_review then 1 else 0 end
            ) as num_positive_reviews,
            count(mr.cid) as num_reviews
        from
            up
            join mr on up.customer_id = mr.cid
        group by
            up.customer_id
        """
        duckdb.sql(q).write_csv("/opt/airflow/data/behaviour_metrics.csv")

We use a naive Spark ML implementation (random_text_classification) to classify reviews.
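To see what the metric query computes, it can help to run the same aggregation on a few rows. The sketch below uses Python's built-in sqlite3 instead of DuckDB so that it runs without extra dependencies; the two tiny tables are invented sample data, and `positive_review` is stored as 0/1 rather than a boolean.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    -- Made-up sample rows standing in for the purchase and review files.
    create table up (customer_id int, quantity int, unit_price real);
    create table mr (cid int, positive_review int);
    insert into up values (1, 2, 5.0), (2, 1, 3.0);
    insert into mr values (1, 1), (2, 0);
    """
)

# Same shape as the pipeline query, with an ORDER BY added for stable output.
METRIC_QUERY = """
select
    up.customer_id,
    sum(up.quantity * up.unit_price) as amount_spent,
    sum(case when mr.positive_review then 1 else 0 end) as num_positive_reviews,
    count(mr.cid) as num_reviews
from up
join mr on up.customer_id = mr.cid
group by up.customer_id
order by up.customer_id
"""

rows = conn.execute(METRIC_QUERY).fetchall()
# rows == [(1, 10.0, 1, 1), (2, 3.0, 0, 1)]
```

One thing this toy setup makes easy to try: the join pairs every purchase with every review for the same customer, so adding a second review for customer 1 doubles that customer's `amount_spent`. Whether that matters depends on how the real data is shaped.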

5.3. Load data into the destination

We store the calculated user behavior metrics at /opt/airflow/data/behaviour_metrics.csv. The data stored as csv is used by downstream consumers, such as our dashboard generator.

5.4. See how the data is distributed

We create visualizations using a tool called quarto, which enables us to write Python code to generate HTML files that display the charts.

We use Airflow’s BashOperator to create our dashboard, as shown below:

    markdown_path = "/opt/airflow/dags/scripts/dashboard/"
    q_cmd = f"cd {markdown_path} && quarto render {markdown_path}/dashboard.qmd"
    gen_dashboard = BashOperator(task_id="generate_dashboard", bash_command=q_cmd)

You can modify the code to generate dashboards.

6. Design considerations

Read the following articles before answering these design considerations.

Now that you have the data pipeline running successfully, it’s time to review some design choices.

- Idempotent data pipeline

  Check that all the tasks are idempotent. If you re-run a partially run or a failed task, your output should not be any different than if the task had run successfully. Read this article to understand idempotency in detail. Hint: there is at least one non-idempotent task.

- Monitoring & Alerting

  The data pipeline can be monitored from the Airflow UI. Spark can be monitored via the Spark UI. We do not have any alerts for task failures, data quality issues, hanging tasks, etc. In real projects, there is usually a monitoring and alerting system. Some common systems used for monitoring and alerting are CloudWatch, Datadog, or New Relic.

- Quality control

  We do not check for data quality in this data pipeline. We can set up basic checks (count, standard deviation, etc.) before we load data into the final table. For advanced testing requirements, consider using a data quality framework, such as great_expectations. For a lightweight solution, take a look at this template that uses the cuallee library for data quality checks.

- Concurrent runs

  If you have to re-run the pipeline for the past three months, it would be ideal to have them run concurrently and not sequentially. We can set levels of concurrency as shown here. Even with an appropriate concurrency setting, our data pipeline has one task that is blocking. Figuring out the blocking task is left as an exercise for the reader.

- Changing DAG frequency

  What changes are necessary to run this DAG every hour? How will the table schema need to change to support this? Do you have to rename the DAG?

- Backfills

  Assume you have a logic change in the movie classification script. You want this change to be applied to the past three weeks. If you want to re-run the data pipeline for the past three weeks, will you re-run the entire DAG or only parts of it? Why would you choose one option over the other? You can use this command to run backfills.

- Data size

  Will the data pipeline run successfully if your data size increases by 10x, 100x, or 1000x? Why or why not? Please read how to scale data pipelines on how to do this.
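The basic count and standard deviation checks mentioned under quality control could look roughly like the sketch below. It is only an illustration under stated assumptions: the function name `check_metrics`, the thresholds, and the "no negative amounts" rule are hypothetical, and a real pipeline would tune or replace them.

```python
import csv
import io
import statistics


def check_metrics(csv_text: str, min_rows: int = 1, max_stdev: float = 1_000.0) -> list[str]:
    """Return a list of check failures; an empty list means every check passed."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    failures = []

    # Count check: an empty or tiny extract often signals an upstream failure.
    if len(rows) < min_rows:
        failures.append(f"expected at least {min_rows} rows, got {len(rows)}")
        return failures

    amounts = [float(row["amount_spent"]) for row in rows]

    # Range check (hypothetical rule): spend should not be negative.
    if any(amount < 0 for amount in amounts):
        failures.append("amount_spent contains negative values")

    # Spread check: a very large standard deviation hints at outliers or bad units.
    if len(amounts) > 1 and statistics.stdev(amounts) > max_stdev:
        failures.append("amount_spent standard deviation exceeds threshold")

    return failures
```

A task running before the final load could call `check_metrics` on the generated CSV and fail when the returned list is non-empty, so that bad data never reaches downstream consumers.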

7. Next steps

If you want to work more with this data pipeline, please consider contributing to the following.

- Unit tests, DAG run tests, and integration tests.

- Add CI/CD steps following the data engineering template

If you have other ideas, please feel free to open GitHub issues or pull requests.

8. Conclusion

This article gives you a good idea of how to design and build an end-to-end data pipeline. To recap, we saw:

- Infrastructure setup

- Data pipeline best practices

- Design considerations

- Next steps

Please leave any questions or comments in the comment section below.

9. Further reading

- Airflow pros and cons

- What is a data warehouse

- What and why staging?

- Beginner DE project - Stream edition

- How to submit spark EMR jobs from Airflow

- DE project to impress hiring manager

Hi,

An error occurred (IllegalLocationConstraintException) when calling the CreateBucket operation: The unspecified location constraint is incompatible for the region specific endpoint this request was sent to. You can fix this by editing the file setup_infra.sh at line 26: adding argument. I fix that using command like

    aws s3api create-bucket --bucket my-bucket-name --region us-west-2 --create-bucket-configuration LocationConstraint=us-west-2

3) in linux get lot of permission issues so give permission to

    chmod -R 777 /opt
    chmod -R 777 ./logs
    chmod -R 777 ./data
    chmod -R 777 ./temp

Thank you Ghanshyam kolse

docker compose https://docs.docker.com/compose/cli-command/ as part of docker v2.2&3. I'll open a Github issue. https://github.com/josephmachado/beginner_de_project/issues/11#issue-1034176386

Just want to say thank you. This is an awesome tutorial!

hello, the link in this line is not working: "For simplicity, services 1-5 of the above are installed and run in one container defined here". As a result, the project is unable to proceed. Please fix the link, thank you!

Fixed, Thank you :)

In the AWS components setup, I'm on the very last step for Redshift- testing my connection using pgcli.

I got an error “Operation timed out.” I deleted the instance of redshift, re-did my IAM settings and then created another instance of redshift, but still got the same error. Note that I modified public access to yes. There was a popup asking if i wanted to enter an elastic IP address, which I kept as the default value None.

Note that I had no issue with pgcli with regards to airflow, unlike some of the commentators above, just with redshift. And I had no issues with EMR either.

Here is the screenshot of the error, thanks in advance:

https://uploads.disquscdn.c...

Hi @disqus_60LFr6SW35:disqus this is sometimes caused if we don't set public access to yes when creating a Redshift instance. LMK if this helps, if not we can discuss further.

Hi Joseph- wanted to follow up on this. Tried my best to read through AWS documentation, but no luck on this issue.

hi @disqus_60LFr6SW35:disqus Its really tough to guess the reason without actually being able to debug using the UI. Can you make sure you are using the same region as your EMR cluster? You should see this in the top right part of your aws page.

I figured it would be tough, thanks for trying anyway! The EMR cluster is in the same region.

Ok, after hours and hours, I finally got redshift to work. Maybe this will help someone else as well.

Within VPC-> security groups -> default group...

I edited the inbound rule. The first rule in the screenshot was provided, the second rule i added, I changed the IP address to 'anywhere', same as first rule, but the IP address default changed to '::/0', it was originally '0.0.0.0/0'.

https://uploads.disquscdn.c...

Hi Joseph,

Thanks for your assistance! I did modify public access to yes, but I left the elastic IP address as 'None'. From what I can tell this should be ok.

Hi Joseph, I am following your tutorial post and the DAG was functioning properly until end of Stage 1. I am unable to complete Stage 1 as Airflow is displaying the following error:

"Broken DAG: [/usr/local/airflow/dags/user_behaviour.py] No module named 'botocore' "

Docker is also generating the same error:

    [2021-06-05 00:25:23,005] {{init.py:51}} INFO - Using executor LocalExecutor
    [2021-06-05 00:25:23,005] {{dagbag.py:403}} INFO - Filling up the DagBag from /usr/local/airflow/dags
    [2021-06-05 00:25:23,041] {{dagbag.py:246}} ERROR - Failed to import: /usr/local/airflow/dags/user_behaviour.py
    Traceback (most recent call last):
      File "/usr/local/lib/python3.7/site-packages/airflow/models/dagbag.py", line 243, in process_file
        m = imp.load_source(mod_name, filepath)
      File "/usr/local/lib/python3.7/imp.py", line 171, in load_source
        module = _load(spec)
      File "", line 696, in _load
      File "", line 677, in _load_unlocked
      File "", line 728, in exec_module
      File "", line 219, in _call_with_frames_removed
      File "/usr/local/airflow/dags/user_behaviour.py", line 8, in
        from airflow.hooks.S3_hook import S3Hook
      File "/usr/local/lib/python3.7/site-packages/airflow/hooks/S3_hook.py", line 19, in
        from botocore.exceptions import ClientError
    ModuleNotFoundError: No module named 'botocore'

I've tried pip install boto3 and pip install botocore in windows cmd and issue persists. I've also tried restarting the steps from the beginning and I still receive the same error.

My CeleryExecutor.yml file has the following configuration:

    worker:
        image: puckel/docker-airflow:1.10.9
        restart: always
        depends_on:
            - scheduler
        volumes:
            - ./dags:/usr/local/airflow/dags
            - ./requirements.txt:/requirements.txt
            # Uncomment to include custom plugins
            # - ./plugins:/usr/local/airflow/plugins
        environment:
            - FERNET_KEY=46BKJoQYlPPOexq0OhDZnIlNepKFf87WFwLbfzqDDho=
            - EXECUTOR=Celery
            # - POSTGRES_USER=airflow
            # - POSTGRES_PASSWORD=airflow
            # - POSTGRES_DB=airflow
            # - REDIS_PASSWORD=redispass
        command: worker

My LocalExecutor.yml file has the following configuration:

    volumes:
        - ./dags:/usr/local/airflow/dags
        # - ./plugins:/usr/local/airflow/plugins
        # for linux based systems
        - ./requirements.txt:/requirements.txt
        - ~/.aws:/usr/local/airflow/.aws/
        - ./temp:/temp
        - ./setup/raw_input_data:/data

My requirements.txt file includes the following:

    boto3===1.17.88
    botocore===1.20.88
    paramiko===2.4.2

I hope this information will help identify what is missing from proceeding from Stage 1 to Stage 2. Please advise.

Hi Vithuren, I have made some changes. One of them is introducing an easy infra setup script. Please try the new setup script and let me know if you are still having issues.

Thank you Joseph! That worked like a charm. Really appreciate your effort in helping everyone learn data engineering from scratch. Looking forward to learning more through your channel and posts as well as applying the context in the real world.

Hi Joseph,

Thank you for setting this up! I have a question on loading data into PG. In the copy command, I don't know why the OnlineRetail.csv is in data folder ('/data/retail/OnlineRetail.csv')? I thought it is stored locally on /setup/raw_input/retail...

(after 27 hours ...) Ahhh, I realize that in the yaml file, /data from the container is mounted to the local folder ./setup/raw_input_data. Is that the right understanding?

Thank you, Renee

You are right Renee. Since we load it into the postgres db, we mount it to that docker to do a COPY retail.user_purchase FROM ...

Hey Joseph! Amazing work, thanks for the content. I'm a beginner, with minimal AWS knowledge and haven't used Airflow before. I was going through the instructions and was not able figure out how and where to run the tasks mentioned from section 5.1. Could you please provide some context into this for newbies? Thanks again!

Hey, Thank you. So the sections 5.1 to 5.3 explain how we are generating the required table. At the end of 5.4 you will see

When you log into Airflow, switch on the DAG and it will run. Hope this helps. LMK if you have more questions.

When I am running 'python user_behavior.py' on the command line, it asks me to install all those packages within the script...I am having trouble finding the matching versions of all the dependencies. Is this the right way to go? Like installing all those dependencies locally?

I've installed airflow using 'pip install apache-airflow', but when I ran ''python3 user_behavior.py'' it still says 'dags/user_behavior.py:4 DeprecationWarning: This module is deprecated. Please use `airflow.operators.dummy'.

I changed it to 'from airflow.operators.dummy import DummyOperator', run it, but the Airflow UI throws this error 'Broken DAG: [/usr/local/airflow/dags/user_behavior.py] No module named 'airflow.operators.dummy'. I have no idea why this is happening...

Is it due to the airflow docker image version? That's the only thing I can think of ...

I tried 'docker exec -ti b4a83183a7fc /bin/bash' then looked at the dags folder that contains 'user_behavior.py' file. Everything is there...

Really need some help from here! Thank you Joseph!

(I ended up using a new docker airflow image and the dag works now) But still would like to solve the myth.

Hi Renee, If you want to run the script, you have to bash into the docker container running airflow. You have the right command with the docker exec...., once you are inside the container try running the user_behavior.py script it should not fail. We are using docker to avoid having to install airflow locally. let me know if you have more questions.

Thanks for the great post ! Could you share your thoughts on the questions you raised in Design Considerations section? Thank you again!

Hi, Thank you for the kind words. I will release a post with details on this soon.

Hi, thanks for this post. Can you emphasize on the design considerations? Would love to see your ideas about the questions you raised in the section. Also, about the #4 - concurrent runs, is it the pulling of the data that is not idempotent, as it fetches different data every time we run ? Your answers will certainly help understand the considerations much better. Thank you again!

Hi Joseph! I'm running into an issue while creating EMR cluster. I've tried 3 different times with no success :(

So I'd like to know how much time did you take to create a cluster and see the 'waiting' status, and same question when terminating which also takes like forever... Any idea why this is happening? (Checked region, EC2 instances, your configuration above and all looks good) -- Highly appreciate your help on this!

Hi Liliana, The EMR clusters usually take 5-10 min (in us-east-1 region) to start and once the steps are complete it will enter terminating state (i think for about 5min) before stopping.


I am having a problem when running the command:

pgcli -h localhost -p 5432 -U airflow -d airflow

I used the password airflow

however, the terminal keeps telling me

password authentication failed for user "airflow"

Any ideas what is happening?

hi @disqus_0O3FoXQB4x:disqus could you try connecting without the -d flag.

pgcli -h localhost -p 5432 -U airflow

Hi Joseph,

Thanks for making this project. I am in the final stage of setting up Redshift. I have created the cluster and am testing the connection using pgcli -h <your-redshift-host> -U <your-user-name> -p 5439 -d <yourredshift-database>. However, my cmd prompt keeps returning the following errors:

could not send data to server: Socket is not connected (0x00002749/10057)

could not send SSL negotiation packet: Socket is not connected (0x00002749/10057)

Maybe I'm copying in the wrong info for the host name? I'm using host = redshift-cluster-1.<somecode>.<location>.redshift.amazonaws.com

https://uploads.disquscdn.c... Hi, I had setup the docker container and created the retail schema and created the table. I am not able to copy the data though sir. It says could not open the file "[File location]" for reading": No such file or directory. Could you help me here. Thanks. I have attached a screenshot of my error.

I am not sure if the Docker container is not able to locate the location of my CSV file or should I be creating a volume mount seperately so that Docker can access the location.

I have solved it. I realized my mistake

Hi! First of all, thanks for making this -- it's exactly what I needed to get started with Data Engineering.

I am having an issue connecting to the Postgres database. When I attempt to run the command:

pgcli -h localhost -p 5432 -U airflow

I am receiving the following error: https://uploads.disquscdn.c...

I tried downloading a postgres gui and was receiving a similar error. Any insight into why this is?

Please note that I am new to using the command line interface, however I did download pgcli from https://www.pgcli.com/.

After debugging, we hypothesize this happens when docker is not setup properly on windows, ref: https://docs.docker.com/too...

Hi Joseph I am getting an issue. I believe this is related to communication with Redshift.

    ~/de/projects/beginner_de_project master ± ./setup_infra.sh sde-ashish-test-1 ap-south-1
    Waiting for Redshift cluster sde-batch-de-project-test-1 to start, sleeping for 60s before next check
    Running setup script on redshift
    psql: error: could not connect to server: Connection timed out
    Is the server running on host "sde-batch-de-project-test-1.clausyiwjagm.ap-south-1.redshift.amazonaws.com" (3.111.4.7) and accepting TCP/IP connections on port 5439?

Hello, Can you try logging in to your AWS account and checking if the Redshift cluster had been setup via the UI ?

I have faced same problem above. When I check my Redshift cluster, I can see my project.

I have solved this problem, follow the steps:

1- Sign-in into AWS Console

2- Search in AWS console for 'Security groups' - You will get 'Security groups (ECS feature)'

3- Select Inbound rules tab < select edit inbound rules (Add Rule: Type=Redshift, Source=MyIP. (auto-fill), Save it.)

4- Also In Redshift- Open your Cluster < Actions < Modify publicly accessible setting < Enable < Save Changes

Thank you dude you just saved my life I've been tryna figure this out all day today and it finally worked!

Hi Joseph,

I have been running airflow for each new task, i.e. I ran it first with just the DummyOperator 'end of pipeline'.

Then I re-started airflow, and ran it with pg_unload and end_of_pipeline.

Then, I restarted airflow again and ran it with ... you get the idea.

I don't want to restart airflow each time. When I update user_behavior.py ,the code in airflow is updated, but the new tasks are shown as 'skipped', and even when I refresh airflow, and turn off/turn on the DAG, it won't run the new tasks. Any workarounds? Thank you!

@disqus_60LFr6SW35:disqus the tasks are shown as skipped because the DAG is already complete for that run. I recommend clicking on the first task in the DAG and pressing clear, you will be prompted and asked if you want to clear all dependent tasks, press ok. This should allow you to rerun your DAG. LMK if this helps.

Hi, Joseph, I just want to say thank you so much for creating this project/template, I have learned quite a lot!

I have encountered a few issues when setting up this project, I wish to share it with you and who has just started the project.

AWS has updated quite a few security settings, before you run the IAC, make sure you modify/add followings settings

I'm getting the following error : psql: error: connection to server at "sde-batch-de-project.cru2arsugv9j.us-east-1.redshift.amazonaws.com" (34.236.124.58), port 5439 failed: Operation timed out Is the server running on that host and accepting TCP/IP connections?

My Redshift and S3 are showing in my AWS account

Can you check 2 things

psql -f ./redshift_setup.sql postgres://$REDSHIFT_USER:$REDSHIFT_PASSWORD@$REDSHIFT_HOST:$REDSHIFT_PORT/dev

[deleted]

Hi Rim, I updated the project with new setup script, that should make this seamless. If you are still facing issues could you please attach a screen shot or the exact error or open a github issue here https://github.com/josephmachado/beginner_de_project/issues I'd be happy to take a look.

Hi , I am new in AWS and I am getting following errors. It seems it has to do with permissions.

    ngoma@ngomix:~/beginner_de_project$ sudo ./tear_down_infra.sh project1
    Deleting bucket project1 and its contents
    fatal error: An error occurred (AccessDenied) when calling the ListObjectsV2 operation: Access Denied
    An error occurred (AccessDenied) when calling the DeleteBucket operation: Access Denied
    ngoma@ngomix:~/beginner_de_project$ sudo ./setup_infra.sh project1
    Creating bucket project1
    An error occurred (BucketAlreadyExists) when calling the CreateBucket operation: The requested bucket name is not available. The bucket namespace is shared by all users of the system. Please select a different name and try again.
    Clean up stale local data
    Download data
    download: s3://start-data-engg/data.zip to ./data.zip
    Archive: data.zip
    creating: data/
    .......

Hi, The AWS S3 bucket names need to be unique So that might have to do with the issue with creating Bucket.

Terraform is running fine, but connections and variables are not reflected in Airflow UI ? are they not getting defined at the ec2 launch within the user data script?

Ohh, man, thanks for this great job! <3

Hi Joseph, all the instances appear in AWS but I'm getting the following error in airflow. Any idea of what may be causing this?

    Broken DAG: [/opt/airflow/dags/user_behaviour.py] Traceback (most recent call last):
      File "/opt/airflow/dags/user_behaviour.py", line 17, in
        EMR_ID = Variable.get("EMR_ID")
      File "/home/airflow/.local/lib/python3.6/site-packages/airflow/models/variable.py", line 140, in get
        raise KeyError(f'Variable {key} does not exist')
    KeyError: 'Variable EMR_ID does not exist'

Hello, This happens because the EMR_ID variable was not set properly. Can you paste a screen shot of the logs you get when you run setup_infra.sh ? They probably have the errors logged out.

Hey Joseph, Hope you're well and safe

When you're planning to post streaming data processing pipeline

hi @disqus_bltTwti6Ul:disqus I am working on it. Coming soon

hi @disqus_bltTwti6Ul:disqus streaming version is up https://www.startdataengine...

Hi,

I was having issues with connecting to the database however I realized I could post my query here. In particular, running the following command:

pgcli -h localhost -p 5432 -U airflow -d airflow

results in the following error:

FATAL: role "airflow" does not exist

I have installed pgcli, Postgresql as well as apache-airflow and being quite new to this I am unsure as to how I should proceed.

Thank you

Hi @disqus_tU1AeKxnhK:disqus I recommend checking the following

- `docker-compose -f docker-compose-LocalExecutor.yml up -d` in the terminal, in the directory where you cloned the repo?
- `docker ps` on your terminal to see if you have the airflow and postgres containers running?

Let me know what you get from the above, and I can help you debug this issue.

Hi Joseph,

Thanks for you reply. I did run the docker-compose command and after running docker ps I get the following:

https://uploads.disquscdn.c...

yes absolutely, I see your containers are running correctly.

- `lsof -n -i:5432 | grep LISTEN` command, If yes you will need to stop that using `pg-stop`
- `docker exec -ti b4a83183a7fc /bin/bash` this will take you inside the container. Within the container you can try `psql -U airflow`.

I tried running the command on the second step and get the following error:

https://uploads.disquscdn.c...

, did you get a chance to try options 1 and 3 from my latest comment ? I am trying to determine if it is an issue with docker ports not being open(have seen this issue with windows home users)

When I tried running the commands from option 1, i received errors saying the command is not found. I also don't believe postgres was running previously. I'm also not too sure what you mean by option 3, but I tried typing localhost:8080 into my browser and I do see an admin page from airflow if that is what you meant?

I see, yes that is what I meant by option 3, The admin page you see on the UI actually reads data from the airflow database, so it seems like that is working. in your pgcli command are you using airflow for the password on the terminal prompt?

I ran "pgcli -h localhost -p 5432 -U airflow -d airflow", but there was no prompt to enter a password as I got the error message "FATAL: role "airflow" does not exist"

I see, can you try "docker-compose -f docker-compose-LocalExecutor.yml down" and then "docker-compose -f docker-compose-LocalExecutor.yml up -d" again? I am not really sure why this is happening.

I tried that but still getting the same error. Thanks for you help anyways

I've run the command from the first from my machine ( the host ), but then I figured out that I need to connect to the docker container and execute the command inside it coz all installations are inside the container. if you're in the same situation as me try to connect to the docker container and then execute the command :

Hi Joseph,

On the very last job for stage 2, 'clean_movie_review_data', I get an error in airflow saying 'EMR job failed'.

Any advice on how to proceed? Thank you!

Full airflow log:

https://www.evernote.com/l/...

hi @disqus_60LFr6SW35:disqus this is an issue with the EMR step not running properly. Can you paste the templated Steps here? My guess is that it's missing a variable. or the data/script was not copied over to S3.

The steps are in clean_movie_review.json file correct? I git cloned from this repo https://github.com/josephma...

and git status shows my file has not changed since.

S3 shows the movie_review.csv file and random_text_classifer.py, so I think all the files were copied over correctly.

I see, I recommend 2 things(in the following order)

Thank you, these instructions helped me resolve the issue.

Great @disqus_60LFr6SW35:disqus

Hi Joseph,

Thank you very much for this post! I am following along the stage 1. I am confused with the mechanism of S3. How does the dag knows to send the data to my own S3 bucket? I don't quite understand the key used in the script? I thought it should be some keys that is related to my own S3 bucket.

Thank you!

@reneesliu:disqus you are welcome.

I am confused with the mechanism of S3. How does the dag knows to send the data to my own S3 bucket? => If you look at the code https://github.com/josephma... you will need to replace this with your S3 bucket name. Airflow uses boto3, an AWS library, to connect to your AWS account. When you configure your AWS CLI locally, it creates a file called config in the .aws folder of your home directory. In the docker compose file you can see https://github.com/josephma... that the local .aws folder contents are copied over into the docker container's aws folder. Boto3 within Airflow (see s3_hook here http://airflow.apache.org/d... ) will look at this folder to get permission to your AWS account.

I don't quite understand the key used in the script? => You can think of the key as the folder structure within your S3 bucket. For example, if your S3 bucket is named 'my-s3-bkt' and the key is movie_review/load/movie.csv, then it refers to the file at s3://my-s3-bkt/movie_review/load/movie.csv
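To make the bucket/key relationship concrete, here is a minimal Python sketch. The helper name s3_uri is made up for illustration and is not part of the project; the bucket and key are the ones from the example above.

```python
def s3_uri(bucket: str, key: str) -> str:
    """Join a bucket name and an object key into an s3:// URI."""
    # A key is a plain string; the "/" characters only look like folders.
    return f"s3://{bucket}/{key.lstrip('/')}"


print(s3_uri("my-s3-bkt", "movie_review/load/movie.csv"))
```

The bucket identifies where the data lives in your account, and the key identifies the object inside that bucket.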

hope this helps. LMK if you have any more questions.

Hi Joseph,

I see that in the latest version you don't copy the .aws folder contents into the docker container's aws folder. How does airflow know to communicate with aws then?

Hi, So we use Airflow environment variables to enable Airflow to communicate with AWS services we use.

We follow Airflow's naming convention, which enables Airflow to read these environment variables as connections or variables.
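As a rough illustration of that naming convention, the sketch below builds the environment variable names Airflow looks for: AIRFLOW_CONN_ plus the upper-cased connection ID, and AIRFLOW_VAR_ plus the upper-cased variable key. The helper names and the example values are assumptions for illustration only; the real values live in the project's docker compose and terraform files.

```python
import os


def conn_env_name(conn_id: str) -> str:
    """Name of the environment variable Airflow reads for a connection ID."""
    return f"AIRFLOW_CONN_{conn_id.upper()}"


def var_env_name(key: str) -> str:
    """Name of the environment variable Airflow reads for a Variable key."""
    return f"AIRFLOW_VAR_{key.upper()}"


# Hypothetical values, for illustration only.
os.environ[conn_env_name("aws_default")] = "aws://?region_name=us-east-1"
os.environ[var_env_name("BUCKET")] = "my-s3-bkt"

print(conn_env_name("aws_default"))  # AIRFLOW_CONN_AWS_DEFAULT
print(var_env_name("BUCKET"))        # AIRFLOW_VAR_BUCKET
```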

Hope this helps. LMK if you have any questions

Hi Joseph,

Thank you for the prompt response. I'm still a bit confused as to where the credentials like aws_access_key_id, aws_secret_access_key, and region_name are stored in airflow and when they were initialized in the tutorial.

Hi, We don't need to store them since all our Airflow operators use connection IDs (from env variables) to connect to AWS services.

How are we able to use S3Hook() if we don't have the aws_default credentials?

Hi, We set the AWS CONN ID as an environment variable https://github.com/josephmachado/beginner_de_project/blob/09bfcaf87834b499393592e120790db782baa38c/docker-compose.yaml#L54 and Airflow will use this when a default credential is unavailable. Please LMK if you have more questions.

Thank you very much for your explanation.

https://uploads.disquscdn.c...

I'm getting the error below while copying CSV data into the table. Please help.

hi @disqus_bltTwti6Ul:disqus it looks like you are running the command within your airflow docker container. Exit from the airflow docker container using the exit command and then run

pgcli -h localhost -p 5432 -U airflow

Let me know if that works for you.

https://uploads.disquscdn.c...

I see that pgcli prompts for a password when connecting. I don't have any idea which password is required for this.

@disqus_bltTwti6Ul:disqus the password is airflow (I know, not very secure, but it's a toy example). This information is in the stage 1 section of this blog post.

Joseph if you dont mind could you please connect to this meeting..meet.google.com/kzz-tdpc-xti

@disqus_bltTwti6Ul:disqus Unfortunately I can't, Please post any questions here I will try to help.

Hi Joseph,

I am creating the AWS components, but I am unable to create the EMR cluster; it throws an error and terminates. The error is:

Terminated with errors. The requested number of spot instances exceeds your limit.

Can you please help resolve this.?

Thanks!

Hi @disqus_TnfcZmjZc2:disqus, this happens because your AWS account is not allowed to create the number of EC2 instances required for the EMR cluster.

You can go to AWS services > EC2 > Limits. In the search bar type in On-Demand and you will see the EC2 instance type limits of your account. After this you can click on AWS Service Quotas (or type it in the services search bar), where you can request an increase.

Hope this helps. LMK if you have any questions.

Hi Joseph,

Thank you for your reply. I have increased my spot instances limit but I am still unable to create clusters. This time the error is "Terminated with errors Provisioning for resource group timed out".

Can you please help me with this?

Thanks,

Nidhi

Hey Joseph,

I'm getting the following error when running `make infra-up`:

Error: could not start transaction: dial tcp xx.xxx.xxx.xxx:5439: connect: operation timed out

with redshift_schema.external_from_glue_data_catalog,

on main.tf line 170, in resource "redshift_schema" "external_from_glue_data_catalog":

170: resource "redshift_schema" "external_from_glue_data_catalog" {

I've confirmed my Redshift cluster is publicly accessible and that my security group is accepting inbound / outbound traffic.

Hi, that's weird. Can you check the region for the Redshift cluster? Is it us-east-1? If not, can you set your region as the default here (https://github.com/josephmachado/beginner_de_project/blob/master/terraform/variable.tf) and try again?

Yeah, its set to us-east-1, availability zone us-east-1e.

Not sure what the issue may be. I am using a different profile from default (which is already in use) that I changed in main.tf, and I had to create an EMR_DefaultRole to get through the following error:

EMR service role arn:aws:iam::xxxxxxxx:role/EMR_DefaultRole is invalid.

Otherwise I've followed your instructions as you laid out.

Hmm, I see. My guess is that the cluster is created using one profile and the schema creation is attempted with another profile, which causes the issue. Would it be possible to try the entire infra with your default profile? If that works, we can infer that the non-default profile is the one causing the issue.

I faced a similar issue, and finally solved it by modifying the security group assigned to my redshift cluster using this approach : https://stackoverflow.com/questions/64782710/redshift-odbc-driver-test-fails-is-the-server-running-on-host-and-accepting-tcp#:~:text=Alternatively%2C%20you%20can%20modify%20the%20source%20of%20the%20inbound%20rule%20in%20the%20SG%20to%20be%20your%20home/work%20ip%20address/range%2C

Hi,

I ran into the same issue when trying to run `make infra-up`. Did you ever figure out how to resolve the issue? p.s.: I also verified that my Redshift cluster is publicly accessible and that my sec. group is accepting inbound / outbound traffic.

Hi, Could you please open an issue here https://github.com/josephmachado/beginner_de_project/issues with the requested details? I can take a look at this.

Hi Joseph,

My mistake - there was something wrong on my end and I was able to fix the issue.

Is there a way I could set this pipeline to use less resources? $0.46 an hour could be intimidating as something intended for practice

Hi Leonel, You can try changing the Redshift and EMR instance types (Redshift node type, EMR node type) to something cheaper. When I set this up, these were the minimal ones that worked for me.

Alternatively you can run them locally, by setting up EMR docker as shown here, and use a postgres docker for redshift.

Hope this gives you some ideas. LMK if you have more questions.

Hi, first of all, thanks for the content. I've been reading your blog every day and it has been sooo helpful. I am trying to understand one thing about this project. Where is pyspark (and consequently java and spark) being installed in docker, since there isn't a dockerfile? One other thing: could I have one container for airflow and another container for the python environment?

Hi,

Thank you for the kind words & glad that the blog has been helpful. When running locally (using docker compose) there is no spark.

When we set up the infrastructure we create an AWS EMR cluster (which is managed Spark and HDFS) where our Spark code runs.

Yes you can have separate containers for Airflow and python, but you would still need Airflow installed to run the DAG, so it would be better to just use the Airflow container. hope this helps. LMK if you have more questions.

How do I get the movie review file and user purchase data to practice?

Hi Cương Nguyễn Ngọc, The movie review file and user purchase data are on a public S3. They can be downloaded as shown below

wget https://start-data-engg.s3.amazonaws.com/data.zip && sudo unzip data.zip

https://github.com/josephmachado/beginner_de_project/blob/a3a4e5b178164aa06bf6349a77cf73cb86e7f777/setup_infra.sh#L107-L109

thanks for your help ^^

Hi,

In Airflow, under Admin -> Connections, are we supposed to already have the 3 list connections or are those supposed to be filled in manually?

Thanks for this awesome guide! When I run 'make ci', I get the following error:

docker exec -ti webserver isort .

OCI runtime exec failed: exec failed: unable to start container process: exec: "isort": executable file not found in $PATH: unknown

make: *** [isort] Error 126

Would you have any suggestions on how to troubleshoot this?

Can you do `make up` first and try it again?

I had the same issue. It was just because I didn't have isort installed.

Hi,

Has anyone also run into this issue when running make infra-up? If so, could you please share what you did to resolve the issue.

Error: could not start transaction: dial tcp xx.xxx.xxx.xx:5439: connect: operation timed out

with redshift_schema.external_from_glue_data_catalog,

on main.tf line 170, in resource "redshift_schema" "external_from_glue_data_catalog":

170: resource "redshift_schema" "external_from_glue_data_catalog" {

I got this issue too, and I solved it by editing the Source of the Inbound Rules of the EC2 default Security Group (SG) to be my IP address. Just go to the EC2 Dashboard via the AWS Console; the list of SGs is under the Network & Security tab. There are multiple SGs, and you have to edit only the 'default' EC2 SG.
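For anyone who prefers to script this instead of clicking through the console, here is a hedged sketch of what such an inbound rule looks like as data. It only builds the rule; the helper name, the example IP (from the documentation range) and the choice of port 5439 (taken from the timeout error above) are assumptions, and the security group ID is deliberately left out.

```python
import ipaddress


def redshift_ingress_rule(my_ip: str, port: int = 5439) -> dict:
    """Build one IpPermissions entry allowing a single IP on the given port."""
    # Validate the address and express it as a /32 (single host) CIDR block.
    cidr = f"{ipaddress.IPv4Address(my_ip)}/32"
    return {
        "IpProtocol": "tcp",
        "FromPort": port,
        "ToPort": port,
        "IpRanges": [{"CidrIp": cidr, "Description": "my workstation"}],
    }


rule = redshift_ingress_rule("203.0.113.10")  # hypothetical IP address
print(rule)
# With boto3 installed and AWS credentials configured, a rule like this could
# be passed to the EC2 client's authorize_security_group_ingress call as
# IpPermissions=[rule], together with the GroupId of your default SG.
```

Restricting the source to a single /32 address keeps the cluster reachable from your machine without opening the port to everyone.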

Awesome, thank you! Everything is working perfectly now.

Thanks for providing this information! Was wondering if you could provide some more context on how you set up your docker instances. I know these are already set up in your repo, but I am hoping to understand better for future projects. Reading through the airflow docker setup reference detailed how to get the docker-compose yaml file with:

curl -LfO 'https://airflow.apache.org/docs/apache-airflow/2.3.3/docker-compose.yaml'

This seems to be different from your docker-compose.yaml file though. Did you use a custom docker image? Additionally, did you write your own Makefile, or is that a standard Makefile used in projects? Do you have any documentation on it? Thanks again!

Hi @Dave, So that curl command copies Airflow's docker compose file, which uses CeleryExecutor, and this comes with a lot of extra services like celery, worker containers, etc. For our local dev I chose to use `LocalExecutor` (see this line https://github.com/josephmachado/beginner_de_project/blob/a3a4e5b178164aa06bf6349a77cf73cb86e7f777/docker-compose.yaml#L45) since that is the simplest to set up locally.

In production people generally use the executor that fits their DAG workloads. Hope this helps. LMK if you have any questions.

For different types of executors you can check this out https://airflow.apache.org/docs/apache-airflow/stable/executor/index.html

Hi,

Does anyone else not see the `list connection` and `list variable` entries in Airflow? How is Airflow able to communicate with AWS services without these? Can someone please point out to me where I can find these entries + where they are stored?

Hi, Apologies that this is not super clear. The connection and variable are now set as environment variables via the docker setup. See here: we follow the Airflow env variable naming conventions to get this to work.

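Once set up, Airflow will use the env variables. When looking up a connection or variable, Airflow checks the environment variables before the metadata database that backs the Connections and Variables pages, and entries defined only as environment variables do not show up on those pages. Hope this helps. LMK if you have any questions.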

Is params={"user_purchase": "/temp/user_purchase.csv"} in the PostgresOperator used to save the CSV file extracted from the database? And where is that file saved? Is that CSV on the worker node of Airflow? Thank you for your help.

Hi, Yes, that param is substituted at runtime in this query https://github.com/josephmachado/beginner_de_project/blob/a3a4e5b178164aa06bf6349a77cf73cb86e7f777/dags/scripts/sql/unload_user_purchase.sql#L1-L11. It will unload the data into the `/temp/user_purchase.csv` location in the postgres container. The postgres container has its temp directory synced with the local temp directory (see this: https://github.com/josephmachado/beginner_de_project/blob/a3a4e5b178164aa06bf6349a77cf73cb86e7f777/docker-compose.yaml#L77) which in turn is synced with the airflow scheduler (https://github.com/josephmachado/beginner_de_project/blob/a3a4e5b178164aa06bf6349a77cf73cb86e7f777/docker-compose.yaml#L61).

The csv is not in the airflow scheduler. Since we are running in `LocalExecutor` mode, the workers are just Python processes in the same container.

Hope this helps.

Hey Joseph, fantastic work and really appreciate these guides! I'm currently having some trouble right off the bat, when I try to run the first line of code "./setup_infra.sh {your-bucket-name}". I received a number of errors like:

I know this is an older post, but are there some other configurations that need to be adjusted before starting this?

Hey, So the access denied error usually happens when your AWS CLI is not configured correctly. Could you please run `aws s3 ls` and tell me what you get?

As for the group id, can you please paste a screenshot of the error messages? I can take a look.

Hello! I have 2 questions, 1st question: what approach do you believe is best to learn what you have here? the code is here, even the infrastructure and set up is 1 script away, I feel I do not have the opportunity to learn if I follow along because everything is already laid out or?

2nd question: as I was trying to follow on GCP (no time to learn aws rn), since I do not use the script to set up the infrastructure and have to do it myself, I could not understand whether the local postgres is actually local as in on my local machine, or on the compute node. I guess on the compute node because the docker-compose file has both postgres & airflow?

Extra: I believe teaching us how to build a set-up infra like you have would be fantastic. I usually use terraform, and my scripting is quite poor, but I try to learn from your work. really amazing.

Hey,

what approach do you believe is best to learn what you have here? => IMO the best way is to try out this project and get it working end to end. Then choose a dataset that you are interested in and replicate what you learnt here from scratch.

Ah yes, the infra is a bit confusing. All the containers specified in the docker-compose.yml file (including postgres) are run on the EC2 instance.

Teaching us how to build a set-up infra like you have would be fantastic. => 100% agreed, I am working on this, and making the setup easier to understand and follow.

Hello,

I'm very new to this. I'm on windows 11 and created aws account and installed aws cli. Trying to set it up using aws. Sorry if it is already answered. Where do I run git clone command? Do I run it on my local machine opening cmd? it says git is not recognized. Where do I run step 4.3 make down and call .sh script? Please help, thanks in advance.

Hello,

Hope this helps. LMK if you have any questions.

psql: error: connection to server at "sde-batch-de-project.cryjgtelr1px.us-east-1.redshift.amazonaws.com" (54.211.97.226), port 5439 failed: Operation timed out

Is the server running on that host and accepting TCP/IP connections?

This is the only error I'm left with after several hours of running the setup and teardown scripts and debugging. Any suggestions on what to do? I got airflow and metabase working.

Hi Joseph,

In main.tf I see that we set AIRFLOW_CONN_AWS_DEFAULT=aws://?region_name=${var.aws_region}, but when and where are AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY being set? Where is this set up when running make infra-up?

Hi, The aws_region is set in the variable.tf file here

As for the AWS access and secret keys, terraform init will establish that by looking for the AWS config files that come with your AWS CLI. There are other ways to let terraform use your AWS account, as shown here. Hope this helps.

awesome, thank you!

Hello! Thanks for creating this project. I am learning a lot from it. Anyways, I was trying to follow through with the project and struggled to get some parts working properly. Thought to share how I managed to resolve them.

minor. To get airflow running in apple silicon, add --platform=linux/arm64 to the FROM statement in the docker file found in containers/airflow

SSO: The issues are most likely because I am using AWS SSO, with another profile instead of a default one. I ran into a lot of issues with AWS because I was starting from scratch and trying to implement SSO. From https://github.com/hashicorp/terraform/issues/32448#issuecomment-1505575049 , seems like config has some compatibility issues with terraform. The same link contains a simple fix though!

s3. ACL Access Denied. We need to re-enable ACL access. Resolved by going into my s3 bucket. Under permissions go to Block public access > Edit > uncheck both "Block public access ... (ACLs)" for new ACL and any ACL. Then under Object Ownership, enable ACL.

EMR default role is missing. Solve with command line: aws emr create-default-roles

Still some errors but will keep updating as I go along

Thanks a lot Huuji!

Thanks! Was also experiencing similar issues and needed to find solutions. Did you also encounter a timed out error while setting up Redshift?

This is part of the 'make infra-up' command

How do i get the data used?

The data is copied from an S3 bucket as part of the setup process https://github.com/josephmachado/beginner_de_project/blob/09bfcaf87834b499393592e120790db782baa38c/Makefile#L3-L5

Hey! Thank you so much for this. Learning a ton as I seek to transition from a DA role into DE.

I had some errors while running the make infra-up command that I was able to resolve, but I am getting a new one that I am unable to fix. Could you please help?

Error: could not start transaction: dial tcp 52.201.48.149:5439: connect: operation timed out

with redshift_schema.external_from_glue_data_catalog,

on main.tf line 170, in resource "redshift_schema" "external_from_glue_data_catalog":

170: resource "redshift_schema" "external_from_glue_data_catalog" {

Hi, amazing work. Regarding the question in the article, 'will the pipeline run, if your data size increases by 10x, 100x, 1000x why? why not?', I think it would fail if our data lake, data warehouse, or EMR can't support this amount of data, although EMR does not necessarily need to process all the data in memory. However, if we process in batches, we do not need to process all the data at once, so I think the pipeline could run successfully. I would really like to know your opinion. Please correct me if you spot anything wrong. Thank you in advance; I would like to contribute to your project in the future.

Thank you Sek Fook Tan. That is correct, scaling data pipelines is context dependent. The task `extract_user_purchase_data` also doesn't scale, because we extract data to the server's local file system.

I wrote an article about scaling data pipelines that can provide more ideas on how to scale: https://www.startdataengineering.com/post/scale-data-pipelines/

thank you so much!

Thank you Joseph for the tutorial. But I still encounter errors on my Mac (arm64).

When I use make-cloud-airflow and make-cloud-database, the localhost web does not work.

sudo lsof -nP -iTCP -sTCP:LISTEN

com.docke 17456 131u IPv6 0t0 TCP *:8080 (LISTEN)

com.docke 17456 183u IPv6 0t0 TCP *:5432 (LISTEN)

ssh 31315 7u IPv6 0t0 TCP [::1]:8080 (LISTEN)

ssh 31315 8u IPv4 0t0 TCP 127.0.0.1:8080 (LISTEN)

Is there a conflict in these connections?

Hello everyone,

could someone give me an idea what are the costs of running this pipeline? Thanks a lot!

Yes

Hi, may I ask about ./setup_infra.sh {your-bucket-name}: is the bucket name a bucket that should already exist in AWS S3, or will the sh file create a new bucket with the name {your-bucket-name} for us?

The setup_infra.sh script will create the bucket. E.g., running it with my-aws-bkt as the argument will create a bucket named my-aws-bkt. Hope this helps.

I am having an issue very early in the project. I have done the setup as instructed in Section 4.1, and I ran the script given on my Windows machine, which has all of the prerequisites mentioned. After the script finishes running, I log into Airflow successfully, but I do not see any of the data or variables in Airflow as they are shown in the pictures. Instead, I see a DAG error with the following message:

Broken DAG: [/opt/airflow/dags/user_behaviour.py] Traceback (most recent call last):

File "/opt/airflow/dags/user_behaviour.py", line 15, in <module>

BUCKET_NAME = Variable.get("BUCKET")

File "/home/airflow/.local/lib/python3.6/site-packages/airflow/models/variable.py", line 140, in get

raise KeyError(f'Variable {key} does not exist')

KeyError: 'Variable BUCKET does not exist'

Any ideas what might be causing this issue and how it can be fixed?

I got the same problem. If you pay attention when running the command `setup_infra.sh sde-sample-bkt`, you will see the error below at the beginning:

An error occurred (IllegalLocationConstraintException) when calling the CreateBucket operation: The unspecified location constraint is incompatible for the region specific endpoint this request was sent to.

You can fix this by editing the file `setup_infra.sh` at line 26 and adding the argument `--create-bucket-configuration LocationConstraint=us-west-2`. It worked for me. You can also try other locations. For details on why this causes the problem, see: https://stackoverflow.com/questions/49174673/aws-s3api-create-bucket-bucket-make-exception/49174798. Hopefully it helps.

It sounds like the command to add the bucket to the Airflow variables did not execute. This might be the result of a few things. Can you

`setup_infra.sh`?

Different person, but I get the same error as the user to whom you replied, Joseph. S3, Redshift and EMR instances were created successfully.

Airflow is showing this error:

Broken DAG: [/opt/airflow/dags/user_behaviour.py] Traceback (most recent call last):

File "/opt/airflow/dags/user_behaviour.py", line 15, in <module>

BUCKET_NAME = Variable.get("BUCKET")

File "/home/airflow/.local/lib/python3.6/site-packages/airflow/models/variable.py", line 140, in get

raise KeyError(f'Variable {key} does not exist')

KeyError: 'Variable BUCKET does not exist'
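For readers hitting the IllegalLocationConstraintException mentioned earlier in this thread, the sketch below shows the same idea as the --create-bucket-configuration fix, expressed as the keyword arguments one would pass to boto3's S3 create_bucket call. The helper name is made up for illustration, and the bucket name and regions are just the ones from the comments above. It relies on the S3 behaviour that us-east-1 must not be given as a LocationConstraint, while any other region has to be named explicitly.

```python
def create_bucket_kwargs(bucket: str, region: str) -> dict:
    """Build keyword arguments for an S3 create_bucket call."""
    kwargs = {"Bucket": bucket}
    # us-east-1 is the default location and is rejected as a
    # LocationConstraint; every other region must be named explicitly.
    if region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return kwargs


print(create_bucket_kwargs("sde-sample-bkt", "us-west-2"))
print(create_bucket_kwargs("sde-sample-bkt", "us-east-1"))
# With boto3 installed and AWS credentials configured, these could be used as
# boto3.client("s3", region_name=region).create_bucket(**kwargs).
```

If the bucket is never created, the later step that stores the bucket name in Airflow has nothing valid to point to, which is one way to end up with the missing BUCKET variable error.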

What does this command do? psql -f ./redshift_setup.sql postgres://$REDSHIFT_USER:$REDSHIFT_PASSWORD@$REDSHIFT_HOST:$REDSHIFT_PORT/dev

I don't see anywhere in the docs that say that this is how to connect to redshift? Thanks!

It is a cli command used to connect to redshift or postgres databases.

We set up the variables here, and the `psql` command allows us to run the setup query `redshift_setup.sql` in our Redshift cluster.

See psql for details about this command. Hope this helps. LMK if you have more questions.
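To show what that connection string is made of, here is a small Python sketch that assembles the same kind of URI from environment variables. The variable names are assumed to follow the REDSHIFT_PORT pattern from the command above, the demo values are hypothetical, and the percent-encoding of the user and password is an extra precaution that the shell command does not perform.

```python
import os
from urllib.parse import quote


def redshift_uri(env=os.environ, database: str = "dev") -> str:
    """Assemble a postgres:// connection URI from REDSHIFT_* settings."""
    # Percent-encode credentials so characters like "@" or "/" stay valid.
    user = quote(env["REDSHIFT_USER"], safe="")
    password = quote(env["REDSHIFT_PASSWORD"], safe="")
    host = env["REDSHIFT_HOST"]
    port = env.get("REDSHIFT_PORT", "5439")  # 5439 is Redshift's default port
    return f"postgres://{user}:{password}@{host}:{port}/{database}"


# Hypothetical settings, for illustration only.
demo_env = {
    "REDSHIFT_USER": "awsuser",
    "REDSHIFT_PASSWORD": "p@ss/word",
    "REDSHIFT_HOST": "example-cluster.us-east-1.redshift.amazonaws.com",
}
print(redshift_uri(demo_env))
```

psql accepts a URI like this as its connection argument, and the -f flag tells it to run the statements in the given SQL file against that database.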

Could I please receive notes on Data Engineering?

Hi Joseph, I'm getting a DAG import error:

Broken DAG: [/opt/airflow/dags/user_behaviour.py] Traceback (most recent call last):

File "/opt/airflow/dags/user_behaviour.py", line 15, in <module>

BUCKET_NAME = Variable.get("BUCKET")

File "/home/airflow/.local/lib/python3.6/site-packages/airflow/models/variable.py", line 140, in get

raise KeyError(f'Variable {key} does not exist')

KeyError: 'Variable BUCKET does not exist'

Wondering how I can fix this? Thanks.

Hi, Can you go to the Airflow UI and see if you have the variable in there? Also, can you see if there are any errors when you run `setup_infra.sh`?

If the variable is not set in the Airflow UI, you can use this command to try to reset it; replace `$1` with your bucket name.

LMK if this resolves the issues or if you have more questions.

Hey Joseph, thanks for responding so quick! The Airflow variable was not there. I set it up manually using the command you linked. One thing I figured out was that the docker container is named incorrectly. It should be `beginner_de_project-airflow-webserver-1`, but the one in the script is `beginner_de_project_airflow-webserver_1`. The "_" instead of "-" seems to be causing problems.

It seems to be working fine after I changed all the containers to the correct name in the script, tore down, and re-ran `./setup_infra.sh {your-bucket-name}`. Airflow has no DAG errors now. I will update here if I get any more issues.

Thank you so much for catching this, I have updated it ref
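The naming mismatch above matches the difference between Docker Compose V1, which joins project, service and index with underscores, and Compose V2, which uses hyphens by default. A minimal sketch of the two patterns, with a made-up helper name and the project and service names taken from the comment above:

```python
def container_name(project: str, service: str, index: int = 1, v2: bool = True) -> str:
    """Default container name generated by Docker Compose V2 (or V1)."""
    # Compose V2 separates the parts with "-", Compose V1 used "_".
    sep = "-" if v2 else "_"
    return sep.join([project, service, str(index)])


print(container_name("beginner_de_project", "airflow-webserver"))
print(container_name("beginner_de_project", "airflow-webserver", v2=False))
```

Scripts that hard-code container names for docker exec break when the Compose version changes, which is why addressing the service through docker compose exec is usually the more robust choice.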

Hi Joseph. When I remove -d from the command "docker compose -d" in setup_infra.sh and start setup, I see many similar errors in log of beginner_de_project-postgres-1 container such as "ERROR: relation "XXX" does not exist". Do you know why these errors happen and how can I fix them?

Hi, That seems like some tables were not created properly. Can you

Hi Joseph. When I remove -d from the command "docker compose up -d" in setup.sh and start setup, I see many similar errors such as "ERROR: relation "XXX" does not exist" in the log of beginner_de_project-postgres-1 container. Do you know why these errors happen and how to fix them?.

Hi,

I am getting the error below while running the ./setup_infra.sh file:

Traceback (most recent call last):

File "/home/airflow/.local/bin/airflow", line 5, in <module>

File "/home/airflow/.local/lib/python3.6/site-packages/airflow/__init__.py", line 46, in <module>

File "/home/airflow/.local/lib/python3.6/site-packages/airflow/settings.py", line 446, in initialize

File "/home/airflow/.local/lib/python3.6/site-packages/airflow/logging_config.py", line 73, in configure_logging

File "/home/airflow/.local/lib/python3.6/site-packages/airflow/logging_config.py", line 68, in configure_logging

File "/usr/local/lib/python3.6/logging/config.py", line 802, in dictConfig

File "/usr/local/lib/python3.6/logging/config.py", line 573, in configure

ValueError: Unable to configure handler 'processor': [Errno 13] Permission denied: '/opt/airflow/logs/scheduler'

Hmm, I'm not sure. Can you post a screenshot of the error, and a screenshot of the output of running `docker ps`?

Hi Joseph, This issue has been resolved now. All my containers are up now and I am able to connect to them; however, there were other errors while running setup_infra.sh.

Below is the snapshot of those errors. /*Docker ps command snapshot */ [https://ibb.co/VT1C27D]

/* Error snapshot while running setup_infra.sh */

[https://ibb.co/DDxDgJG] [https://ibb.co/3WGvZhk] [https://ibb.co/sqqZHKf]

Also the AWS EMR cluster is showing some error when viewed through the AWS console. Below is the snapshot of that error.

[https://ibb.co/CBgHTy7]

Hi Pankaj,

It seems like your issues were due to docker not having enough RAM and your AWS account not having enough quota.

For the docker issues: please try the new infra_setup.sh. It sets up everything on AWS, so you don't have to run docker locally.

For the insufficient EMR machines: you will need to request a quota increase via https://docs.aws.amazon.com/servicequotas/latest/userguide/request-quota-increase.html