Build Data Engineering Projects with a Free Template

- 1. Introduction

- 2. Run Data Pipeline

- 3. Architecture and services in this template

- 4. CI/CD setup

- 5. Putting it all together with a Makefile

- 6. Data projects using other tools and services

- 7. Conclusion

- 8. Further reading

- 9. References

1. Introduction

Setting up data infrastructure is one of the most complex parts of starting a data engineering project. If you are overwhelmed by

- Setting up data infrastructure such as Airflow, Redshift, Snowflake, etc.

- Trying to set up your infrastructure with code

- Not knowing how to deploy new features/columns to an existing data pipeline

- DevOps practices such as CI/CD for data pipelines

Then this post is for you. This post will cover the critical concepts of setting up data infrastructure, development workflow, and a few sample data projects that follow this pattern. We will also use a data project template that runs Airflow, Postgres, & Metabase to demonstrate how each concept works.

By the end of this post, you will understand how to set up data infrastructure with code and how developers collaborate on new features for a data pipeline, & you will have a GitHub template that you can use for your data projects.

2. Run Data Pipeline

The code is available in the data_engineering_project_template repository.

2.1. Run on codespaces

You can run this data pipeline using GitHub codespaces. Follow the instructions below.

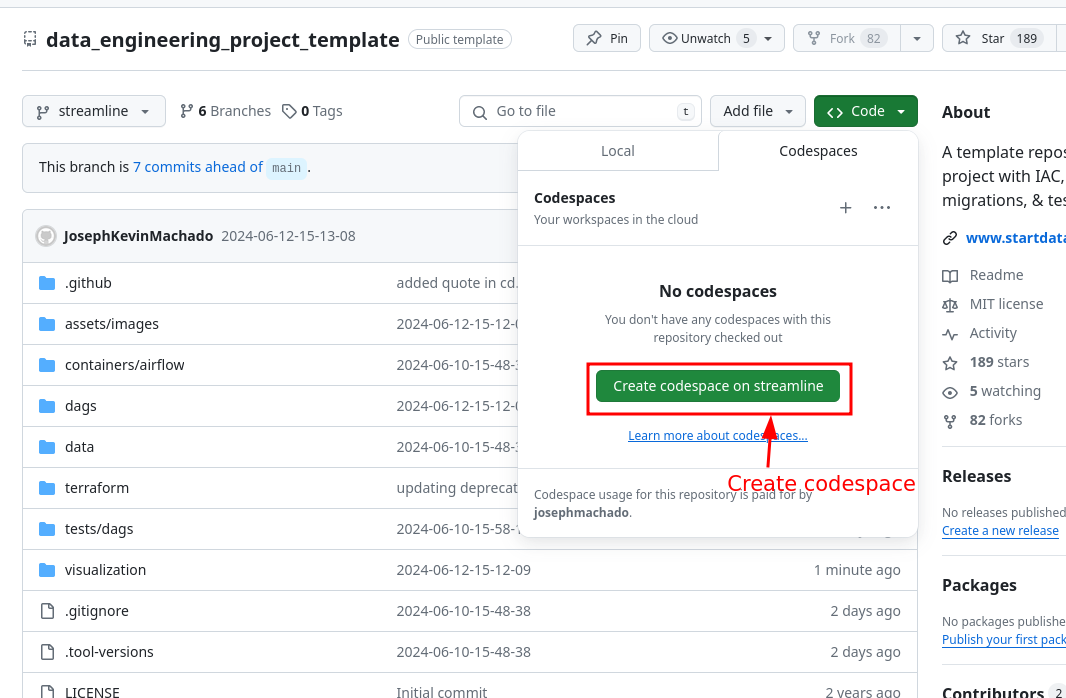

- Create codespaces by going to the data_engineering_project_template

repository, cloning it(or click

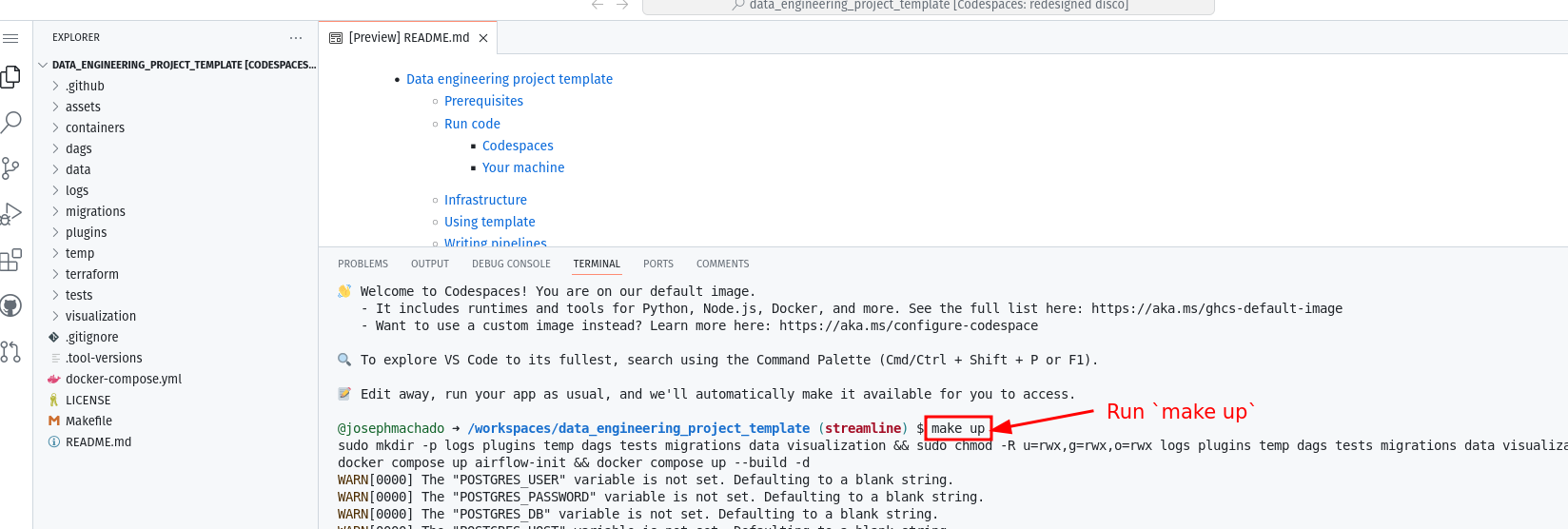

Use this templatebutton) and then clicking onCreate codespaces on mainbutton. - Wait for codespaces to start, then in the terminal type

make up. - Wait for

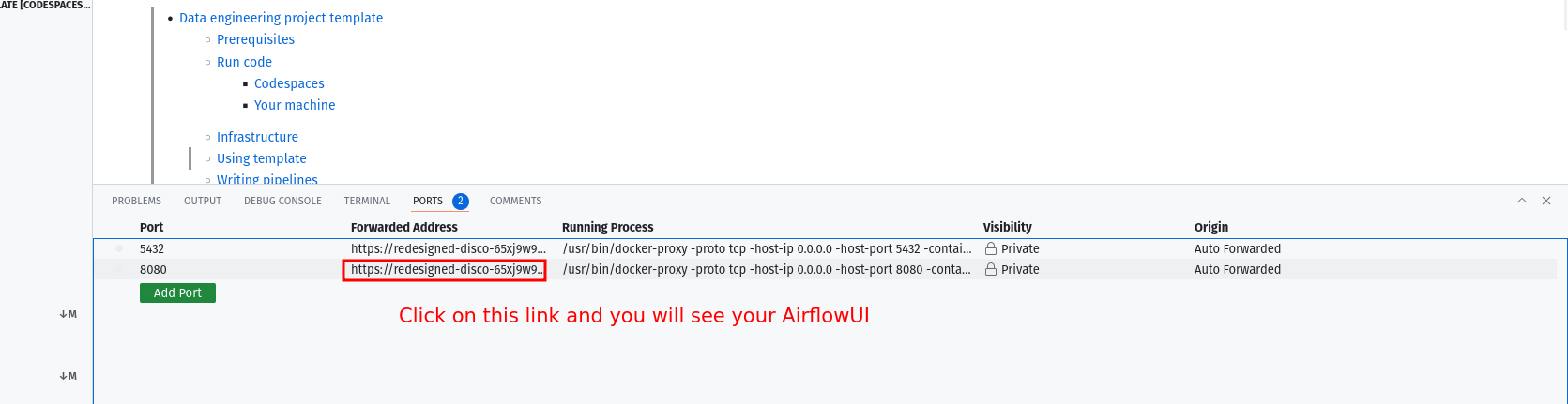

make upto complete, and then wait for 30s (for Airflow to start). - After 30s go to the

portstab and click on the link exposing port8080to access Airflow UI (username and password isairflow).

2.2. Run locally

To run locally, you need:

- git

- GitHub account

- Docker with at least 4GB of RAM and Docker Compose v1.27.0 or later

Clone the repo and run the following commands to start the data pipeline:

```shell
git clone https://github.com/josephmachado/data_engineering_project_template.git
cd data_engineering_project_template
make up
sleep 30 # wait for Airflow to start
make ci # run checks and tests
```

Go to http://localhost:8080 to see the Airflow UI. The username and password are both airflow.
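The fixed `sleep 30` above is a guess at Airflow's startup time. A minimal, standard-library-only sketch of polling instead is shown below. It assumes the Airflow 2.x webserver serves a `/health` endpoint on port 8080 (check your Airflow version); the helper names `url_is_up` and `wait_until_ready` are hypothetical and not part of the template.

```python
import http.client
import time
import urllib.error
import urllib.request
from typing import Callable

# Assumption: Airflow 2.x webserver health endpoint, exposed on port 8080.
AIRFLOW_HEALTH_URL = "http://localhost:8080/health"


def url_is_up(url: str, timeout: float = 5.0) -> bool:
    """Return True if a GET request to `url` answers with a 2xx status code."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        # Connection refused, DNS failure, timeout, malformed response,
        # or a 4xx/5xx status (urlopen raises HTTPError for those).
        return False


def wait_until_ready(
    check: Callable[[], bool],
    timeout_s: float = 120.0,
    interval_s: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> bool:
    """Call `check` every `interval_s` seconds until it is True or `timeout_s` elapses."""
    deadline = clock() + timeout_s
    while True:
        if check():
            return True
        if clock() >= deadline:
            return False
        sleep(interval_s)
```

For example, `wait_until_ready(lambda: url_is_up(AIRFLOW_HEALTH_URL))` returns True as soon as the webserver answers, or False after two minutes. The `sleep` and `clock` parameters exist only so the loop can be tested without real waiting.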

3. Architecture and services in this template

This data engineering project template includes the following:

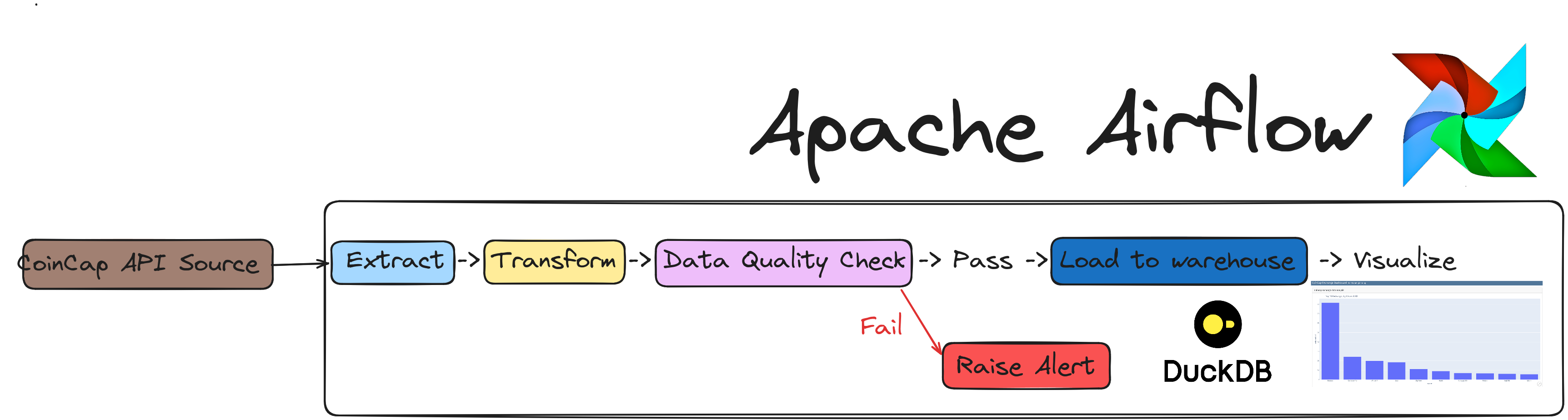

1. Airflow: To schedule and orchestrate DAGs.

2. Postgres: To store Airflow's details (which you can see via the Airflow UI); it also has a schema to represent upstream databases.

3. DuckDB: To act as our warehouse.

4. Quarto with Plotly: To convert code in markdown format to HTML files that can be embedded in your app or served as is.

5. cuallee: To run data quality checks on the data we extract from the CoinCap API.

6. minio: To provide an S3-compatible open source storage system.

For simplicity, services 1-5 above are installed and run in one container defined here.
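The template runs its data quality checks with cuallee. The snippet below does not use or reproduce cuallee's API; it is a plain-Python sketch of two common check types, completeness and uniqueness, applied to rows shaped like a CoinCap API response. The field names `id` and `priceUsd` are assumptions about that response, and `CheckResult`, `is_complete`, and `is_unique` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Any, Mapping, Sequence

# Illustrative checks only; this is not cuallee's API.


@dataclass
class CheckResult:
    name: str
    passed: bool
    detail: str


def is_complete(rows: Sequence[Mapping[str, Any]], column: str) -> CheckResult:
    """Pass only if no row has a missing or None value in `column`."""
    nulls = sum(1 for row in rows if row.get(column) is None)
    return CheckResult(f"is_complete({column})", nulls == 0, f"{nulls} null value(s)")


def is_unique(rows: Sequence[Mapping[str, Any]], column: str) -> CheckResult:
    """Pass only if every value in `column` appears once (values must be hashable)."""
    values = [row.get(column) for row in rows]
    duplicates = len(values) - len(set(values))
    return CheckResult(f"is_unique({column})", duplicates == 0, f"{duplicates} duplicate value(s)")
```

A pipeline task would run a list such as `[is_complete(rows, "priceUsd"), is_unique(rows, "id")]` after extraction and fail if any result has `passed == False`, so bad data stops before it reaches the warehouse.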

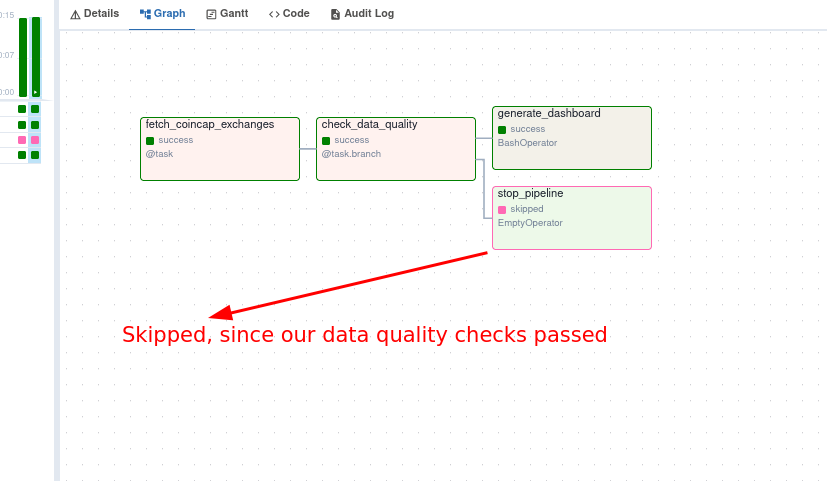

The coincap_elt DAG in the Airflow UI

will look like the below image:

You can see the rendered html at ./visualizations/dashboard.html .

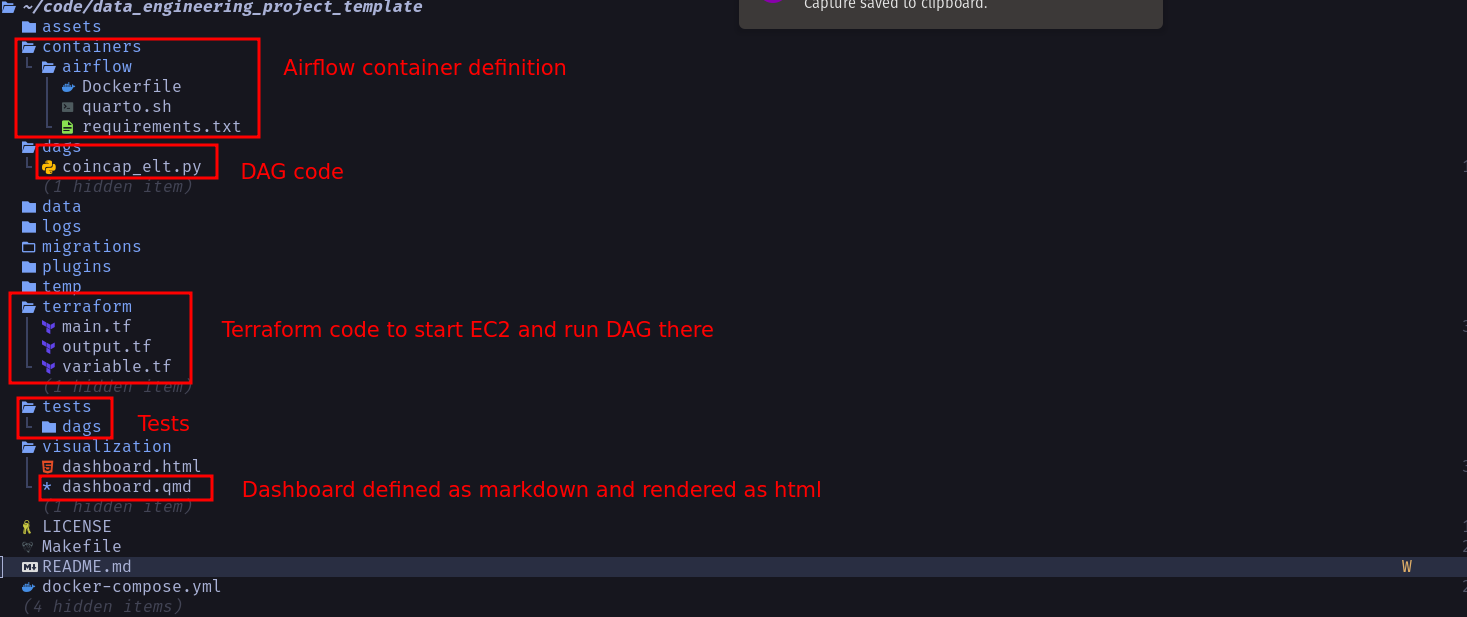
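The coincap_elt DAG's task code is not shown in this post. As a rough, standard-library-only sketch of an extract-and-load step, the snippet below parses a CoinCap-style JSON payload (assumed shape `{"data": [...]}` with `id`, `symbol`, and `priceUsd` fields) and loads it into a table. It uses `sqlite3` as a stand-in for DuckDB so it runs without extra packages; the table name `coin_prices` and the functions `extract` and `load` are hypothetical.

```python
import json
import sqlite3
from typing import Any

# sqlite3 stands in for DuckDB here so the sketch needs only the standard library.


def extract(payload: str) -> list[dict[str, Any]]:
    """Parse a CoinCap-style response body (assumed {"data": [...]}) into records."""
    return json.loads(payload)["data"]


def load(conn: sqlite3.Connection, records: list[dict[str, Any]]) -> int:
    """Upsert records into coin_prices and return the table's row count."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS coin_prices ("
        "id TEXT PRIMARY KEY, symbol TEXT, price_usd REAL)"
    )
    # INSERT OR REPLACE keeps reruns idempotent: reloading the same ids overwrites them.
    conn.executemany(
        "INSERT OR REPLACE INTO coin_prices (id, symbol, price_usd) VALUES (?, ?, ?)",
        [(r["id"], r["symbol"], float(r["priceUsd"])) for r in records],
    )
    conn.commit()
    return conn.execute("SELECT COUNT(*) FROM coin_prices").fetchone()[0]
```

Keeping the load idempotent matters for scheduled pipelines: if Airflow retries a failed task or you backfill a run, loading the same batch twice should not duplicate rows.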

The file structure of our repo is as shown below:

4. CI/CD setup

We set up the development flow to make new feature releases easy and quick. We will use

- git for version control

- GitHub for hosting our repository, GitHub flow for developing new features, and

- GitHub Actions for CI/CD.

The CI and CD workflows are commented out; uncomment them if you want to set up CI/CD for your pipelines.

4.1. CI: Automated tests & checks before the merge with GitHub Actions

Note: Read this article, which goes over how to use GitHub Actions for CI.

Continuous integration in our repository means automatically testing code before it is merged into the main branch (which is the code that runs on the production server). In our template, we have defined formatting (isort, black), type checking (mypy), lint/style checking (flake8), & Python testing (pytest) as part of our `make ci` command.

We use GitHub Actions to run these checks automatically when someone creates a pull request. The CI workflow is defined in this ci.yml file.
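As an illustration of what the pytest step picks up, here is a minimal sketch of a unit test for a pipeline transform. Both `clean_prices` and its test are hypothetical examples, not code from the template; pytest discovers any function whose name starts with `test_` in files named `test_*.py`.

```python
from typing import Any

# Hypothetical transform and test, not taken from the template.


def clean_prices(records: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Drop records without a price and cast priceUsd from string to float."""
    return [
        {**r, "priceUsd": float(r["priceUsd"])}
        for r in records
        if r.get("priceUsd") not in (None, "")
    ]


def test_clean_prices_drops_missing_and_casts() -> None:
    records = [
        {"id": "bitcoin", "priceUsd": "65000.5"},
        {"id": "ethereum", "priceUsd": None},
        {"id": "tether", "priceUsd": ""},
    ]
    assert clean_prices(records) == [{"id": "bitcoin", "priceUsd": 65000.5}]
```

Because the function is fully type-annotated, the same file also passes through the mypy step, so one small test exercises two of the CI checks.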

4.2. CD: Deploy to production servers with GitHub Actions

Continuous delivery in our repository means deploying our code to the production server. We use an EC2 instance running Docker containers as our production server; after merging into the main branch, our code is copied to the EC2 server using cd.yml.

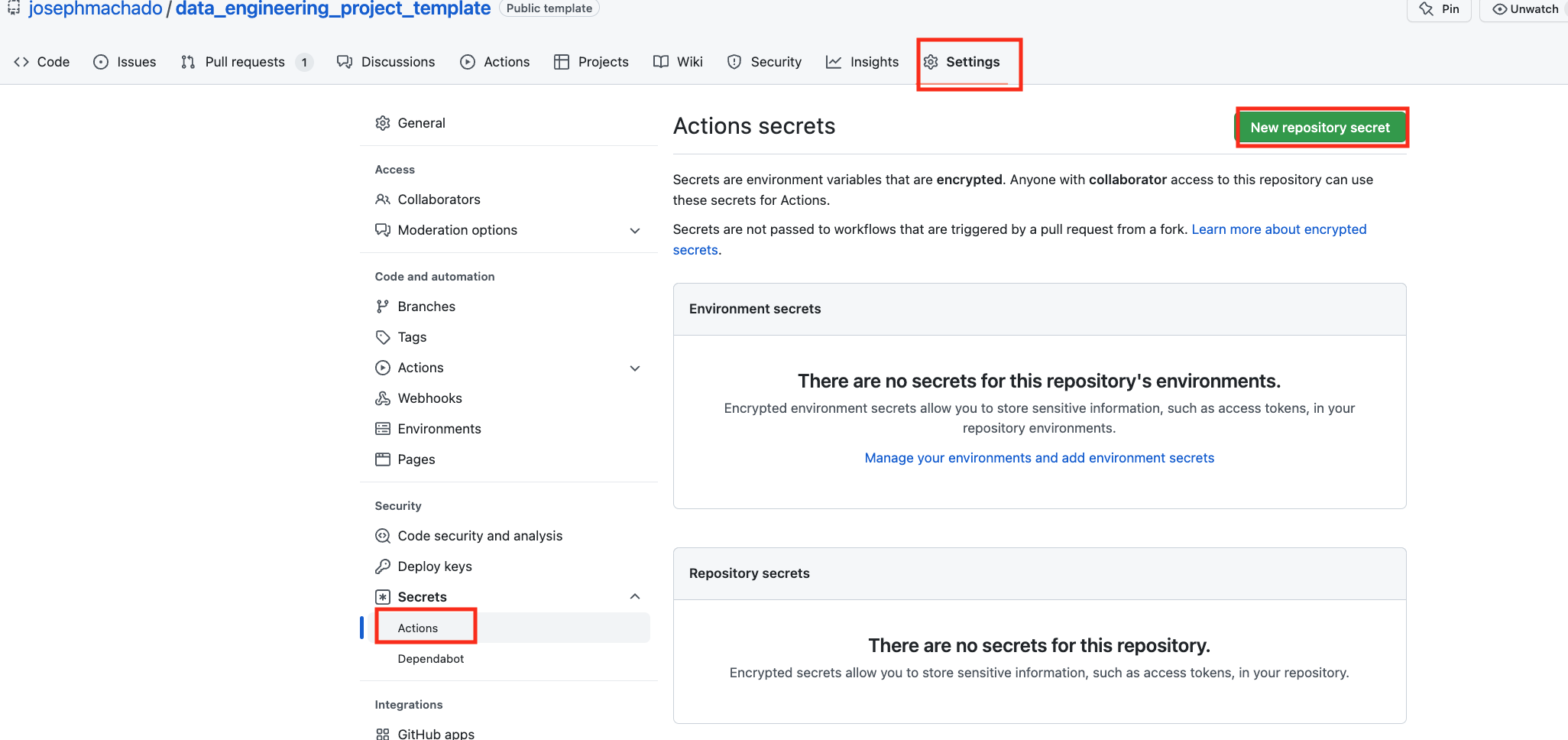

Note that for our CD to work, we first need to set up the infrastructure with Terraform and define the following repository secrets. You can set up the repository secrets by going to Settings > Secrets > Actions > New repository secret.

- SERVER_SSH_KEY: Get this by running `terraform -chdir=./terraform output -raw private_key` in the project directory, and paste the entire output into a new Actions secret called SERVER_SSH_KEY.

- REMOTE_HOST: Get this by running `terraform -chdir=./terraform output -raw ec2_public_dns` in the project directory.

- REMOTE_USER: The value for this is ubuntu.
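A failing deploy step is often caused by an empty or misnamed secret. GitHub Actions does not put repository secrets into a step's environment automatically; the workflow has to map them (for example under `env:` with `${{ secrets.REMOTE_HOST }}`). Assuming you do that, a small hypothetical pre-flight script like the one below can fail fast with a clear message before the deploy step runs. The names `missing_secrets` and `main` are illustrative only.

```python
import os
import sys
from typing import Mapping, Sequence

# Assumes the workflow maps these repository secrets into environment variables.
REQUIRED_SECRETS = ("SERVER_SSH_KEY", "REMOTE_HOST", "REMOTE_USER")


def missing_secrets(
    env: Mapping[str, str], required: Sequence[str] = REQUIRED_SECRETS
) -> list[str]:
    """Return the names in `required` that are unset or blank in `env`."""
    return [name for name in required if not env.get(name, "").strip()]


def main() -> int:
    """Print any missing secrets to stderr and return a process exit code."""
    missing = missing_secrets(os.environ)
    if missing:
        print(f"Missing deploy secrets: {', '.join(missing)}", file=sys.stderr)
        return 1
    return 0
```

To use it as a workflow step, call `sys.exit(main())` from the script's entry point; a non-zero exit stops the job before any SSH connection is attempted.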

5. Putting it all together with a Makefile

We use a Makefile to define aliases for the commands used during development and CI/CD.
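The Makefile is the right home for these aliases. The Python sketch below only illustrates what a `ci` alias does conceptually: run each check in order and stop at the first failure, which is also make's default behavior for a recipe. The exact tool flags in `CI_COMMANDS` are assumptions; the template's Makefile may pass different options or paths.

```python
import subprocess
from typing import Callable, Sequence

# Assumed tool invocations; the template's Makefile may use different flags or paths.
CI_COMMANDS: list[list[str]] = [
    ["isort", "--check-only", "."],
    ["black", "--check", "."],
    ["flake8", "."],
    ["mypy", "."],
    ["pytest"],
]


def run_subprocess(cmd: Sequence[str]) -> int:
    """Run a command and return its exit code (raises FileNotFoundError if not installed)."""
    return subprocess.run(list(cmd)).returncode


def run_checks(
    commands: Sequence[Sequence[str]],
    run: Callable[[Sequence[str]], int] = run_subprocess,
) -> int:
    """Run commands in order and stop at the first non-zero exit code, like make."""
    for cmd in commands:
        code = run(cmd)
        if code != 0:
            print(f"FAILED ({code}): {' '.join(cmd)}")
            return code
    return 0
```

Stopping early keeps feedback fast: if formatting fails, there is little point waiting for the full test suite before fixing it.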

6. Data projects using other tools and services

While an Airflow + Postgres warehouse setup might be sufficient for most practice projects, here are a few projects that use different tools with managed services.

| Component | Beginner DE project | Project to impress HM | dbt DE project |
|---|---|---|---|
| Scheduler | Airflow | cron | - |
| Executor | Apache Spark, DuckDB | Python process | DuckDB |
| Orchestrator | Airflow | - | dbt |
| Source | Postgres, CSV, S3 | API | flat file |
| Destination | DuckDB | DuckDB | DuckDB |
| Visualization/BI tool | Quarto | Metabase | - |
| Data quality checks | - | - | dbt tests |
| Monitoring & Alerting | - | - | - |

All of the above projects use the same tools for data infrastructure setup:

- Local development: Docker & Docker Compose

- IaC: Terraform

- CI/CD: GitHub Actions

- Testing: pytest

- Formatting: isort & black

- Lint check: flake8

- Type check: mypy

7. Conclusion

To recap, we saw

The next time you start a new data project or join an existing data team, look for these components to make developing data pipelines quick and easy.

This article helps you understand how to set up data infrastructure with code, how developers collaborate on new features for a data pipeline, & how to use the GitHub template for your data projects.

If you have any questions or comments, please leave them in the comment section below.

8. Further reading

- Creating local end-to-end tests

- Data testing

- Beginner DE project

- Project to impress HM

- End-to-end DE project

9. References


Hello Joseph! Great post !!

But why while creating the EC2 instance with terraform did you clone the git repo before running the command??

Didn't you already deploy the code to that EC2 using the CD.yml with github actions??

Hi Dorian,

Thank you So there are 2 concepts here

The ci cd process is meant to be a continuous process so it’ll deploy the new code every time

Hope this helps. LMK if you have more questions

That makes sense, thanks for your response.

It was really helpful!!

Hi Joseph,

Great post and content! I believe the port forwarding isn't working. I used the non-forwarded ports to connect to airflow and metabase and they worked. as well as the non-forwarded postgres port to connect the db to metabase.

Hi Arun, Thank you for the kind words! Do you mean you connected to AF and Metabase with 8080 and 3000 respectively?

Yes this is what worked for me as well. I first got the messages saying 8081 and 3001 were already used but when I opened them manually in my browser, nothing would show. I then was just curious and tried visiting 8080 and 3000 and it worked.

Additionally, in Metabase, using port 5439 would not work. However, when I left this field blank, it allowed me to move on. Not sure if I'm seeing everything I'm supposed to, but I at least got through steps 1 and 2 now. Looking forward to going through the next steps and really digging in so I can understand everything.

Thank you for all you do!!

Thank you Aaron, I will look into fixing the port forwarding issue/make it clearer to understand in the docs. GH issue: https://github.com/josephmachado/data_engineering_project_template/issues/20

Hi, I wanted to create a pull request for this project. How can I do that? I tried comparing across forks, but my repo wasn't listed.

This is really interesting stuff, only problem is that it is really condensed.

That's a fair point, I made it condensed deliberately, this was because I wanted the reader to read and do the project ASAP. But you are right, some of these can be individual posts by themselves. Is there any topic in particular you'd like to see expanded upon?

In section 2.2 "Run the following commands in your project directory." I have a windows system and ran the command "make up" in cmd, however, "make" was not recognized and I had to install "make-3.81.exe" for the command to work. And then "'sudo' is not recognized as an internal or external command, operable program or batch file.", just wondering if I ran the command in the right place or if the tutorial was not designed for windows users.

Hi, For windows I'd recommend using WSL https://ubuntu.com/tutorials/install-ubuntu-on-wsl2-on-windows-10#1-overview The commands are meant to be run in a linux env. Sorry about the inconvenience.

Hi, thank you so much for the post, it is the most detailed procedure I can find online. I really appreciate your effort to make this available.

Hi Zoomjin, I've created an issue, I'll try to get instructions for windows soon. Issue: https://github.com/josephmachado/data_engineering_project_template/issues/7

Hi Joseph, Thank you so much for your dedication to getting this project available to the public, especially for newbies like me lol. I am now using Ubuntu to set up the infra. Currently at step 2.2, past 'make infra-up', I checked AWS EC2, status is 'running', '2/2 checks passed'. However, in the next step 'make cloud-airflow', I am receiving the message

'hostkeys_find_by_key_hostfile: hostkeys_foreach failed for /home/zoomjin/.ssh/known_hosts: Permission denied The authenticity of host 'ec2-100-25-159-193.compute-1.amazonaws.com (100.25.159.193)' can't be established. ED25519 key fingerprint is . //I have deleted the fingerprint in case it is case-sensitive. This key is not known by any other names Are you sure you want to continue connecting (yes/no/[fingerprint])? yes Failed to add the host to the list of known hosts (/home/zoomjin/.ssh/known_hosts). @@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@ @ WARNING: UNPROTECTED PRIVATE KEY FILE! @ @@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@ Permissions 0777 for 'private_key.pem' are too open. It is required that your private key files are NOT accessible by others. This private key will be ignored. Load key "private_key.pem": bad permissions ubuntu@ec2-100-25-159-193.compute-1.amazonaws.com: Permission denied (publickey). make: *** [Makefile:72: cloud-airflow] Error 255'

Did you ever find a solution for this? I'm running into the same issue.

Hi, can you try changing the 600 to 400, i.e., use chmod 400 private_key.pem in place of chmod 600? Please LMK if this works; if not, please open an issue at https://github.com/josephmachado/data_engineering_project_template/issues and I'll take a look.

https://superuser.com/questions/1323645/unable-to-change-file-permissions-on-ubuntu-bash-for-windows-10/1323647#1323647 Basil's answer is what worked for me on windows... had to restart my computer too but worked like a charm.

Hi. I'm using Ubuntu @ WSL 2. In my case I was able to fix it by adding "sudo" before ssh in each related command in the Makefile.

I apologize for the long post. I have tried to resolve the posted issue, by using chmod 600 to change the permission, however, I see that was already set up in your file. I have tried to change the file security setting directly on my computer by disabling inheritance and removing groups having access to the file.

I have the same error, were you able to resolve this?

Hey, I am trying to set this project up and have a problem. When I run make cloud-airflow, a new window opens in the browser but there is nothing there. The instance does seem to be running in the AWS EC2 instances console ("https://us-east-1.console.aws.amazon.com/ec2/home?region=us-east-1#Instances:"). What can I do to see the Airflow tab? I have the same problem with make cloud-metabase. I think there is something wrong with the connection to the instance.

Hi, Sorry that you are having issues, can you try the following

- make ssh-ec2 (https://github.com/josephmachado/data_engineering_project_template/blob/4873f2d3c887a2894de268e1bbbed7f1fed4cb36/Makefile#L77): this will ssh you into your EC2 instance.

- docker ps: to see if Airflow and Metabase are running. If not, run make infra-down and then make infra-up from your local machine.

If the issue persists, please open an issue here https://github.com/josephmachado/data_engineering_project_template/issues and I'll take a look.

This is really great! I love how it provides a high-level abstraction of setting up data infra. I am particularly interested in the database migration part. I am working on a project where I will need to sync the database schema in one account with one in another account for testing purposes. Could you offer some insights on this topic, or maybe expand on database migration and sync in your future posts? Thanks!

Hi,

I am running windows and using wsl. I am struggling with the step 'make cloud-airflow' I keep getting WARNING: UNPROTECTED PRIVATE KEY FILE!

When I run chmod 400 private_key.pem I get the following message:

terraform -chdir=./terraform output -raw private_key > private_key.pem && chmod 600 private_key.pem && ssh -o "IdentitiesOnly yes" -i private_key.pem ubuntu@$(terraform -chdir=./terraform output -raw ec2_public_dns) -N -f -L 8081:$(terraform -chdir=./terraform output -raw ec2_public_dns):8080 && open http://localhost:8081 && rm private_key.pem /bin/sh: 1: cannot create private_key.pem: Permission denied make: *** [Makefile:72: cloud-airflow] Error 2

When I run chmod 600 private_key.pem I get the following:

terraform -chdir=./terraform output -raw private_key > private_key.pem && chmod 600 private_key.pem && ssh -o "IdentitiesOnly yes" -i private_key.pem ubuntu@$(terraform -chdir=./terraform output -raw ec2_public_dns) -N -f -L 8081:$(terraform -chdir=./terraform output -raw ec2_public_dns):8080 && open http://localhost:8081 && rm private_key.pem @@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@ @ WARNING: UNPROTECTED PRIVATE KEY FILE! @ @@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@ Permissions 0777 for 'private_key.pem' are too open. It is required that your private key files are NOT accessible by others. This private key will be ignored. Load key "private_key.pem": bad permissions ubuntu@ec2-54-243-24-129.compute-1.amazonaws.com: Permission denied (publickey). make: *** [Makefile:72: cloud-airflow] Error 255

Any advice on how I can get this working?

Hi Chileshe, This may have to do with some windows WSL setting, I don't have a windows system to test this, can you please try the solutions provided here https://superuser.com/questions/1321072/ubuntu-on-windows-10-ssh-permissions-xxxx-for-private-key-are-too-open

No luck so far, think I might just try this on a macbook

I'm having trouble understanding where to run the "make up", "make ci", "make tf-init", etc. commands in WSL. I just created an empty folder for my project but was unable to understand where to run these commands.

Hi Sreepad, Sorry that wasn't clear (I have updated the info). If you have set up WSL (using the link provided) you will be able to run ubuntu, in ubuntu please open a terminal (see instructions: https://www.howtogeek.com/686955/how-to-launch-a-terminal-window-on-ubuntu-linux/) and run these commands via this terminal.

Note: Make sure you are in the cloned repository folder on your terminal. LMK if you have any questions.

Thank you, Joseph.

Does anyone get into trouble when running the script in the section 2.2? First of all, when cloning the template into my local machine, I tried "make up". However, I encountered the error "Error response from daemon: Ports are not available: exposing port TCP 0.0.0.0:8080 -> 0.0.0.0:0: listen tcp 0.0.0.0:8080: bind: Only one usage of each socket address (protocol/network address/port) is normally permitted. make: *** [Makefile:5: docker-spin-up] Error 1". After that, I fixed this by changing the mapped port of the airflow webserver to 8083:8080 like this: "airflow-webserver: <<: *airflow-common container_name: webserver command: webserver ports: - 8083:8080 healthcheck: test: [ "CMD", "curl", "--fail", "http://localhost:8083/health" ] interval: 10s timeout: 10s retries: 5 restart: always" Then, every went smoothly until the command "make cloud-airflow", which showed here: "terraform -chdir=./terraform output -raw private_key > private_key.pem && chmod 600 private_key.pem && ssh -o "IdentitiesOnly yes" -i private_key.pem ubuntu@$(terraform -chdir=./terraform output -raw ec2_public_dns) -N -f -L 8081:$(terraform -chdir=./terraform output -raw ec2_public_dns):8080 && open http://localhost:8081 && rm private_key.pem bind [127.0.0.1]:8081: Address already in use channel_setup_fwd_listener_tcpip: cannot listen to port: 8081 Could not request local forwarding. /bin/sh: 1: open: not found make: *** [Makefile:72: cloud-airflow] Error 127" I'm a beginner here so I know that this is quite a stupid question. However, I appreciate that you guys can help me out! Thanks a lot in advance!

Hi Joseph,

Thank you for a fantastic template. It helps me so much to start my DE project.

May I ask you a question? As I understand it, I clone this template to my local machine and start developing the pipeline. When I want to deploy my project to the production server, I need to log in to the production server, clone the project, and run it there. Do I understand you correctly? I am asking because I am quite confused about how a DE project runs, especially in real life.

Hello Joseph, Thank you for making this project with sufficient detail and for responding to questions. I have run into an error when running the command: make cloud-airflow. I don't think I'm having the same error as the others with this command. Please let me know what to do.

$ make cloud-airflow

terraform -chdir=./terraform output -raw private_key > private_key.pem && chmod 600 private_key.pem && ssh -o "IdentitiesOnly yes" -i private_key.pem ubuntu@$(terraform -chdir=./terraform output -raw ec2_public_dns) -N -f -L 8081:$(terraform -chdir=./terraform output -raw ec2_public_dns):8080 && open http://localhost:8081 && rm private_key.pem bind [127.0.0.1]:8081: Address already in use channel_setup_fwd_listener_tcpip: cannot listen to port: 8081 Could not request local forwarding. Warning: unknown mime-type for "http://localhost:8081" -- using "application/octet-stream" Error: no such file "http://localhost:8081" make: *** [Makefile:72: cloud-airflow] Error 2

This means another process is already using port 8081, can you open http://localhost:8081 on your browser?

I am facing the same issue here, Joseph. No, I am not able to open http://localhost:8081 in my browser.

How can I replicate this in GCP?

This uses an EC2 instance, on GCP you can setup a VM instance and do the same. GCP: https://cloud.google.com/compute/ I'd recommend starting manually and then automating with terraform

Hi, I tried updating cd.yml with my repository name; however, it gives me an error saying it failed to deploy to the server, where the three inputs sshPrivatekey, remotehost and remoteuser are mandatory and the input is invalid. Is this normal, or did I make some mistakes when configuring the AWS CLI? Thanks