Designing a Data Project to Impress Hiring Managers

- Introduction

- Objective

- Setup

- Project

- Future Work

- Tear down infra

- Conclusion

- Further Reading

- References

Introduction

Building a data project for your portfolio is hard. Getting hiring managers to read through your Github code is even harder. If you are building data projects and are

- disappointed that no one looks at your Github projects

- frustrated that recruiters don’t take you seriously because you don’t have a lot of work experience

then this post is for you. In this post, we go over one way to design a data project to impress a hiring manager and showcase your expertise. The main theme of this endeavor is show, don’t tell, since you only get a few minutes (if not seconds) of the hiring manager’s time.

Objective

When starting a project, it’s a good idea to work backward from your end goal. In our case, the main goal is to impress the hiring manager. This can be done by

- Linking your dashboard URL in your resume and LinkedIn.

- Hosting a live dashboard that is fed by near real-time data.

- Encouraging the hiring manager to look at your Github repository, which should have

  - A concise and succinct README.md.

  - An architecture diagram.

  - A clear project organization.

  - Coding best practices: tests, linting, types, and formatting.

You want to showcase your expertise to the hiring manager, without expecting them to read through your codebase. In the following sections, we will build out a simple dashboard that is populated by near real-time bitcoin exchange data. You can use this as a reference to build your dashboards.

Setup

Pre-requisites

- git

- Github account

- Terraform

- AWS account

- AWS CLI installed and configured

- Docker with at least 4GB of RAM and Docker Compose v1.27.0 or later

Read this post for information on setting up CI/CD, DB migrations, IaC (Terraform), “make” commands, and automated testing.

Run these commands to set up your project locally and on the cloud.

# Clone the code as shown below.

git clone https://github.com/josephmachado/bitcoinMonitor.git

cd bitcoinMonitor

# Local run & test

make up # start the docker containers on your computer & runs migrations under ./migrations

make ci # Runs auto formatting, lint checks, & all the test files under ./tests

# Create AWS services with Terraform

make tf-init # Only needed on your first terraform run (or if you add new providers)

make infra-up # type in yes after verifying the changes TF will make

# Wait until the EC2 instance is initialized, you can check this via your AWS UI

# See "Status Check" on the EC2 console, it should be "2/2 checks passed" before proceeding

make cloud-metabase # this command will forward Metabase port from EC2 to your machine and opens it in the browser

You can connect Metabase to the warehouse with the configs in the env file. Refer to this doc for creating a Metabase dashboard.

Create database migrations as shown below.

make db-migration # enter a description, e.g., create some schema

# make your changes to the newly created file under ./migrations

make warehouse-migration # to run the new migration on your warehouse

For continuous delivery to work, set up the infrastructure with Terraform and define the following repository secrets. You can set up the repository secrets by going to Settings > Secrets > Actions > New repository secret.

- SERVER_SSH_KEY: Run the command below in the project directory and paste the entire output into a new Action secret called SERVER_SSH_KEY.

terraform -chdir=./terraform output -raw private_key

- REMOTE_HOST: Get this by running the command below in the project directory.

terraform -chdir=./terraform output -raw ec2_public_dns

- REMOTE_USER: The value for this is ubuntu.

Project

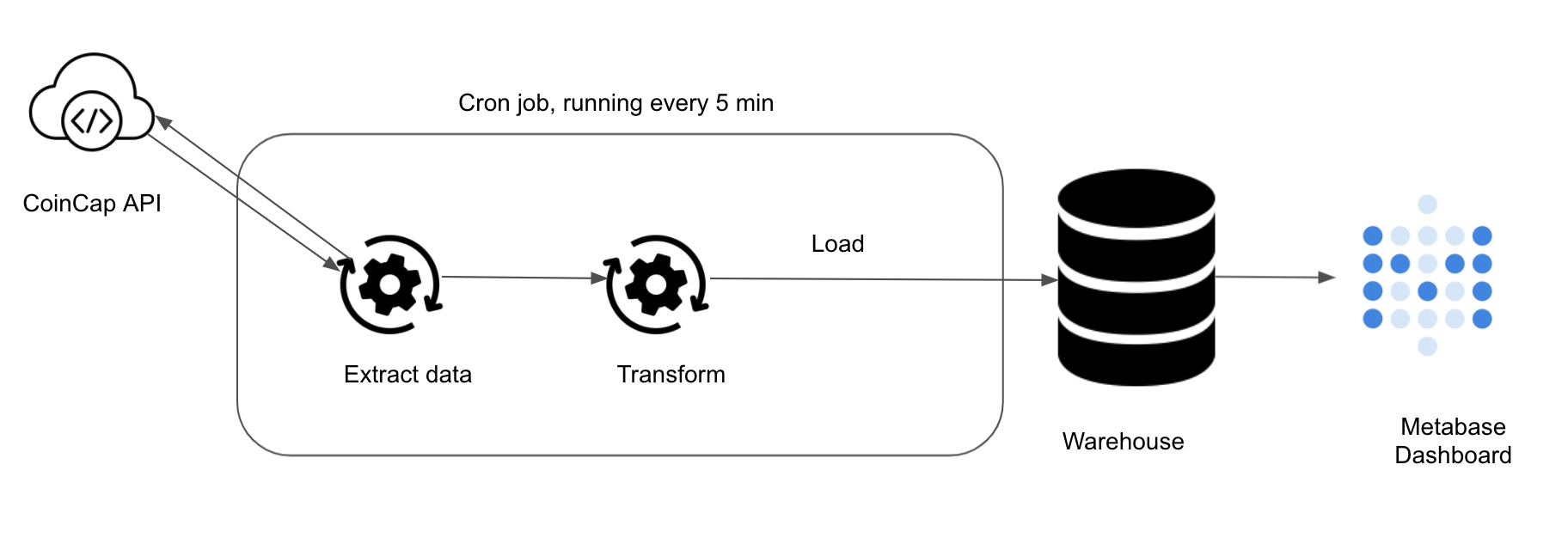

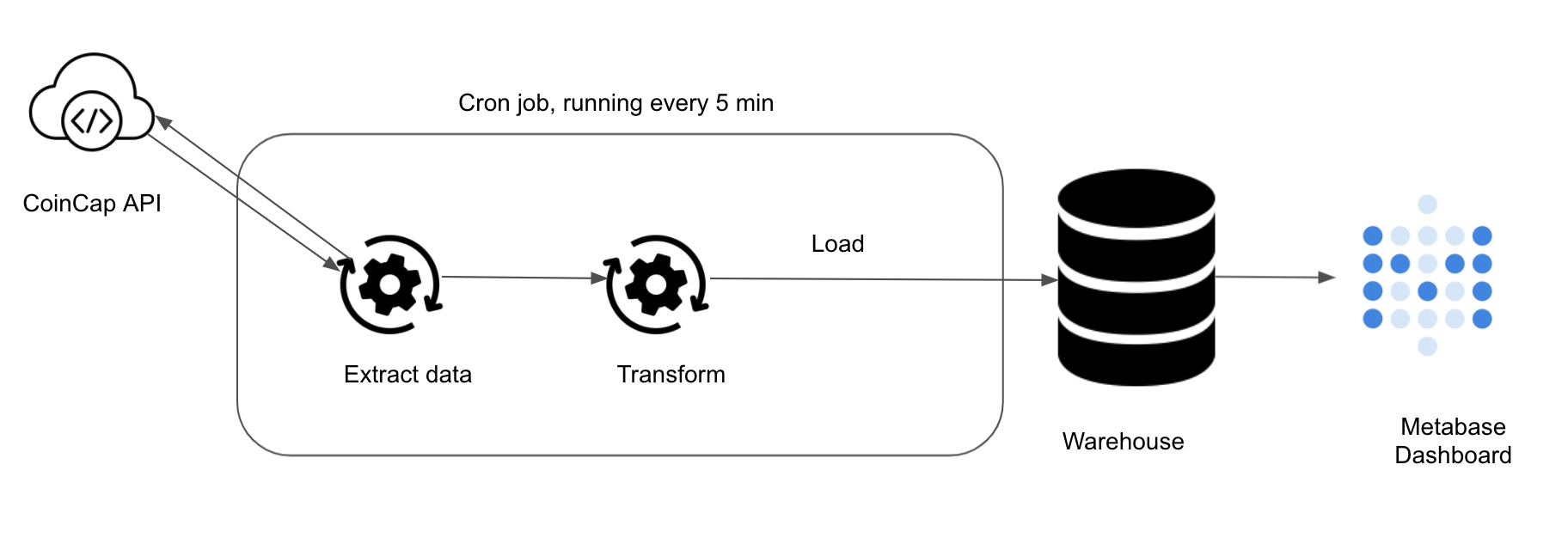

For our project, we will pull bitcoin exchange data from the CoinCap API. We will pull this data every 5 minutes and load it into our warehouse.

1. ETL Code

The code to pull data from the CoinCap API and load it into our warehouse is at exchange_data_etl.py. In this script we

- Pull data from the CoinCap API using the get_exchange_data function.

- Use the get_utc_from_unix_time function to get a UTC based date time from unix time (in ms).

- Load data into our warehouse using the _get_exchange_insert_query insert query.

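import psycopg2.extras as p  # provides execute_batch


def run() -> None:
    # Pull the latest exchange data from the API
    data = get_exchange_data()
    # Add a UTC datetime derived from the unix timestamp (in ms)
    for d in data:
        d['update_dt'] = get_utc_from_unix_time(d.get('updated'))
    # Batch insert all rows through a managed warehouse cursor
    with WarehouseConnection(**get_warehouse_creds()).managed_cursor() as curr:
        p.execute_batch(curr, _get_exchange_insert_query(), data)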
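The body of get_utc_from_unix_time is not shown in this post. A minimal sketch of such a helper, assuming it receives milliseconds since the epoch and returns a timezone-aware UTC datetime, might look like the following. The None handling is an assumption made for illustration and is not necessarily what the repository does.

```python
from datetime import datetime, timezone
from typing import Optional


def get_utc_from_unix_time(unix_ts_ms: Optional[int]) -> Optional[datetime]:
    """Convert unix time in milliseconds to a timezone-aware UTC datetime."""
    if unix_ts_ms is None:
        # Assumption: a missing timestamp is passed through as None.
        return None
    # The API reports milliseconds; fromtimestamp expects seconds.
    return datetime.fromtimestamp(int(unix_ts_ms) / 1000, tz=timezone.utc)
```

Passing tz=timezone.utc avoids depending on the local timezone of the machine (or container) that runs the script.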

Ref: API data pull best practices

There are a few things going on at “with WarehouseConnection(**get_warehouse_creds()).managed_cursor() as curr:”.

- We use the get_warehouse_creds utility function to get the warehouse connection credentials.

- The warehouse connection credentials are stored as environment variables within our docker compose definition. The docker-compose uses the hardcoded values from the env file.

- The credentials are passed as **kwargs to the WarehouseConnection class.

- The WarehouseConnection class uses contextmanager to make opening and closing DB connections easier. This lets us access the DB connection without having to write boilerplate code.

import os
from contextlib import contextmanager
from typing import Dict, Optional, Union

import psycopg2


def get_warehouse_creds() -> Dict[str, Optional[Union[str, int]]]:
    # Read the connection settings from environment variables
    return {
        'user': os.getenv('WAREHOUSE_USER'),
        'password': os.getenv('WAREHOUSE_PASSWORD'),
        'db': os.getenv('WAREHOUSE_DB'),
        'host': os.getenv('WAREHOUSE_HOST'),
        'port': int(os.getenv('WAREHOUSE_PORT', 5432)),
    }


class WarehouseConnection:
    def __init__(
        self, db: str, user: str, password: str, host: str, port: int
    ):
        self.conn_url = f'postgresql://{user}:{password}@{host}:{port}/{db}'

    @contextmanager
    def managed_cursor(self, cursor_factory=None):
        # Open a connection and cursor, hand the cursor to the caller,
        # and always close both when the with block exits
        self.conn = psycopg2.connect(self.conn_url)
        self.conn.autocommit = True
        self.curr = self.conn.cursor(cursor_factory=cursor_factory)
        try:
            yield self.curr
        finally:
            self.curr.close()
            self.conn.close()
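To try the managed cursor pattern without a running warehouse, here is a sketch that swaps Postgres for the standard library's sqlite3. The class SqliteWarehouseConnection, the function load_and_count, and the two-column exchange table are hypothetical stand-ins and do not exist in the repository.

```python
import sqlite3
from contextlib import contextmanager
from typing import Dict, Iterator, List


class SqliteWarehouseConnection:
    """Hypothetical stand-in for WarehouseConnection, backed by sqlite3."""

    def __init__(self, db_path: str = ':memory:') -> None:
        self.db_path = db_path

    @contextmanager
    def managed_cursor(self) -> Iterator[sqlite3.Cursor]:
        conn = sqlite3.connect(self.db_path)
        curr = conn.cursor()
        try:
            yield curr
            # Reached only if the with block finished without an exception.
            conn.commit()
        finally:
            # Runs on success and on error, so nothing is left open.
            curr.close()
            conn.close()


def load_and_count(rows: List[Dict[str, str]]) -> int:
    """Insert rows with named placeholders and return the row count."""
    with SqliteWarehouseConnection().managed_cursor() as curr:
        curr.execute('CREATE TABLE exchange (id TEXT, name TEXT)')
        # Each dict supplies the values for the :id and :name placeholders.
        curr.executemany(
            'INSERT INTO exchange (id, name) VALUES (:id, :name)', rows
        )
        curr.execute('SELECT COUNT(*) FROM exchange')
        return curr.fetchone()[0]
```

The caller never touches connect, commit, or close. That bookkeeping lives in one place, which is the benefit the contextmanager approach is after.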

2. Test

Tests are crucial if you want to be confident about refactoring code, adding new features, and code correctness. In this example, we will add 2 major types of tests.

- Unit test: To test if individual functions are working as expected. We test get_utc_from_unix_time with the test_get_utc_from_unix_time function.

- Integration test: To test if multiple systems work together as expected.

For the integration test we

- Mock the CoinCap API call using the mocker functionality of the pytest-mock library. We use fixture data at test/fixtures/sample_raw_exchange_data.csv as the result of the API call. This enables deterministic testing.

- Assert that the data we store in the warehouse is the same as we expected.

- Finally, the teardown_method truncates the local warehouse table. This is automatically called by pytest after the test_covid_stats_etl_run test function is run.

class TestBitcoinMonitor:
    def teardown_method(self, test_covid_stats_etl_run):
        with WarehouseConnection(
            **get_warehouse_creds()
        ).managed_cursor() as curr:
            curr.execute("TRUNCATE TABLE bitcoin.exchange;")

    def get_exchange_data(self):
        with WarehouseConnection(**get_warehouse_creds()).managed_cursor(
            cursor_factory=psycopg2.extras.DictCursor
        ) as curr:
            curr.execute(
                '''SELECT id,
                        name,
                        rank,
                        percenttotalvolume,
                        volumeusd,
                        tradingpairs,
                        socket,
                        exchangeurl,
                        updated_unix_millis,
                        updated_utc
                        FROM bitcoin.exchange;'''
            )
            table_data = [dict(r) for r in curr.fetchall()]
        return table_data

    def test_covid_stats_etl_run(self, mocker):
        mocker.patch(
            'bitcoinmonitor.exchange_data_etl.get_exchange_data',
            return_value=[
                r
                for r in csv.DictReader(
                    open('test/fixtures/sample_raw_exchange_data.csv')
                )
            ],
        )
        run()
        expected_result = [
            {"see github repo for full data"}
        ]
        result = self.get_exchange_data()
        assert expected_result == result
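The mocking idea can also be tried without a warehouse or pytest. The sketch below uses the standard library's unittest.mock (which pytest-mock wraps) in place of the mocker fixture. The ExchangeEtl class, the column names, and the fixture rows are made up for illustration and do not come from the repository.

```python
import csv
import io
from typing import Dict, List
from unittest import mock


class ExchangeEtl:
    """Toy pipeline: fetch rows, then store them in memory."""

    def __init__(self) -> None:
        self.stored: List[Dict[str, str]] = []

    def get_exchange_data(self) -> List[Dict[str, str]]:
        # Stands in for the real network call; tests should never reach it.
        raise RuntimeError('network call; should be mocked in tests')

    def run(self) -> None:
        self.stored.extend(self.get_exchange_data())


# Made-up fixture data, kept inline instead of in a CSV file.
FIXTURE_CSV = 'exchangeId,name\nbinance,Binance\nkraken,Kraken\n'


def run_with_fixture() -> List[Dict[str, str]]:
    """Run the toy ETL with the API call replaced by fixture rows."""
    fixture_rows = list(csv.DictReader(io.StringIO(FIXTURE_CSV)))
    etl = ExchangeEtl()
    # Replace the API call for the duration of the with block only.
    with mock.patch.object(
        ExchangeEtl, 'get_exchange_data', return_value=fixture_rows
    ):
        etl.run()
    return etl.stored
```

Because the input is fixed, the stored rows are the same on every run, which is what makes the assertion in an integration test deterministic.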

See How to add tests to your data pipeline article to add more tests to this pipeline. You can run tests using

make up # to start all your containers

make pytest

3. Scheduler

Now that we have the ETL script and tests set up, we need to schedule the ETL script to run every 5 minutes. Since this is a simple script, we will go with cron instead of setting up a framework like Airflow or Dagster. The cron job is defined at scheduler/pull_bitcoin_exchange_info

SHELL=/bin/bash

HOME=/

*/5 * * * * WAREHOUSE_USER=sdeuser WAREHOUSE_PASSWORD=sdepassword1234 WAREHOUSE_DB=finance WAREHOUSE_HOST=warehouse WAREHOUSE_PORT=5432 PYTHONPATH=/code/src /usr/local/bin/python /code/src/bitcoinmonitor/exchange_data_etl.py

This file is placed inside the pipelinerunner docker container’s crontab location. You may notice that we have hardcoded the environment variables. Not having the environment variables hardcoded in this file is part of future work.
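One possible way to avoid the hardcoding is to generate the crontab line from the environment when the container starts. The sketch below is an assumption about how that could be done, not something the repository implements; build_cron_line and WAREHOUSE_KEYS are hypothetical names.

```python
import shlex
from typing import Mapping

# The variables the ETL script reads, in the order used in the cron file.
WAREHOUSE_KEYS = (
    'WAREHOUSE_USER',
    'WAREHOUSE_PASSWORD',
    'WAREHOUSE_DB',
    'WAREHOUSE_HOST',
    'WAREHOUSE_PORT',
)


def build_cron_line(
    env: Mapping[str, str], schedule: str = '*/5 * * * *'
) -> str:
    """Build the cron entry from environment values instead of literals."""
    missing = [k for k in WAREHOUSE_KEYS if k not in env]
    if missing:
        raise KeyError(f'missing environment variables: {missing}')
    # shlex.quote protects values that contain spaces or shell characters.
    # Caveat: cron treats an unescaped % in a command as a newline, so
    # values containing % would need extra escaping not handled here.
    assignments = ' '.join(
        f'{k}={shlex.quote(env[k])}' for k in WAREHOUSE_KEYS
    )
    return (
        f'{schedule} {assignments} PYTHONPATH=/code/src '
        '/usr/local/bin/python /code/src/bitcoinmonitor/exchange_data_etl.py'
    )
```

Calling build_cron_line(os.environ) at container start and writing the result to the crontab file would keep the credentials in a single place, the env file.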

4. Presentation

Now that we have the code and scheduler set up, we can add checks and formatting automation to ensure that we follow best practices. This is what a hiring manager will be exposed to, when they look at your code. Ensuring that the presentation is clear, concise, and consistent is crucial.

4.1. Formatting, Linting, and Type checks

Formatting enables us to stay consistent with the code format. We use black and isort to automate formatting. The -S (--skip-string-normalization) flag tells black to leave string quotes as they are written, so the single quotes used throughout the code are preserved. Note that PEP 8 does not prefer single or double quotes; it only asks that you pick one and stay consistent.

Linting analyzes the code for potential errors and ensures that the code formatting is consistent. We use flake8 to lint check our code.

Type checking enables us to catch type errors wherever type hints are defined. We use mypy for this.

All of these are run within the docker container. We use a Makefile to store shortcuts to run these commands.
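As a small illustration of what type hints buy you, consider parsing a port number from an environment variable. The helper name parse_port is hypothetical. If the None check were removed, a type checker such as mypy would be expected to flag the int(raw) call, because raw may be None.

```python
from typing import Optional


def parse_port(raw: Optional[str], default: int = 5432) -> int:
    """Return the port as an int, falling back to a default when unset."""
    if raw is None:
        # Without this branch, raw could be None when it reaches int(),
        # which is the kind of mistake a type checker can report.
        return default
    return int(raw)
```

The mistake is caught when the checks run, before the script is ever scheduled, rather than as a TypeError inside the cron job.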

4.2. Architecture Diagram

Instead of having a long text, it is usually easier to understand the data flow with an architecture diagram. It does not have to be beautiful, but must be clear and understandable. Our architecture diagram is shown below.

4.3. README.md

The readme should be clear and concise. It’s a good idea to have sections for

- Description of the problem

- Architecture diagram

- Setup instructions

You can automatically format and test your code with

make ci # this command will format your code, run lint and type checks and run all your tests

After which, you can push it to your Github repository.

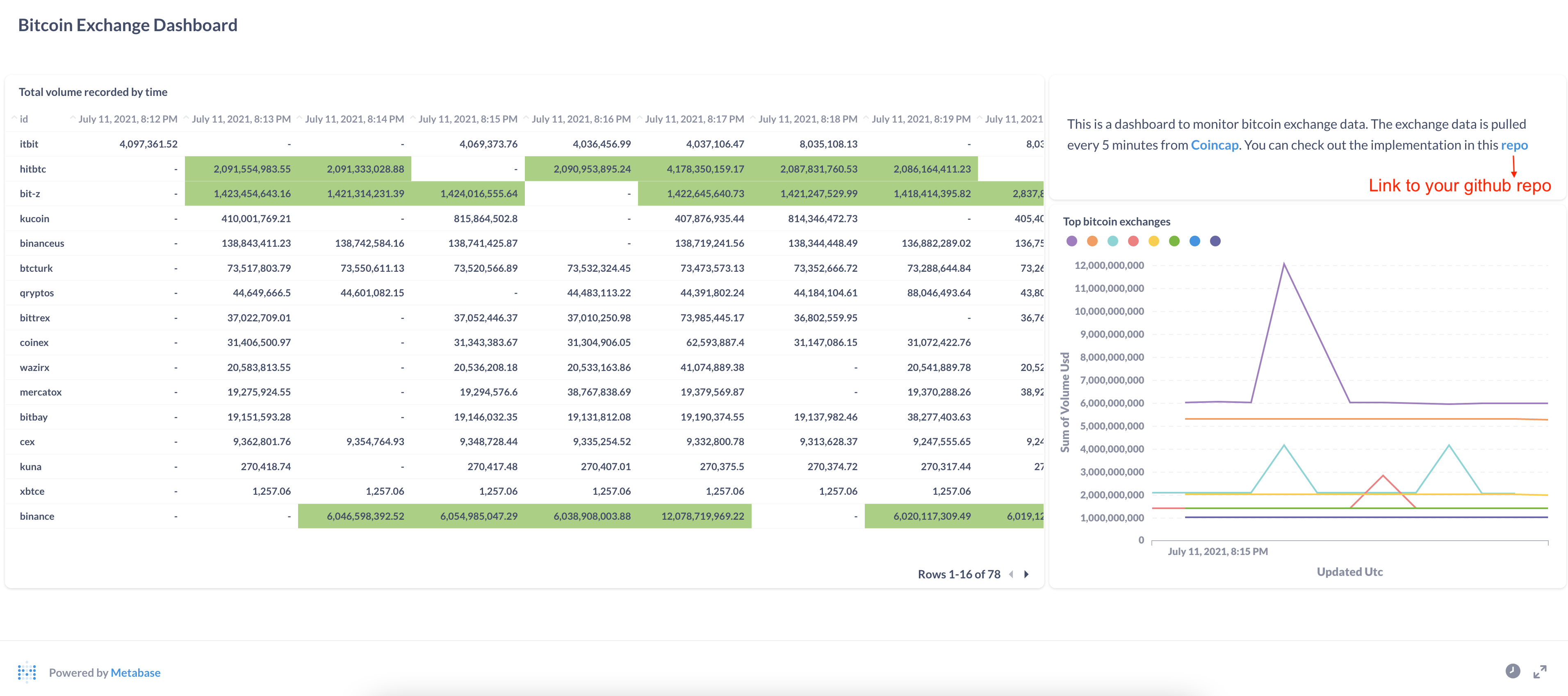

5. Adding Dashboard to your Profile

Refer to the Metabase documentation on how to create a dashboard. Once you create a dashboard, get its public link following the steps here. Create a hyperlink to this dashboard from your resume or LinkedIn page. You can also embed the dashboard as an iframe on any website.

A sample dashboard using bitcoin exchange data is shown below.

Depending on the EC2 instance type you choose, you may incur some cost. Use the AWS cost calculator to estimate the cost.

Future Work

Although this provides a good starting point, there is a lot of work to be done. Some future work may include

- Data quality testing

- Better scheduler and workflow manager to handle backfills, reruns, and parallelism

- Better failure handling

- Streaming data from APIs vs mini-batches

- Add system env variable to crontab

- Data cleanup job to remove old data, since our Postgres is running on a small EC2 instance

- API rate limiting
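As one example of the first item, a data quality check could reject rows with missing fields before they are loaded. This is only a sketch: find_bad_rows is a hypothetical helper, and treating name and updated as required fields is an assumption.

```python
from typing import Any, Dict, Iterable, List

# Assumption: these fields must be present and non-empty in every row.
REQUIRED_KEYS = ('name', 'updated')


def find_bad_rows(rows: Iterable[Dict[str, Any]]) -> List[str]:
    """Return one message per missing or empty required field."""
    problems = []
    for i, row in enumerate(rows):
        for key in REQUIRED_KEYS:
            if row.get(key) in (None, ''):
                problems.append(f'row {i}: missing {key}')
    return problems
```

The ETL could call this right after pulling data and skip the load, or log a warning, when the returned list is not empty.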

Tear down infra

After you are done, make sure to destroy your cloud infrastructure.

make down # Stop docker containers on your computer

make infra-down # type in yes after verifying the changes TF will make

Conclusion

Building data projects is hard. Getting hiring managers to read through your Github code is even harder. By focusing on the right things, you can achieve your objective. In this case, the objective is to show your data skills to a hiring manager. We do this by making it extremely easy for the hiring manager to see the end product, code, and architecture.

Hope this article gave you a good idea of how to design a data project to impress a hiring manager. If you have any questions or comments please leave them in the comment section below.

Further Reading

- Airflow scheduling

- Beginner DE project: batch

- Adding data tests

- API data pull using lambda

- dbt, getting started

References

- AWS EC2 connection issues

- Ubuntu docker install

- Crontab env variables

- Metabase documentation

- Coincap API


Thank you! Clean and concise blog, excellent work. It really helped me to understand some of the key areas within a data pipeline. Keep up the great work.

Awesome post, exactly what I was looking for, really clearly explained for a DE noob like me!

really impressed by your work and documentation! Keep up the good work!

Hi... lovely article (post).

Just curious, what tool did you use for the architecture diagram?

Thank you

Hi, I use google slides + nounproject(for icons)

Hey, great content and i wish i found it sooner! Im wondering how would i go into loading the response from the api into a dimensional model ? I mean, practically, how do i populate dimension and fact tables from data in a dataframe for example ? I really couldnt find any practical examples. I've thought of some ways but they didnt sound very efficient

hey, Glad you found the content. If I understand correctly, you are asking for a way to load data efficiently into fact/dimension tables. There are a few ways depending on your use case. Most commonly

See this article to go over a few ways to load data into data warehouse.

See this article to use CDC to load data into a dim table.

Let me know if this helps or if I misunderstood your question.

Thank you for the response! After further research and reading the links above i found out that what i wanted to know is how to go from flat table to a star schema. Some links that helped clear that up for me:

https://www.sqlservercentral.com/forums/topic/loading-150-million-flat-structure-data-into-star-schema

https://community.powerbi.com/t5/Desktop/Terrible-performance-when-normalizing-a-flat-table-in-Power/m-p/490265

Thank you again and keep up the amazing posts!

So I'm kinda confused about one thing. The code is already all written and we're only supposed to clone the repo and set up the stuff? And then we pass off this project as our own? I'm misunderstanding something right?

Yep this is supposed to be a framework that one can use to build their own project. I will make that clearer.

Nice project Joseph. One concern though. I've been able to run the containers and logged in to metabase however the actual data is missing from the database. The database shows in metabase but the data itself is missing. What could be wrong?

Thanks Gregory. If the data is showing up on metabase that means that the data is present in the database. Could you explain what you mean by data is missing from the database? and how you checked for data in the database? I can try to help with more info.

Hi Joseph, I have a similar problem to Gregory-Essuman. From Metabase, I can see that the table exchange with proper columns exists, but it has no data. It is the same (empty) when I'm checking the context on the data with the CLI of the image. Also, the logs of the pipelinerunner image are empty and I'm not sure if it should be like that. Would really appreciate any help with that. If this seems to you like some more complex problem and you would be willing to help me with that, I can also contact you somewhere also to make the communication more efficient.

Hi, Can you let me know what you see when you type the command crontab -l in your VM? I think the cron job that executes the script every 5min is not running.

I had a similar issue, and I think it relates to cron execution rules. I have created another script that calls the run() function from bitcoinmonitor.exchange_data_etl, put it into the src folder in the project, and called this new script from the cron and it worked.

Just in case someone runs into my issue....

I assumed Cron wasn't running because the first line of data would aways show the values from when I first spun up the containers. So I thought the updates weren't happening.. Went down a rabbit hole.... thought it was because I'm on windows...

anyways, FYI if you scroll down in the Metabase table, you'll see the updates are coming through but are just at the end of the table.. filtering to one exchange ID makes this clearer. also fyi , you can create a pivot table by selecting the 'visuals' option.

With that said..... my Logs for the 'pipelinerunner' container still do not show in Docker Desktop.... Probably would've helped my not go so far down the rabbit hole but oh well. learned alot in the proccess!

Hello, thanks a lot for this project. I will try it on my own. However, i have a question about the "Transform" here. In almost all ETL that I see, I never saw transformation step. What transformation is supposed to be? For example, in this project what would be the transformation step please?

Great content, thanks!

Very good tutorial, thanks! Metabase is not free - are there any alternatives you recommend?

thank you Petre. Metabase is open source and can be self hosted, which is what we are doing. They also have some paid plans https://www.metabase.com/pricing/ Hope this clear things up

Thanks for your reply Joseph. I am unable to connect from within the metabase container into the postgres one although I can connect into it using Pgadmin. The ports are mapped as expected within the postgres container. Do you have any idea what might be the issue? I'm getting from metabase: "Database name: Connection to :5432 refused. Check that the hostname and port are correct and that the postmaster is accepting TCP/IP connections."

--- Meanwhile found the answer to my question at: https://stackoverflow.com/a/56334518/10496082 --- :)

Hi Petre, You will have to set host as warehouse. Basically use this file https://github.com/josephmachado/bitcoinMonitor/blob/main/env to get the required fields when connecting to postgres from Metabase UI at http://localhost:3000 . lmk if that resolves your issue.

I was using the right credentials set within the env file but I was mixing things up regarding localhost. I got it working by checking the warehouse container address and pointing to that one instead of my machine's localhost. Thanks for your quick replies!

Had the exact same problem, so thanks for the stackoverflow link :)

you are welcome. Glad it worked :)

could you help out by running this? I'm getting some errors (like AWS :command not found). Any totarial vids ?

Very informative/useful, thanks for the content.

Hi! This is my first project of this type and I must have missed something. I get the following error when running the make infra-up command:

terraform -chdir=./terraform apply
╷
│ Error: error configuring Terraform AWS Provider: failed to get shared config profile, default
│
│   with provider["registry.terraform.io/hashicorp/aws"],
│   on main.tf line 12, in provider "aws":
│   12: provider "aws" {
│
╵
make: *** [Makefile:42: infra-up] Error 1

Hey, Did you get a chance to run make tf-init? If yes, what was the response? Also, do you have aws cli installed?

When I run make tf-init I get:

terraform -chdir=./terraform init
Initializing the backend...
Initializing provider plugins...
Terraform has been successfully initialized!

AWS-CLI version:

aws-cli/1.18.69 Python/3.8.10 Linux/5.15.0-53-generic botocore/1.16.19

Hmm Can you try removing this line https://github.com/josephmachado/bitcoinMonitor/blob/b073e31cf5072117787296b4f05f3afb84ac1ab6/terraform/main.tf#L14 and trying again?

It seems to be some version issue https://discuss.hashicorp.com/t/error-error-configuring-terraform-aws-provider-failed-to-get-shared-config-profile-default/39417/3

If you are using Windows can you try this https://github.com/hashicorp/terraform-provider-aws/issues/15361#issuecomment-699700674 ?

Please LMK if this helps.

Thanks! I removed this line earlier, installed a new version of aws-cli. Indeed, it looks like a version issue. I need to delve into it:

https://discuss.hashicorp.com/t/error-configuring-terraform-aws-provider-no-valid-credential-sources-for-terraform-aws-provider-found/35708 & what needs to be changed to resolve this https://registry.terraform.io/providers/hashicorp/aws/latest/docs/guides/version-4-upgrade#changes-to-authentication

the reply to make tf-init was:

terraform -chdir=./terraform init

Initializing the backend...

Initializing provider plugins...

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see any changes that are required for your infrastructure. All Terraform commands should now work.

If you ever set or change modules or backend configuration for Terraform, rerun this command to reinitialize your working directory. If you forget, other commands will detect it and remind you to do so if necessary.

Aws-cli version:

aws-cli/1.18.69 Python/3.8.10 Linux/5.15.0-53-generic botocore/1.16.19

Hi! I have a problem with the following line when calling the make cloud-metabase command:

The line in the Makefile that the error points to:

I removed the double dollar signs ($), and only insert one and now it seems ok. However, when I did it, the following problem occurred (make cloud-metabase):

The double $$ sign executes a command. Are you still having issues? Do you mind opening a Github Issue at https://github.com/josephmachado/bitcoinMonitor/issues ? It's easier to track there.

Learned a lot. Thanks!!

Hi Joseph, when I run "make up" command, it throws the following error:

What could be the cause of this error?

had the same error.

apparently there were 'carriage returns' that cron can't process. This might be due to me using Windows / WSL?

Anyways these links helped: https://stackoverflow.com/questions/65790304/cron-bad-minute-errors-in-crontab-file-cant-install https://knowledge.digicert.com/solution/vmc-convert-cr-lf-to-lf-before-submitting-svg

TY for reporting! I use mac, so unable to test on windows atm.

When I try and run make up the following error occurs: "Error response from daemon: Conflict. The container name "/dashboard" is already in use by container "961d9b2f11ad17b8e048ec631a4a60d9cfceab07839d54e2b70344c946cda5bd". You have to remove (or rename) that container to be able to reuse that name. make: *** [up] Error 1"

Could you try

docker stop 961d9b2f11ad17b8e048ec631a4a60d9cfceab07839d54e2b70344c946cda5bd && docker rm 961d9b2f11ad17b8e048ec631a4a60d9cfceab07839d54e2b70344c946cda5bd

and try the make up command again? Please LMK if this helps.

Joseph, I'm curious if there are specific reasons why you prefixed "1-" to the "1-setup-exchange.sql" scripts? Also, is there a reason you created a 'bitcoinmonitor' directory to house the utils and other .py scripts, instead of just having it in "src"?

Those sql scripts are executed when our warehouse database starts, see this, its a postgres docker feature.

The naming conventions is usually 1-name of script, 2-name of script, etc since the postgres container will execute them in alphabetical order and this is a way to control order of script execution. In this case since we only have one script it does not matter.

I like the structure project_name/src/application_name. This way I can have multiple application as needed. In this case there is only one application.

Hope this helps. LMK if you have more questions :)

Got it, that makes sense, thank you tremendously! One part I was curious on was what you would recommend as the best-practice for adding in more data (from other endpoints)?

My thought process was to:

Does that make sense or would you approach it differently?

Hey Joseph, fantastic tutorial and framework for projects! I have a quick question: when I first ran "make up" to test locally, metabase @ :3000 seemed to work fine, but when I closed it via "make down" and tried to get it back up the localhost:3000 doesn't refresh or load at all. When I run "curl http://localhost:3000/api/health" it states "Empty reply from server".

I'm using MacOS with an M1 chip and I'm wondering if its a mac issue - any suggestions?

Hi, Thank you for the kind words. If I am understanding correctly, the issue is that localhost:3000 (metabase) does not seem to run if you restart it (using make up && make down && make up)? It usually takes about a min for metabase to come up, can you try hitting it after a min. Also I'm not familiar with its health API, did a quick search on their docs and couldn't find them, can you provide a link, I can take a look at this issue.

No worries, I attribute it to some fault of my own but it works fine when deploying it to prod, appreciate you

Appreciate the fine walkthrough Joseph. I am just having a bit of a challenge making this work with a different API. The framework is exactly the same except I have changed the .SQL to create a slightly different table, as well as modified the exchange_data_etl.py where the data still creates an output in the same format as yours but with different data. The table is created correctly in Metabase but the data never loads.

Could it be something I need to alter in the postgres image? Or if you have any other troubleshooting tips that would be grand.

Thanks again!

hi, The main reason is usually that the data type/number of columns that your script sends do not match the existing table. There are 2 ways to test this

- The more interactive way is to sh into the container and run the script, and you will see the stack trace. You can sh into the container using make shell in the project directory. See this for details.

- The other option is to run the ETL and see the docker logs. Run docker ps to get the pipelinerunner docker id, then run docker logs <your-container-id> to check the error logs from the script.

I'd start with option 1 as it is the easiest to test out.

LMK if this helps or if you have more questions.

Nice project! I'm current working through this myself to understand what's going on.

Regarding the t2.micro type for EC2, is this really enough? I set my Docker container running on this instance type and eventually lost connection (and still can't reconnect). From looking close, I believe the free tier instances only have 1 Gb memory.

Hi Aaron, Are you using the exact AMI as shown in https://www.startdataengineering.com/post/data-engineering-project-to-impress-hiring-managers/#6-deploy-to-production ? I was able to get my docker containers running. You can also use the screen command which will help prevent the ssh connection from dying. Hope this helps. LMK if you are still facing issues.

docker exec pipelinerunner yoyo develop --no-config-file --database postgres://postgres:1234@localhost:5432/postgres ./migrations
Traceback (most recent call last):
  File "/usr/local/bin/yoyo", line 8, in <module>
    sys.exit(main())
  File "/usr/local/lib/python3.9/site-packages/yoyo/scripts/main.py", line 304, in main
    args.func(args, config)
  File "/usr/local/lib/python3.9/site-packages/yoyo/scripts/migrate.py", line 284, in develop
    backend = get_backend(args, config)
  File "/usr/local/lib/python3.9/site-packages/yoyo/scripts/main.py", line 266, in get_backend
    return connections.get_backend(dburi, migration_table)
  File "/usr/local/lib/python3.9/site-packages/yoyo/connections.py", line 79, in get_backend
    backend = backend_class(parsed, migration_table)
  File "/usr/local/lib/python3.9/site-packages/yoyo/backends/base.py", line 166, in __init__
    self._connection = self.connect(dburi)
  File "/usr/local/lib/python3.9/site-packages/yoyo/backends/core/postgresql.py", line 41, in connect
    return self.driver.connect(**kwargs)
  File "/usr/local/lib/python3.9/site-packages/psycopg2/__init__.py", line 122, in connect
    conn = _connect(dsn, connection_factory=connection_factory, **kwasync)
psycopg2.OperationalError: could not connect to server: Connection refused
    Is the server running on host "localhost" (127.0.0.1) and accepting TCP/IP connections on port 5432?
could not connect to server: Cannot assign requested address
    Is the server running on host "localhost" (::1) and accepting TCP/IP connections on port 5432?

make: *** [Makefile:57: warehouse-migration] Error 1

I am getting this error when I run the make up command. I have checked my postgres credentials and they are correct.

Can you please run docker ps and paste the output here. It looks like the container isn't running or the port isn't open.

Thanks for this project, quite detailed. Please how do you schedule the cron job? I can't see it in the make file?

You are welcome. The schedule is defined here https://github.com/josephmachado/bitcoinMonitor/blob/main/scheduler/pull_bitcoin_exchange_info

And is copied into the docker file here https://github.com/josephmachado/bitcoinMonitor/blob/2ef436995a2bd1be626aeb52ecd89a708e041f74/containers/pipelinerunner/Dockerfile#L19

And the cron is started here https://github.com/josephmachado/bitcoinMonitor/blob/2ef436995a2bd1be626aeb52ecd89a708e041f74/containers/pipelinerunner/Dockerfile#L31

I need to write an article about setting up docker