Setting up a local development environment for Python data projects using Docker

- 1. Introduction

- 2. Set up

- 3. Reproducibility

- 4. Developer ergonomics

- 5. Conclusion

- 6. Further reading

- 7. References

1. Introduction

Data projects usually involve multiple systems, which makes local development challenging. If you have struggled with

- Setting up a local development environment for your data projects

- Trying to avoid the “this works on my computer” issue

then this post is for you. In this post, we go over setting up a local development environment using Docker. By the end of this post, you will have the skills to design and set up a local development environment for your data projects.

2. Set up

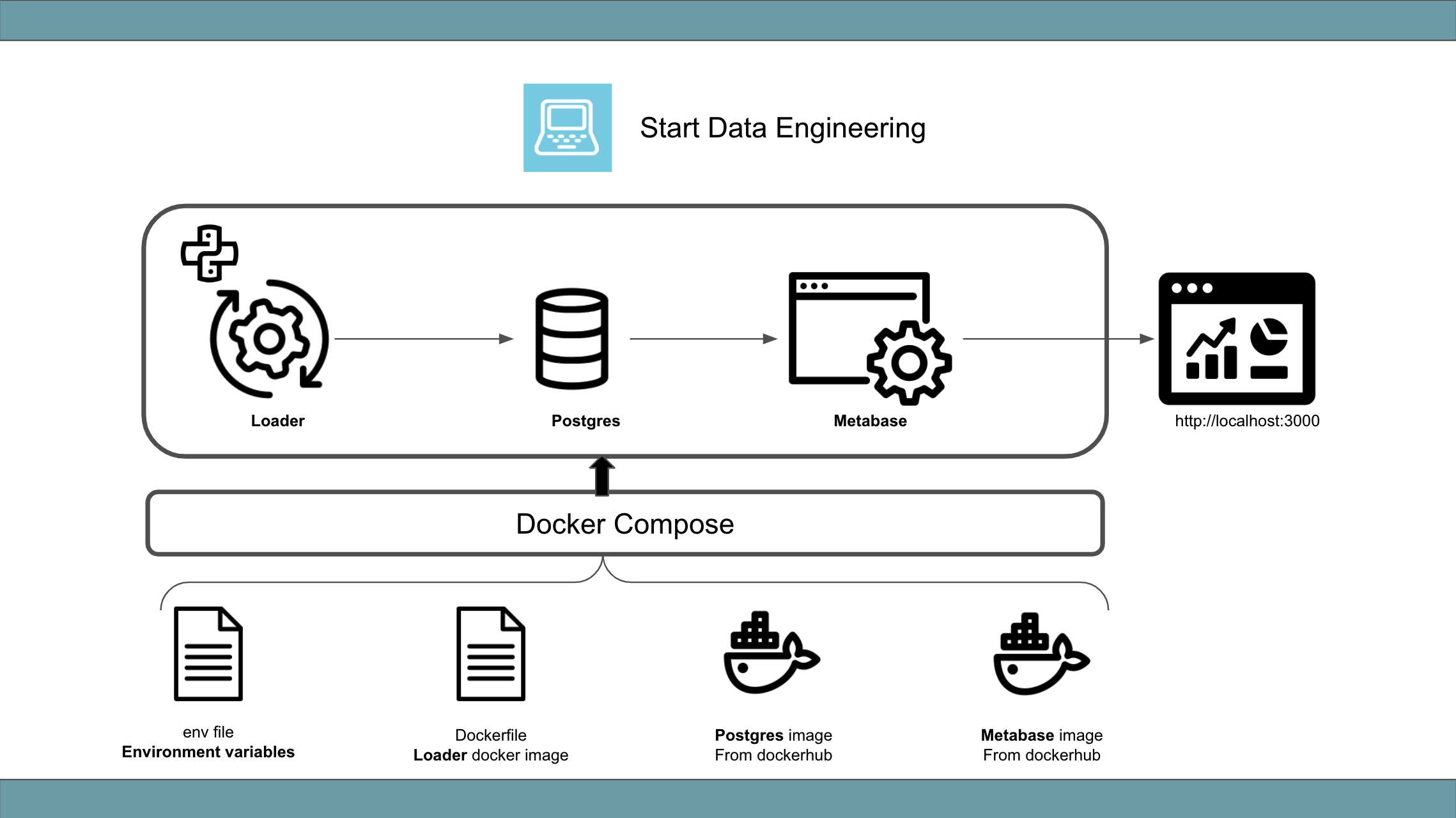

Our project involves a python process loading data into a warehouse as shown below.

To run the code, you will need

- Docker and Docker Compose

- git

Clone the git repo and run the ETL as shown below.

```bash
git clone https://github.com/josephmachado/local_dev.git
cd local_dev
make up
make ci # run tests and format code
make run-etl # run the ETL process
```

Now you can log into the warehouse DB using `make warehouse` and see the results of the ETL run, as shown below.

```sql
select * from housing.user;
-- You will see ten records
-- Quit psql
\q
```

You can log into the Metabase dashboard by going to localhost:3000. You can stop the Docker containers using the `make down` command.

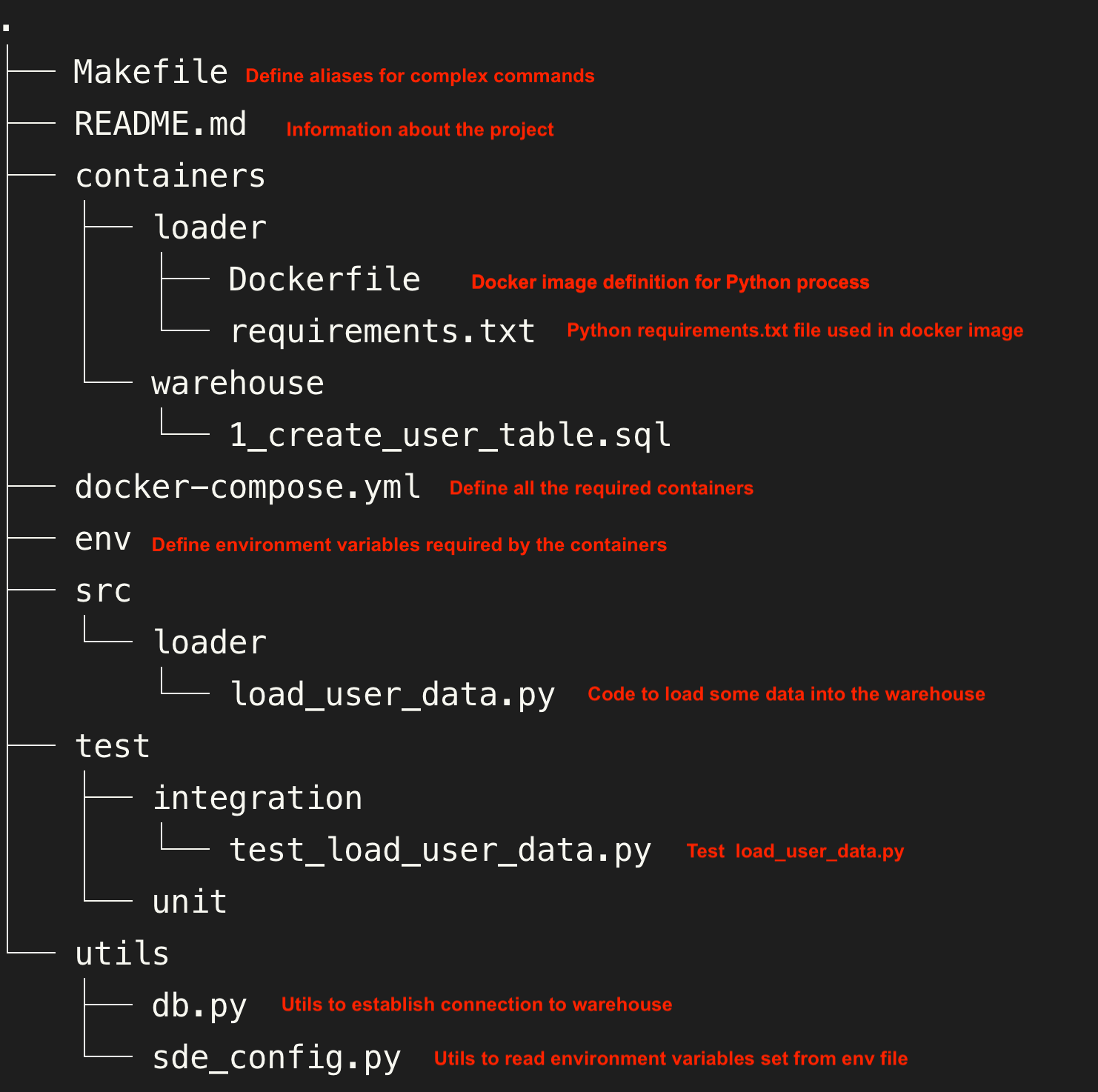

The project structure is as shown below.

3. Reproducibility

3.1. Docker

Docker provides a way to keep the development environment consistent between local and production systems.

Docker’s main concepts are

- Image: An OS template with your desired settings.

- Container: The images run within containers.

There are two types of images.

- Prebuilt image: These are the images on Docker Hub. Most software has its own official image. Note that anyone can create and upload an image to Docker Hub.

- Custom image: One can also build an image to fit their specific requirements. These images can be built from scratch or built on top of existing images.

Our project uses the official images for Postgres and Metabase and a custom image to run Python and load data into Postgres.

We defined our custom image at ./local_dev/containers/loader/Dockerfile. We can build images and run them in containers as shown below.

```bash
# Build an image named loader from the file named Dockerfile in ./containers/loader/
docker build ./containers/loader/ -t loader

# Run our image in a container named local_loader
docker run --name local_loader -d loader

# Check the running container
docker ps

# Run a second container, overriding the image's default CMD with sleep 100
docker run -d loader sleep 100

# You'll see two running containers; the second one stops after 100 seconds
docker ps

# exec runs a command in an existing container
docker exec -ti local_loader echo 'Running on local_loader container'

# Stop and remove the container
docker stop local_loader
docker rm local_loader
```

Command definitions

- build: Used to build an image from a Dockerfile.

- run: Used to run the image within a container. By default, the command defined by CMD in the Dockerfile will be used. You can override this by specifying any command (e.g. `sleep 100`).

- exec: Used to execute a command in an already running container.

- stop & rm: Used to stop a container and remove a container.

The steps used to build the Docker image are in the Dockerfile. They are executed in the order that they are specified. The main commands are shown below.

- FROM: Used to specify the base image. We are using the python image, version 3.9.5.

- WORKDIR: Used to specify the directory from which subsequent commands will be run.

- ENV: Used to set the environment variables for our Docker image.

- COPY: Used to copy data from our local file system into the Docker image. We copy our Python requirements.txt into our Docker image.

- RUN: Used to execute commands when building the Docker image.

- CMD: Used to specify the default command to be run when this image is used in a container.

- ENTRYPOINT: Used to specify an executable (often a shell script) to be run each time a container is started from this image. If no ENTRYPOINT is set, shell-form commands are run with `/bin/sh -c`.

- EXPOSE: Used to document the port the container listens on. EXPOSE alone does not publish the port to your local machine; that requires `-p` with `docker run` or the `ports` section in Docker Compose. By default, no ports are published.

ENTRYPOINT and CMD may seem very similar, but they are different. The ENTRYPOINT is always executed when starting a new container (unless you override it with `docker run --entrypoint`), while CMD can be overridden by passing a command to `docker run`.
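To make the difference concrete, here is a small Python model of how Docker combines an exec-form (JSON array) ENTRYPOINT and CMD with any arguments passed to `docker run`. It is a sketch under simplifying assumptions: shell-form instructions and the `--entrypoint` flag are not modeled, and the `entrypoint.sh` and `main.py` names are hypothetical.

```python
from typing import List, Optional


def resolve_container_command(
    entrypoint: Optional[List[str]],
    cmd: Optional[List[str]],
    run_args: Optional[List[str]] = None,
) -> List[str]:
    """Approximate the argv Docker runs when a container starts.

    Models exec-form ENTRYPOINT/CMD only; shell form and
    `docker run --entrypoint` are intentionally left out.
    """
    # Arguments given to `docker run <image> ...` replace CMD entirely.
    args = run_args if run_args else (cmd or [])
    # ENTRYPOINT is kept; the (possibly overridden) CMD is appended to it.
    return (entrypoint or []) + args


# No ENTRYPOINT: CMD is the command, and run arguments replace it.
print(resolve_container_command(None, ["python", "main.py"]))
# ['python', 'main.py']
print(resolve_container_command(None, ["python", "main.py"], ["sleep", "100"]))
# ['sleep', '100']

# With an ENTRYPOINT script, CMD only supplies its default arguments.
print(resolve_container_command(["./entrypoint.sh"], ["python", "main.py"], ["sleep", "100"]))
# ['./entrypoint.sh', 'sleep', '100']
```

This is why `docker run -d loader sleep 100` works on our image: the `sleep 100` arguments take the place of CMD.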

3.2. Docker Compose

Docker Compose provides a way to spin up multiple containers using a single YAML file.

```bash
docker compose --env-file env up --build -d # spin up services defined in docker-compose.yml
docker ps # you will see 3 containers
docker compose --env-file env down # spin down all the services
```
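With `--env-file env`, Compose reads the KEY=VALUE pairs in that file and substitutes them into `${VAR_NAME}` placeholders in docker-compose.yml. Below is a rough Python sketch of that idea, using `string.Template` as a stand-in for Compose's own interpolation. The variable names in the sample env file are assumptions for illustration, not necessarily the ones in this project's env file.

```python
from string import Template
from typing import Dict


def parse_env_file(text: str) -> Dict[str, str]:
    """Parse KEY=VALUE lines, skipping blank lines and # comments.

    Simplified: quoted values and other syntax Compose accepts are not handled.
    """
    env: Dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        env[key.strip()] = value.strip()
    return env


def interpolate(value: str, env: Dict[str, str]) -> str:
    """Replace ${VAR_NAME} placeholders with values from the env file.

    Unlike Compose, which warns and substitutes an empty string for an unset
    variable, Template.substitute raises KeyError. Forms such as
    ${VAR:-default} are not supported here.
    """
    return Template(value).substitute(env)


# Hypothetical env file contents (variable names are assumptions).
env_text = """
# warehouse credentials
POSTGRES_USER=sdeuser
POSTGRES_PASSWORD=sdepassword1234
POSTGRES_DB=warehouse
"""

env = parse_env_file(env_text)
print(interpolate("${POSTGRES_USER}", env))  # sdeuser
print(interpolate("${POSTGRES_DB}", env))  # warehouse
```

Keeping credentials in the env file means the same docker-compose.yml can be reused with different values.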

Let’s look at the different parts of the docker-compose file.

- networks: Networks allow Docker containers to communicate with each other. All the containers started using a docker-compose file join a default network.

- services: Specifies all the containers to be started using the docker-compose.yml file.

- image: Used to specify an image name from Docker Hub, or, in combination with the `build` section, to name the image built from our image definition (Dockerfile).

- build: Used to specify the location of a custom image definition. The `context` specifies the directory where the image definition lives.

- container_name: Used to specify a name for the container.

- environment: Used to specify environment variables when starting a container. We use `${VAR_NAME}` as the value, since `VAR_NAME` will be pulled from the env file specified with `--env-file env`.

- volumes: Used to sync files between our local file system and the Docker container. Any change made on either side is reflected on the other.

- ports: Used to specify which ports to open to our local machine. The `5432:5432` port mapping in the Postgres container maps `hostPort:containerPort`. We can now reach the Postgres container via the host port, 5432.
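The port mapping is what lets code running directly on your machine reach the warehouse at localhost:5432, while other containers on the Compose network reach it by service or container name instead. A minimal sketch of a connection config that reads environment variables with host-friendly defaults follows. The `WAREHOUSE_*` variable names and the `WarehouseConnection` class are hypothetical; the default credentials reuse the ones that appear in the project's `make warehouse` command.

```python
import os
from dataclasses import dataclass
from urllib.parse import quote


@dataclass
class WarehouseConnection:
    """Hypothetical container for warehouse connection settings."""

    user: str
    password: str
    db: str
    host: str
    port: int

    def url(self) -> str:
        # Percent-encode credentials so special characters do not break the URI.
        user = quote(self.user, safe="")
        password = quote(self.password, safe="")
        return f"postgresql://{user}:{password}@{self.host}:{self.port}/{self.db}"


def get_warehouse_connection() -> WarehouseConnection:
    """Read settings from (hypothetical) env vars, with defaults for the host."""
    return WarehouseConnection(
        user=os.getenv("WAREHOUSE_USER", "sdeuser"),
        password=os.getenv("WAREHOUSE_PASSWORD", "sdepassword1234"),
        db=os.getenv("WAREHOUSE_DB", "warehouse"),
        # On the host, the 5432:5432 mapping exposes Postgres on localhost.
        # Inside another container on the Compose network, set this to the
        # warehouse service or container name instead of localhost.
        host=os.getenv("WAREHOUSE_HOST", "localhost"),
        port=int(os.getenv("WAREHOUSE_PORT", "5432")),
    )


print(get_warehouse_connection().url())
# With no WAREHOUSE_* variables set:
# postgresql://sdeuser:sdepassword1234@localhost:5432/warehouse
```

The resulting URI can be handed to a Postgres driver; for example, `psycopg2.connect` accepts a libpq connection URI as its `dsn`.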

4. Developer ergonomics

4.1. Formatting and testing

Having consistent formatting and linting helps with keeping the code base clean. We use the following modules.

We also use pytest to run all the tests inside the ./test folder. See the Makefile for the commands to run these.
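For readers new to pytest, here is a minimal sketch of what a test file in ./test might look like. The file name and the `clean_user_record` transform are hypothetical and not taken from the repo; in a real project the function would live in the ETL code and be imported into the test file.

```python
# test/test_user_transform.py (hypothetical file and function names)
from typing import Dict


def clean_user_record(record: Dict[str, str]) -> Dict[str, str]:
    """Hypothetical transform: trim whitespace and lowercase the email."""
    cleaned = {key: value.strip() for key, value in record.items()}
    cleaned["email"] = cleaned["email"].lower()
    return cleaned


# pytest collects functions whose names start with test_ in files named test_*.py.
def test_clean_user_record_normalizes_email() -> None:
    record = {"name": "  Ada ", "email": " Ada@Example.COM "}
    assert clean_user_record(record) == {"name": "Ada", "email": "ada@example.com"}


def test_clean_user_record_strips_other_fields() -> None:
    record = {"name": "Bob", "email": "bob@example.com", "city": " Austin"}
    assert clean_user_record(record)["city"] == "Austin"
```

Running `python -m pytest test/` would discover and run both tests; plain `assert` statements are all pytest needs to report failures.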

4.2. Makefile

A Makefile allows us to use shortcuts instead of typing out long commands, which makes working with these tools simpler. One can define a command in the Makefile and invoke it using the `make <target>` syntax.

Take a look at our Makefile. We can spin up all the containers, run formatting, checks, and tests, and spin down the containers as shown below.

```bash
make up
make ci # run tests and format code
make down
```

5. Conclusion

I hope this article gives you a good understanding of setting up your local development environment. To recap, we saw

- Creating docker images

- Using docker compose to spin up multiple docker containers

- Automating static type checks, formatting, lint checks, and testing

- Using Makefile to make running complex commands simpler

The next time you are setting up a data project or want to improve your team’s development velocity, set up reproducible environments while focussing on developer ergonomics using the techniques shown above.

If you have any questions or comments, please leave them in the comment section below.

6. Further reading

- Choosing the image for your data components

- Adding tests to data pipelines

- Adding CI test

- Curious how the Postgres user table gets created? See the Initialization script section in this page

7. References


"Curious how the postgres user table gets created?, see the Initializaion script section in this page"

Does the creation of the database happen because there is a *.sql file in local_dev/containers/warehouse/ and, in the .yml file, you included `volumes: - ./containers/warehouse:/docker-entrypoint-initdb.d`?

I am thinking this is why (from Docker Site)

"If you would like to do additional initialization in an image derived from this one, add one or more *.sql, *.sql.gz, or *.sh scripts under /docker-entrypoint-initdb.d (creating the directory if necessary). After the entrypoint calls initdb to create the default postgres user and database, it will run any *.sql files, run any executable *.sh scripts, and source any non-executable *.sh scripts found in that directory to do further initialization before starting the service. "

And since everything is started with docker compose in the Makefile, it would include the above.

Do you have to run `make warehouse` in order to get the warehouse up?

That is correct! The official Postgres Docker image offers functionality where any script placed within the container's docker-entrypoint-initdb.d directory is run when we start the Docker container. We use a Docker volume mount to make the setup SQL scripts available at the Postgres container's docker-entrypoint-initdb.d location (https://github.com/josephmachado/local_dev/blob/aa167edc411fd5e61f97374515dfa95b1b7c8b03/docker-compose.yml#L12).

No, we do not have to run `make warehouse` to run the setup script, since the setup scripts are executed when we create the Docker containers. The `make warehouse` command simply connects to an already set-up Postgres container. Hope this helps. LMK if you have any questions.
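The Docker Hub description quoted above boils down to a per-file decision, and the same image documentation adds one important condition: these scripts only run when the container starts with an empty data directory, so an existing database is left untouched. Here is a small Python model of that behavior. It is a sketch, not the image's real entrypoint (which is a shell script), and the file names in the usage example are hypothetical.

```python
from typing import Dict, List, Tuple


def plan_init_scripts(
    files: Dict[str, bool], data_dir_empty: bool = True
) -> List[Tuple[str, str]]:
    """Model how the Postgres image treats files in /docker-entrypoint-initdb.d.

    `files` maps each file name to whether it is executable.
    Returns (name, action) pairs in the order they would be handled.
    """
    if not data_dir_empty:
        # An existing database is left untouched, so no init scripts run.
        return []
    plan: List[Tuple[str, str]] = []
    # The image runs files in sorted name order using the current locale;
    # Python's code-point sort can differ from that for mixed-case names.
    for name in sorted(files):
        if name.endswith(".sh"):
            action = "execute" if files[name] else "source"
        elif name.endswith((".sql", ".sql.gz")):
            action = "run with psql"
        else:
            action = "ignore"
        plan.append((name, action))
    return plan


print(plan_init_scripts({"2-seed.sh": True, "1-setup.sql": False, "notes.txt": False}))
# [('1-setup.sql', 'run with psql'), ('2-seed.sh', 'execute'), ('notes.txt', 'ignore')]
```

The `data_dir_empty` flag is the part that most often surprises people: if Postgres data is persisted from an earlier run, changes to the init scripts have no effect until that data is removed.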

It took a lot, but I was able to run everything from my Windows computer. But what am I supposed to look at in Metabase? It asks me to create a profile and then everything is empty in it... I wish you made YouTube videos for these tutorials.

Hi NF N, the post helps you set up a local dev environment. You can create a user profile (with a random email) for Metabase with these connection credentials https://github.com/josephmachado/local_dev/blob/main/env and be able to connect to the warehouse. Hope it helps. LMK if you have more questions.

Is it possible for you to add Windows commands for using make? make is not working on Windows.

Hi Shashidhar, you will need to install Cygwin and then install make in Cygwin.

Hope this helps.

Quick question: Is it possible to run the 'load_user_data.py' locally instead of via docker (with the postgres db deployed to docker)?

I attempted it but am a little stuck with a few errors like:

Would you have a recommendation on how to run the load script locally to insert data into the docker postgres db?

You can. The load_user_data.py file gets the Postgres credentials using this file, which uses the env variables set in the container. These are the connection variables. You can hard-code the connection variables in sde_config.py and run load_user_data.py. Hope this helps. LMK if you have any questions.

I'm stuck trying to run `make warehouse`.

```
docker exec -ti warehouse psql postgres://sdeuser:sdepassword1234@localhost:5432/warehouse
the input device is not a TTY. If you are using mintty, try prefixing the command with 'winpty'
make: *** [Makefile:14: warehouse] Error 1
```

Hi, could you run the command `docker ps` and check that the warehouse container is running?

I've got the following error, can someone help?

Warehouse container: FATAL: database "sdeuser" does not exist

I've restarted all containers and removed the volumes but still the database throws the same error

Maybe it has something to do with the initialization scripts? Somehow the Postgres image really wants to do something with the "sdeuser" database. The image may use the username as the database name, but since my env file seems to work correctly, I'm not sure why the image is defaulting to the username.

Also, when I'm in Metabase, I cannot find the warehouse if I type in "localhost" as host and "5432" as port.