How to update millions of records in MySQL?

- Introduction

- Setup

- Problems with a single large update

- Updating in batches

- Conclusion

- Further reading

Introduction

When updating a large number of records in an OLTP database, such as MySQL, you have to be mindful about locking the records. If those records are locked, they will not be editable(update or delete) by other transactions on your database. One common approach used to update a large number of records is to run multiple smaller update in batches. This way, only the records that are being updated at any point are locked.

If you are wondering

How to update millions of records without significantly impacting user experience ?

How does the update command lock records ?

Then this post is for you. Note that this is not the only way. There are other approaches such as swapping tables, running a standard update depending on your transaction isolation levels, etc. The usages of these approaches depend on your use case. For our use case, let’s assume we are updating a user table which, if locked for a significant amount of time (say > 10s), can significantly impact our user experience and is not ideal.

Setup

We are going to be using docker to run a MySQL container and python faker library to generate fake data.

Let’s set up a project folder and generate some fake user data.

mkdir lock_update && cd lock_update

Create a fake data generator python script named gen_fake.py.

#!/usr/bin/env python3

import argparse

import random

from faker import Faker

def gen_user_data(file_name: str, num_records: int, seed: int = 1) -> None:

fake = Faker("en_US")

with open(file_name, "w") as file1:

for i in range(1, num_records + 1):

file1.write(

f"{seed + i},{fake.name()},{random.randint(0,1)},{fake.state()},{fake.country()}\n"

)

if __name__ == "__main__":

parser = argparse.ArgumentParser(description="Generate some fake data")

parser.add_argument(

"--file-name",

type=str,

default="fake_user.csv",

help="file name to store fake data",

)

parser.add_argument(

"--num-records", type=int, default=100, help="Num of records to generate"

)

parser.add_argument("--seed", type=int, default=0, help="seed")

args = parser.parse_args()

gen_user_data(

file_name=args.file_name, num_records=args.num_records, seed=args.seed

)

The gen_fake.py script generates fake data of the format id,name,is_active flag,state,country per row. Grant permissions to the fake data generator and generate 10 million rows as shown below.

chmod u+rwx gen_fake.py

# run 5 parallel processes, each generating 2million records as shown below

./gen_fake.py --file-name user_data_1.csv --num-records 2000000 --seed 0 & ./gen_fake.py --file-name user_data_2.csv --num-records 2000000 --seed 2000000 & ./gen_fake.py --file-name user_data_3.csv --num-records 2000000 --seed 4000000 & ./gen_fake.py --file-name user_data_4.csv --num-records 2000000 --seed 6000000 & ./gen_fake.py --file-name user_data_5.csv --num-records 2000000 --seed 8000000

mkdir data

cat user_data_1.csv user_data_2.csv user_data_3.csv user_data_4.csv user_data_5.csv >> ./data/user_data_fin.csv # combine data

rm user_data_1* user_data_2* user_data_3* user_data_4* user_data_5*

Let’s start a MySQL container with the data we generated above and log into it.

docker run -d -p 3306:3306 --name mysql-updates -v "$(pwd)"/data:/var/lib/data -e MYSQL_ROOT_PASSWORD=Password1234 mysql:8.0

docker exec -it mysql-updates bash # open docker shell

mysql -h 127.0.0.1 -P 3306 -u root --local-infile=1 -p # password is Password1234

Create a database called updates and a simple user table. Insert the data generated into the table as shown below.

create database updates;

use updates;

drop table if exists user;

CREATE TABLE user (

user_id int,

name varchar(100),

is_active boolean,

st varchar(100),

country varchar(100),

PRIMARY KEY (user_id)

);

SET GLOBAL local_infile=1;

LOAD DATA LOCAL INFILE '/var/lib/data/user_data_fin.csv' INTO TABLE user FIELDS TERMINATED BY ',';

-- time taken to load 1 min 22.36 sec

select count(*) from user;

-- should be 10 million

Problems with a single large update

Let’s say we work for an e-commerce website. A bug made it to production and now we have st(state) field set incorrectly for users with ids between 3 million(3000000) and 8 million(8000000).

We have to update 5 million records out of a total of 10 million records to have the st value set to NY. A constraint we are working with is to keep downtime for users as small as possible. If you are a user and your record is locked you will not be able to modify your data. Let’s see how user experience may be affected by a large update holding a lock on their records.

In your sql terminal run this update command.

update user set st = 'NY' where user_id between 3000000 and 8000000;

-- 2 min 13.46 sec

Simultaneously, in another sql terminal, try to update a user record that is locked by the above update. We use the where user_id = 3300000 to select a row that is locked by the above update.

docker exec -it mysql-updates bash

mysql -h 127.0.0.1 -P 3306 -u root -p # password is Password1234

update updates.user set name = 'Amos Burton' where user_id = 3300000;

-- ERROR 1205 (HY000): Lock wait timeout exceeded; try restarting transaction

This error happens because our large update has locked the record with user_id = 3300000 and this prevents any other transactions from modifying the locked data.

The second update will wait by default 50 seconds (set as innodb_lock_wait_timeout) before timing out. You can check this setting using the below query

show variables like 'innodb_lock_wait_timeout';

What if instead of updating 5 million records in one update statement, we only updated a few user records at a time ? This way, we can keep the number of records being locked at any given time small and reduce the wait time for other updating transactions.

Updating in batches

Let’s run updates in batches of 50,000 records. Let’s see how we can update our user table in chunks.

In our user table, we have a primary column which is a monotonically increasing id. We can use this to chunk our updates into batches of 50,000 user records. Let’s assume we want to set the st record to NJ this time.

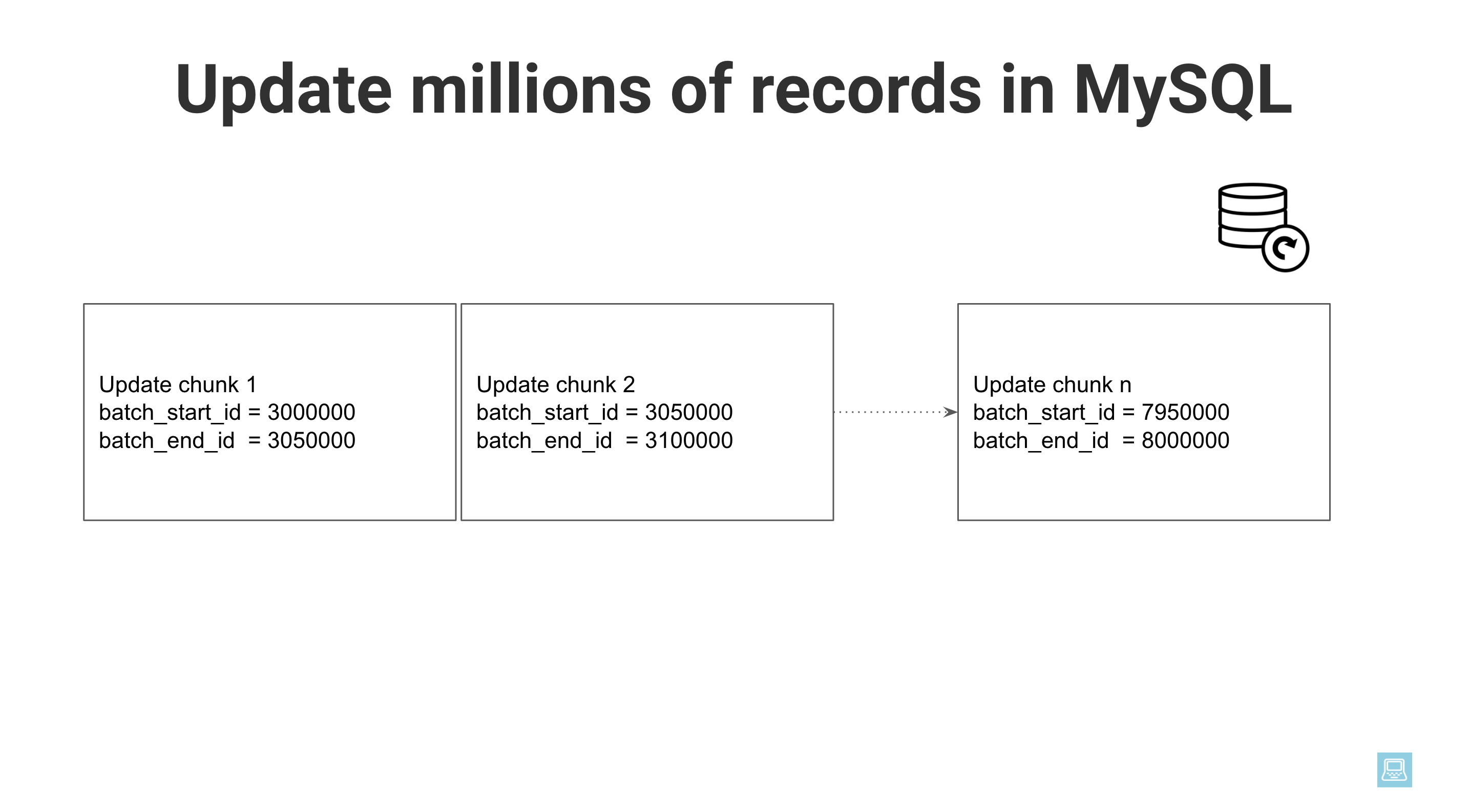

In order to run updates in a while loop we need to create a stored procedure to encapsulate this logic. The looping logic is straightforward. We create a sliding window defined by starting id of batch_start_id and ending id of batch_end_id which gets moved up by batch_size for each run.

Note that we use the DELIMITER $$ to set the statement delimiter to $$. This allows us to use the default MySQL delimiter ; within the stored procedure definition. You can see that after the END$$ command we set the delimiter back to ;.

USE updates;

DROP PROCEDURE IF EXISTS BatchUpdate;

DELIMITER $$

CREATE PROCEDURE BatchUpdate(

start_id INT,

end_id INT,

batch_size INT)

BEGIN

DECLARE batch_start_id INT DEFAULT start_id;

DECLARE batch_end_id INT DEFAULT start_id + batch_size;

DECLARE loop_counter INT DEFAULT 0;

WHILE batch_end_id <= end_id DO

SELECT CONCAT('UPDATING FROM ', batch_start_id, ' TO: ', batch_end_id) as log;

UPDATE user SET st = 'NJ' WHERE user_id BETWEEN batch_start_id and batch_end_id;

SET batch_start_id = batch_start_id + batch_size;

SET batch_end_id = batch_end_id + batch_size;

SET loop_counter = loop_counter + 1;

END WHILE;

SELECT CONCAT('UPDATING FROM ', batch_end_id - batch_size, ' TO: ', end_id) as log, loop_counter;

UPDATE user SET is_active = true WHERE user_id BETWEEN (batch_end_id - batch_size) and end_id;

END$$

DELIMITER ;

call BatchUpdate(3000000, 8000000, 50000);

-- takes a total of 4 min 43.49 sec

The last update statement outside the while loop is used to update records that were missed by the loop. We also have some log statements to print out the chunks that are currently being updated. Since MySQL between is inclusive we are re-updating the batch_end_id of the previous chunk. Be mindful of this for your logic.

While the above BatchUpdate is running, run an update in another sql client.

docker exec -it mysql-updates bash

mysql -h 127.0.0.1 -P 3306 -u root -p # password is Password1234

update updates.user set name = 'James Holden' where user_id = 3300000;

exit

This update executes fast. Although the large update takes longer since we are doing it in batches, we can see how this approach enables other transactions to edit the data.

Conclusion

You can tear down your docker containers using the following commands.

docker stop mysql-updates

docker rm -f mysql-updates

To recap, we saw

- how a big update can lock other updates and delete operations and cause a

Lock wait timeouterror. - how updating in smaller batches execute faster per batch and thus locks will only have to wait a few seconds, if any.

An important point to note here is the order of updates. When running batch updates and single updates on the same column of a record the latest one will be applied. You can also do this batched updates in parallel, but you have to be careful not to run into any deadlocks.

If the update logic is more complex and your table has an index you, can use SELECT ... FOR UPDATE with SKIP LOCKED or NOWAIT features as shown here.

The next time you are doing a large update on a table, consider running the updates in chunks to keep other transactions from being affected by lock timeout errors.

Further reading

References:

Hello, does the stored procedure commit the update after each batch? or only after the last batch is executed? also i'm getting Error Code 2013 Lost connection to MySQL server during query when the batch size is small. TIA!

The error message is "Error Code 2013 Lost connection to MySQL server during query"