How to unit test sql transforms in dbt

Introduction

With the recent advancements in data warehouses and tools like dbt most transformations(T of ELT) are being done directly in the data warehouse. While this provides a lot of functionality out of the box, it gets tricky when you want to test your sql code locally before deploying to production. If you have wondered

how do I implement unit test on sql transforms ?

How do I create the test-data to test sql logic ?

What is an easy and dependable way to test my sql transforms before deploying my code ?

then, this post is for you. In this post we build on top of a very popular open source tool called dbt . We will see how we can run unit test against mock data locally. In software engineering, a unit test refers to testing a single piece of logic, be it encapsulated in a function or method.

In the context of sql testing, we defined a unit as a single model(which is a sql select statement in dbt). If you are unfamiliar with how dbt works, it is recommended to read this dbt tutorial

before proceeding.

Setup

If you would like to follow along you will need

We will build on a simple ELT pipeline using dbt and Postgres. In real projects you usually have a local environment where you develop and a stage and a production environment. Production environment will have the data used by the end users. For this example we will assume that

- the local environment where you develop is called

dev - the data used by end users are in the

prodenvironment.

Code

You can clone the repository, setup a dev and prod data warehouse using the setup_script.sh as shown below.

git clone https://github.com/josephmachado/unit_test_dbt.git && cd unit_test_dbt

chmod u+rwx setup_script.sh tear_down_script.sh run_dbt.sh # permissions to execute script

export PGPASSWORD=password # set password as env variable to not have to type it again

./setup_script.sh

The setup_script.sh creates dev and prod data warehouses, creates startdataengg.raw_greeting_events table in both environments and loads some data for that table in prod.

This ELT pipeline transforms a base_greeting_events table that is already present in the data warehouse into a usable fct_greeting_events table.

Let’s say we want to test if the sql transformation from base_greeting_events to fct_greeting_events is correct. dbt tests for data correctness after the data has been processed and this would be not ideal in a production environment. We want to test for logic correctness locally using a mock input and comparing it against an expected mock output.

We can do this using

- A conditional logic that uses mock input instead of data from

base_greeting_eventsonly in dev environment. - Writing a custom macro to check if two sets of data are the same. In our case we will use this to check the mock inputs and mock outputs equality.

Conditional logic to read from mock input

In the model file at models/staging/stg_greeting_events.sql you can see that we have a logic which selects the input model based on the environment.

with source as (

{% if target.name == 'dev' %}

select * from {{ ref('input_base_greeting_events') }}

{% else %}

select * from {{ ref('base_greeting_events') }}

{% endif %}

),

--select statement

When running dbt in dev environment we use the model input_base_greeting_events which is the mock input present in the location data/input_base_greeting_events.csv. In any other environment we use the table base_greeting_events.

Custom macro to test for equality

We can write custom sql queries and reuse them across our project. These custom queries are called macros. There are 2 main types of tests in dbt, they are

- Schema tests: Queries which return the number 0 to pass else fails.

- Data test: Queries that return 0 records to pass else fails.

We will write a custom Schema test which returns 0 when the models being compared are the same. You can see our macro in the location macros/test_equality.sql.

{% macro test_equality(model) %} -- macro definition

{% set compare_model = kwargs.get('compare_model') %} -- get compare_model input parameter

{% set env = kwargs.get('env') %} -- get env input parameter

{%- if target.name == env -%} -- check if env input parameter matches the current environment

select count(*) from ((select * from {{ model }} except select * from {{ compare_model }} ) union (select * from {{ compare_model }} except select * from {{ model }} )) tmp

{%- else -%}

select 0 -- if no input or different env return true

{%- endif -%}

{% endmacro %}

The jinja templates are a way to write conditional, loop and other control flow logic into sql. In the above script we

- Define a macro.

- Get compare_model and env input parameters.

- If env parameter is the current environment run the equality check sql query and return 0 or >0 which translates to true or false respectively.

- If not return true.

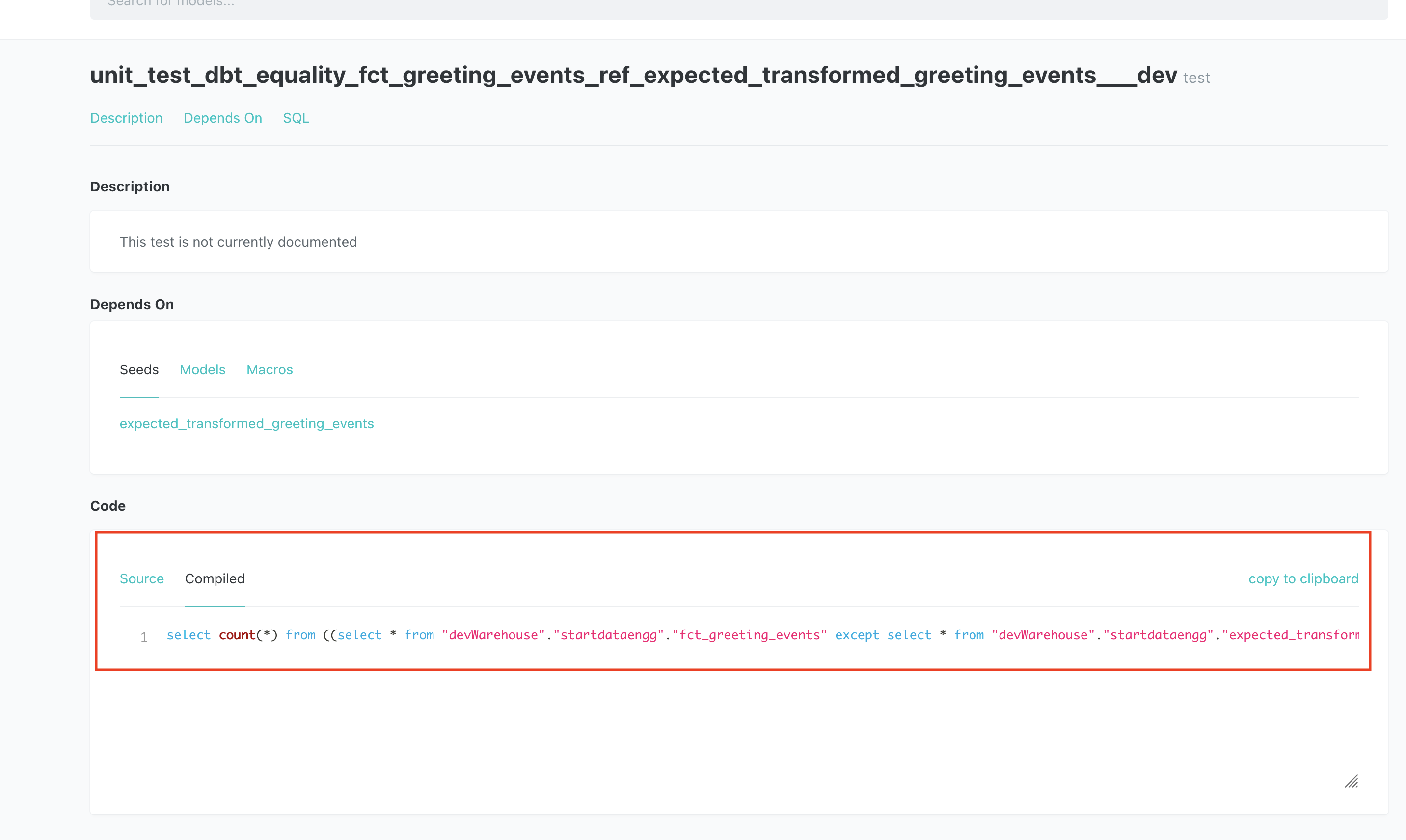

The equality sql script is a query to check if the models being compared are the same. The mock output is defined at data/expected_transformed_greeting_events.csv. The parsed sql script is shown below.

select count(*)

from (

(

select *

from devWarehouse.startdataengg.fct_greeting_events

except

select *

from devWarehouse.startdataengg.expected_transformed_greeting_events

)

union

(

select *

from devWarehouse.startdataengg.expected_transformed_greeting_events

except

select *

from devWarehouse.startdataengg.fct_greeting_events

)

) tmp

Setup environment specific test

In dbt the test are run after the models are materialized. The tests are defined in a .yml file. The equality test is defined at models/marts/schema.yml.

version: 2

models:

- name: fct_greeting_events

# other tests

tests:

- unit_test_dbt.equality:

compare_model: ref('expected_transformed_greeting_events')

env: dev

From the above snippet you can see that the equality macro is called with the input parameters compare_model: ref('expected_transformed_greeting_events') and env: dev.

Run ELT using dbt

You can run dbt for dev environment by running the script.

./run_dbt.sh

If you look at the run_dbt.sh script you can see that we run the following commands

- debug: to check if the connections work.

- seed: we use this to load in mock data from the data folder. We only run this for dev environment.

- run: to run the transformations.

- test: to run the tests defined in

schema.ymlfiles. - docs generate: to generate documentation for the UI.

- docs serve: start web-server to view the documentation and compiled sql scripts.

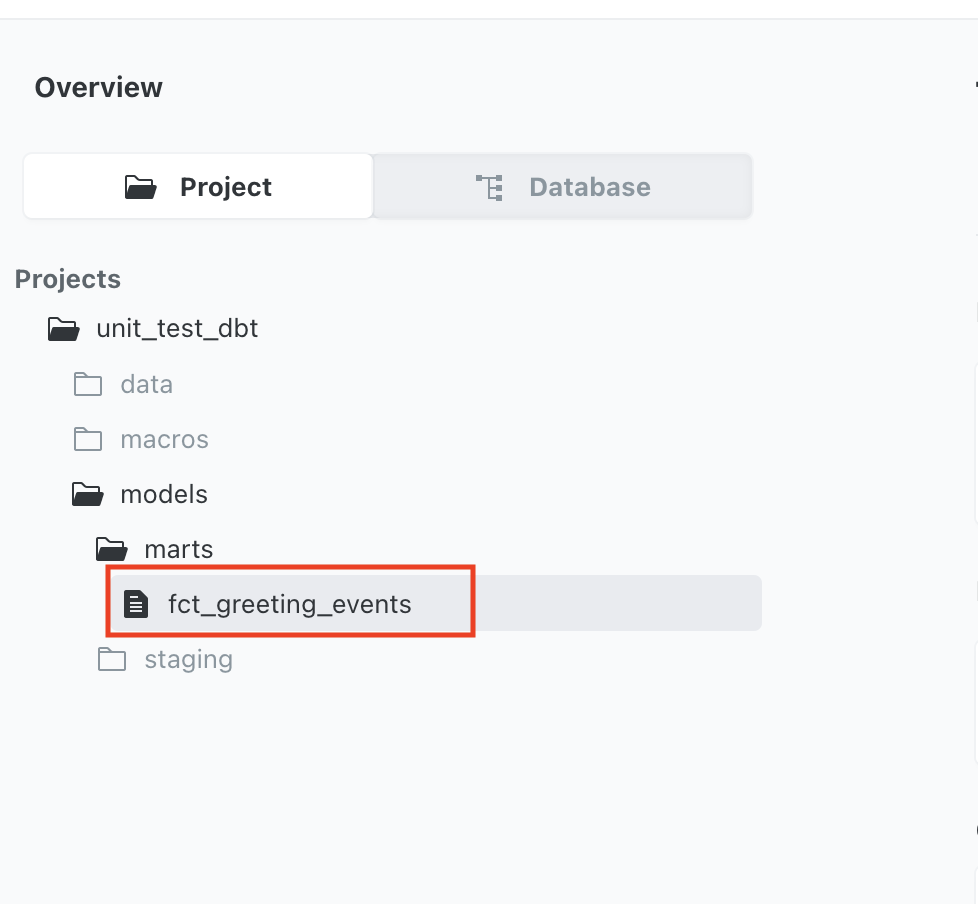

In the UI, if you go to fct_greeting_events and click on the data lineage icon on the bottom right, you will see that the base dataset used is input_base_greeting_events which is the mock input present in the location data/input_base_greeting_events.csv.

If you click on the unit_test_dbt_equality_fct_greeting_events_ref_expected_transformed_greeting_events___dev test in the fct_greeting_events UI, you can see the compiled sql used to check for equality.

Use ctrl+c to stop the web-server. You can run dbt in prod environment using

./run_dbt.sh prod

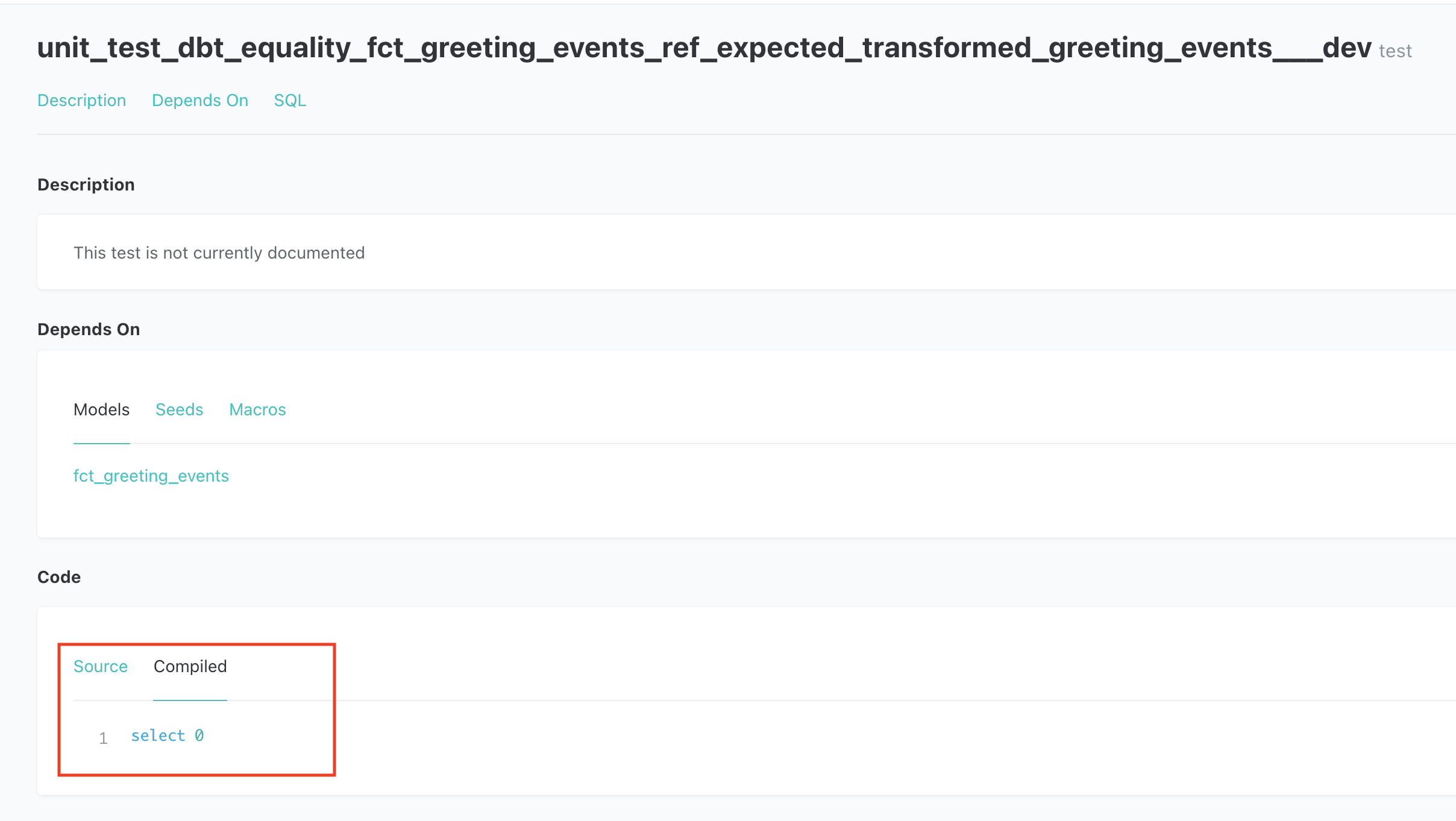

You will see that the base dataset used is base_greeting_events and the equality test sql is just select 0.

You can also use pre built packages available for dbt. One of the popular ones is dbt utils

, this package also has a equality test with better functionality, but is not env specific.

You can tear down the docker containers using

./tear_down_script.sh

Conclusion

Hope this article gives you a good idea of how to get started with unit testing sql transforms in dbt. This will help keep development cycles shorter and prevents unintended modification of production data. The next time you are writing a ELT pipeline in dbt consider writing a unit test case to test the sql script locally before deploying.

There are multiple approaches for unit testing sql, that you will encounter in the wild. A good approach would be to start with something small and evolve the testing pattern as your data testing needs and team size grows.

Please leave any questions or comments in the comment section below.

Further reading

References:

Thank you very much for this great tutorial I tried to follow it with dbt 0.20.0 and struggled all day until I figured out, that they changed the way schema tests work. now a test with select 0 fails because it still has one row. the solution i found was to put select 0 limit 0 in the else block and remove the count from the if block and now it works like a charm. thank you very much just leaving this here in case someone else has the same troubles as me

Thanks for the post.

With dbt version 1.4.5 the code is not working so I made some changes. such as:

config-version: 2tp dbt_project.ymlselect count(*) from ((select * from {{ model ...toselect * from ((select * from {{ model...and fromselect 0toselect 0 limit 0Finally I made a modification to use psql inside the docker container so you dont need to run the code on the local machine.